
library(tidyverse)

library(ggplot2)

library(lubridate)

library(readxl)

library(hrbrthemes)

library(viridis)

library(ggpubr)

knitr::opts_chunk$set(echo = TRUE, warning=FALSE, message=FALSE)

Theresa Szczepanski

November 11, 2022

The MCAS_2022 data frame contains performance results from 495 students from Rising Tide Charter Public School on the Spring 2022 Massachusetts Comprehensive Assessment System (MCAS) tests.

For each student, there are values reported for 256 different variables, which consist of information from four broad categories, including:

Demographic characteristics of the students themselves (e.g., race, gender, date of birth, town, grade level, years in school, years in Massachusetts, and low income, title1, IEP, 504, and EL status).

Key assessment features, including subject, test format, and accommodations provided.

Performance metrics: This includes a student’s score on individual item strands, e.g., mitem1-mitem42.

See the MCAS_2022 data frame summary and codebook in the appendix for further details.

The second data set, MCAS_G9Sci2022_Item, is 42 by 9 and consists of 9 variables with information pertaining to the 42 questions on the 2022 HS Introductory Physics Item Report. The variables can be broken down into 2 categories:

Details about the content of a given test item:

This includes the content Reporting Category (MF (motion and forces), WA (waves), and EN (energy)), the Standard from the 2016 STE Massachusetts Curriculum Framework, the Item Description providing the details of what specifically was asked of students, and the points available for a given question, item Possible Points.

Summary Performance Metrics:

For each item, the state reports the percentage of points earned by students at Rising Tide, RT Percent Points, the percentage of available points earned by students in the state, State Percent Points, and the difference between the percentage of points earned by Rising Tide students and the percentage of points earned by students in the state, RT-State Diff.

Lastly, CU306 Disability is a 3 X 5 data frame consisting of summary performance data by Reporting Category for students with disabilities, most importantly including RT Percent Points and State Percent Points.

When considering our student performance data, we hope to address the following broad questions:

What adjustments (if any) should be made at the Tier 1 level, i.e., curricular adjustments for all students?

What would be the most beneficial areas of focus for a targeted intervention course for students struggling to meet or exceed performance expectations?

Are there notable differences in student performance for students with and without disabilities?

To read in, tidy, and join our data frames for each content area we will use the following functions. This still needs to be made more adaptable to include Math and ELA.

#Item analysis Read in

#subject must be: "math", "ela", or "science"

read_item<-function(sheet_name, subject){

subject_item<-case_when(

subject == "science"~"sitem",

subject == "math"~"mitem",

subject == "ela"~"eitem"

)

read_excel("_data/2022MCASDepartmentalAnalysis.xlsx", sheet = sheet_name,

skip = 1, col_names= c(subject_item, "Type", "Reporting Category", "Standard", "item Desc", "delete", "item Possible Points","RT Percent Points", "State Percent Points", "RT-State Diff")) %>%

select(!contains("delete"))%>%

filter(!str_detect(sitem,"Legend|legend"))%>%

mutate(sitem= as.character(sitem))%>%

separate(c(1), c("sitem", "delete"))%>%

select(!contains("delete"))%>%

mutate(sitem =

str_c(subject_item, sitem))

}

# Test<-read_item("SG9Physics", "science")

# view(Test)

# Test2<-read_item("SG8", "science")

# view(Test2)

Test3<-read_item("SG5", "science")

view(Test3)

# item_sheets<-excel_sheets("_data/2022MCASDepartmentalAnalysis.xlsx")

# item_sheets

# science_all<- map_dfr(

# excel_sheets("_data/ActiveDuty_MaritalStatus.xls")[1:3],"science",

# read_item)

# science_all

#Filter, rename variables, and mutate values of variables on read-in

MCAS_2022<-read_csv("_data/PrivateSpring2022_MCAS_full_preliminary_results_04830305.csv",

skip=1)%>%

select(-c("sprp_dis", "sprp_sch", "sprp_dis_name", "sprp_sch_name", "sprp_orgtype",

"schtype", "testschoolname", "yrsindis", "conenr_dis"))%>%

#Recode all nominal variables as characters

mutate(testschoolcode = as.character(testschoolcode))%>%

# mutate(sasid = as.character(sasid))%>%

mutate(highneeds = as.character(highneeds))%>%

mutate(lowincome = as.character(lowincome))%>%

mutate(title1 = as.character(title1))%>%

mutate(ever_EL = as.character(ever_EL))%>%

mutate(EL = as.character(EL))%>%

mutate(EL_FormerEL = as.character(EL_FormerEL))%>%

mutate(FormerEL = as.character(FormerEL))%>%

mutate(ELfirstyear = as.character(ELfirstyear))%>%

mutate(IEP = as.character(IEP))%>%

mutate(plan504 = as.character(plan504))%>%

mutate(firstlanguage = as.character(firstlanguage))%>%

mutate(natureofdis = as.character(natureofdis))%>%

mutate(spedplacement = as.character(spedplacement))%>%

mutate(town = as.character(town))%>%

mutate(ssubject = as.character(ssubject))%>%

#Recode all ordinal variable as factors

mutate(grade = as.factor(grade))%>%

mutate(levelofneed = as.factor(levelofneed))%>%

mutate(eperf2 = recode_factor(eperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(eperflev = recode_factor(eperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"DNT" = "DNT",

"ABS" = "ABS",

.ordered = TRUE))%>%

mutate(mperf2 = recode_factor(mperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(mperflev = recode_factor(mperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"INV" = "INV",

"ABS" = "ABS",

.ordered = TRUE))%>%

# The science variables contain a mixture of legacy performance levels and

# next generation performance levels which needs to be addressed in the ordering

# of these factors.

mutate(sperf2 = recode_factor(sperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(sperflev = recode_factor(sperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"INV" = "INV",

"ABS" = "ABS",

.ordered = TRUE))%>%

#recode DOB using lubridate

mutate(dob = mdy(dob,

quiet = FALSE,

tz = NULL,

locale = Sys.getlocale("LC_TIME"),

truncated = 0

))

#view(MCAS_2022)

MCAS_2022

After examining the summary (see appendix), I chose to:

Filter:

SchoolID: There are several variables that identify our school; I removed all but one, testschoolcode.

StudentPrivacy: I left the sasid variable, which is a student identifier number, but eliminated all values corresponding to students’ names.

dis: We are a charter school within our own unique district; therefore, any “district level” data is identical to our “school level” data.

Rename

I have not yet renamed variables, but there are some naming patterns to note:

e before most ELA MCAS student item performance metric variables

m before most Math MCAS student item performance metric variables

s before most Science MCAS student item performance metric variables

Mutate

I left as doubles: variables such as mitem1 and sgp.

Recode to char: nominal variables such as town.

Refactor as ord: ordinal variables such as mperflev.

Recode to date: dob, using lubridate.

G9Sci_CU306Dis<-read_excel("_data/MCAS CU306 2022/CU306MCAS2022PhysicsGrade9ByDisability.xlsm",

sheet = "Disabled Students",

col_names = c("Reporting Category", "Possible Points", "RT%Points",

"State%Points", "RT-State Diff"))%>%

filter(`Reporting Category` == "Energy"|`Reporting Category`== "Motion, Forces, and Interactions"| `Reporting Category` == "Waves" )

#view(G9Sci_CU306Dis)

G9Sci_CU306Dis

G9Sci_CU306NonDis<-read_excel("_data/MCAS CU306 2022/CU306MCAS2022PhysicsGrade9ByDisability.xlsm",

sheet = "Non-Disabled Students",

col_names = c("Reporting Category", "Possible Points", "RT%Points",

"State%Points", "RT-State Diff"))%>%

filter(`Reporting Category` == "Energy"|`Reporting Category`== "Motion, Forces, and Interactions"| `Reporting Category` == "Waves" )

G9Sci_CU306NonDis

I am interested in analyzing 9th grade Science performance. To do this, I select a subset of our data frame:

G9ScienceMCAS_2022 <- select(MCAS_2022, contains("sitem"), gender, grade, yrsinsch,

race, IEP, `plan504`, sattempt, sperflev)%>%

filter((grade == 9) & sattempt != "N")

G9ScienceMCAS_2022<-select(G9ScienceMCAS_2022, !(contains("43")|contains("44")|contains("45")))

#view(G9ScienceMCAS_2022)

G9ScienceMCAS_2022

When I compared this data frame to the state-reported analysis, I found that my data frame has 69 entries while the state reports data on only 68 students. I will have to investigate this further.

Since I will join this data frame with the MCAS_G9Sci2022_Item, using sitem as the key, I need to pivot this data set longer.

As expected, we now have 42 X 69 = 2898 rows.
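The code for this pivot is not shown in this section. A minimal sketch of what the step might look like, assuming the item columns share the prefix sitem; the data frame toy_wide and its values are invented stand-ins for G9ScienceMCAS_2022, not the real data:

```r
library(dplyr)
library(tidyr)

# Hypothetical stand-in for G9ScienceMCAS_2022: 2 students, 3 item columns
toy_wide <- tibble(
  sitem1 = c(1, 0),
  sitem2 = c(2, 1),
  sitem3 = c(0, 1),
  gender = c("F", "M"),
  IEP    = c("0", "1")
)

# One row per student-item pair: the item name goes to `sitem`,
# the points earned on that item go to `sitem_score`
toy_long <- toy_wide %>%
  pivot_longer(starts_with("sitem"),
               names_to = "sitem",
               values_to = "sitem_score")

nrow(toy_long)  # number of students times number of items
```

With the real data the same call would be expected to produce one row for each of the 42 items for each of the 69 students.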

Now, we should be ready to join our data sets using sitem as the key. We should have a 2,898 by (10 + 8) = 2,898 by 18 data frame. We will also check our raw data against the performance data reported by the state in the item report by calculating percent_earned by Rising Tide students, comparing it to the reported figure RT Percent Points, and storing the difference in earned_diff.

As expected, we now have a 2,898 X 18 data frame and the earned_diff values all round to 0.
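The join and the consistency check are likewise not shown here. The sketch below illustrates the idea on invented data; toy_long, toy_items, and the assumption that RT Percent Points is stored as a proportion between 0 and 1 are all hypothetical and should be checked against the actual item report:

```r
library(dplyr)

# Hypothetical long-format student scores and a hypothetical item report
toy_long <- tibble(
  sitem       = c("sitem1", "sitem1", "sitem2", "sitem2"),
  sitem_score = c(1, 0, 2, 1)
)
toy_items <- tibble(
  sitem                  = c("sitem1", "sitem2"),
  `item Possible Points` = c(1, 2),
  `RT Percent Points`    = c(0.50, 0.75)  # ASSUMPTION: stored as proportions
)

# Attach item details to every student-item row, using sitem as the key
toy_joined <- left_join(toy_long, toy_items, by = "sitem")

# Recompute the share of points earned per item and compare with the report
toy_check <- toy_joined %>%
  group_by(sitem) %>%
  summarise(
    percent_earned = sum(sitem_score) / sum(`item Possible Points`),
    reported       = first(`RT Percent Points`),
    .groups = "drop"
  ) %>%
  mutate(earned_diff = percent_earned - reported)

toy_check
```

If the raw scores and the state's report agree, every earned_diff value should round to 0, which is the check described above.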


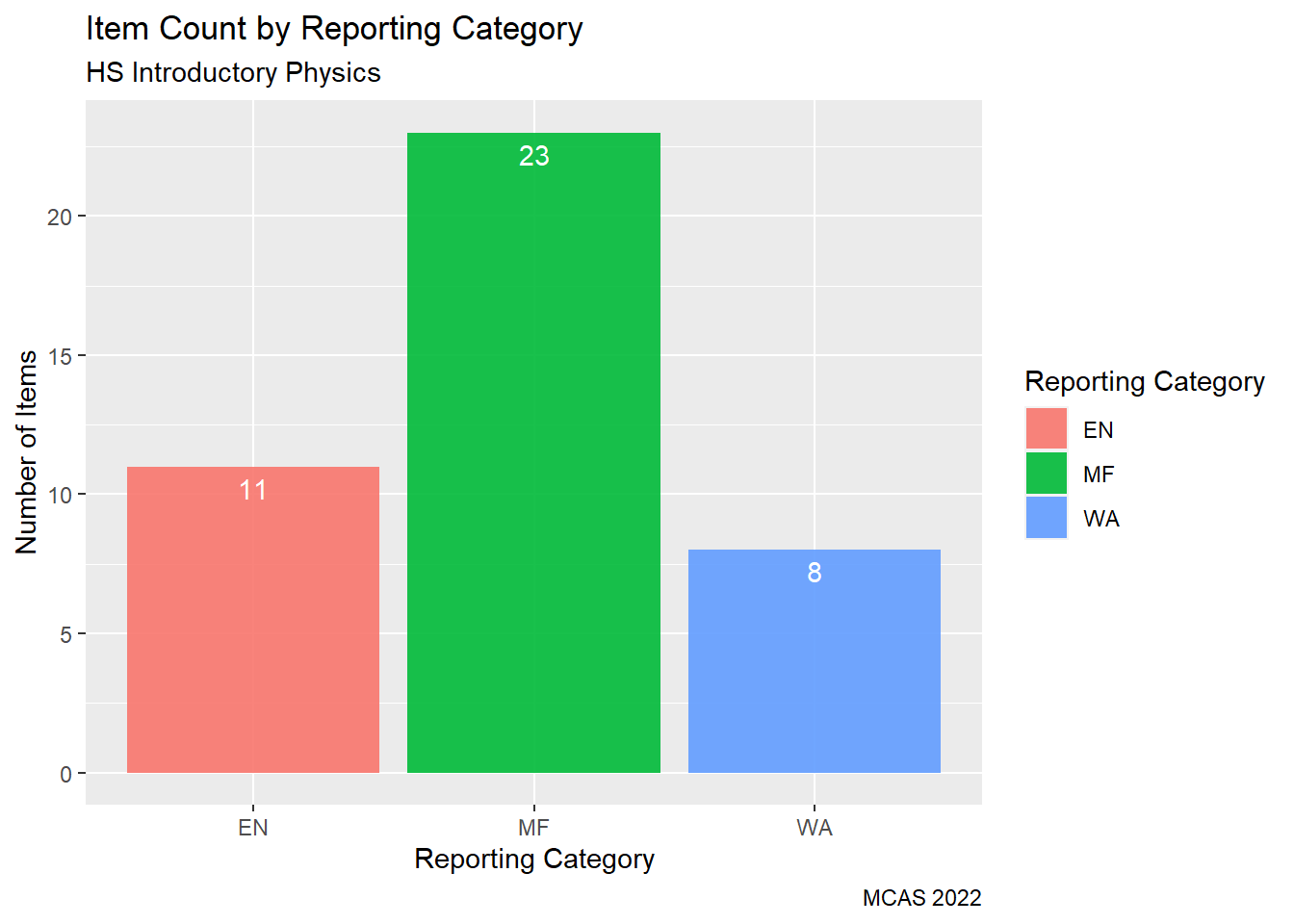

What reporting categories were emphasized by the state?

We can see from our summary that 50% of the exam points (30 of the available 60) come from questions in the Motion and Forces reporting category, and 23 of the 42 questions were from this category.

G9Science_Cat_Total<-MCAS_G9Sci2022_Item%>%

select(`sitem`, `item Possible Points`, `Reporting Category`, `State Percent Points`, `RT Percent Points`, `RT-State Diff`)

G9Science_Cat_Total%>%

group_by(`Reporting Category`)%>%

summarise(available_points = sum(`item Possible Points`, na.rm=TRUE),

RT_percent_points = mean(`RT Percent Points`, na.rm = TRUE),

State_percent_points = mean(`State Percent Points`, na.rm = TRUE))

ggplot(G9Science_Cat_Total, aes(x= `Reporting Category`, fill = `Reporting Category`))+

geom_bar( alpha=0.9) +

labs(title = "Item Count by Reporting Category",

subtitle = "HS Introductory Physics",

caption = "MCAS 2022",

y = "Number of Items",

x = "Reporting Category") +

geom_text(aes(label = after_stat(count)), stat = "count", vjust = 1.5, colour = "white")

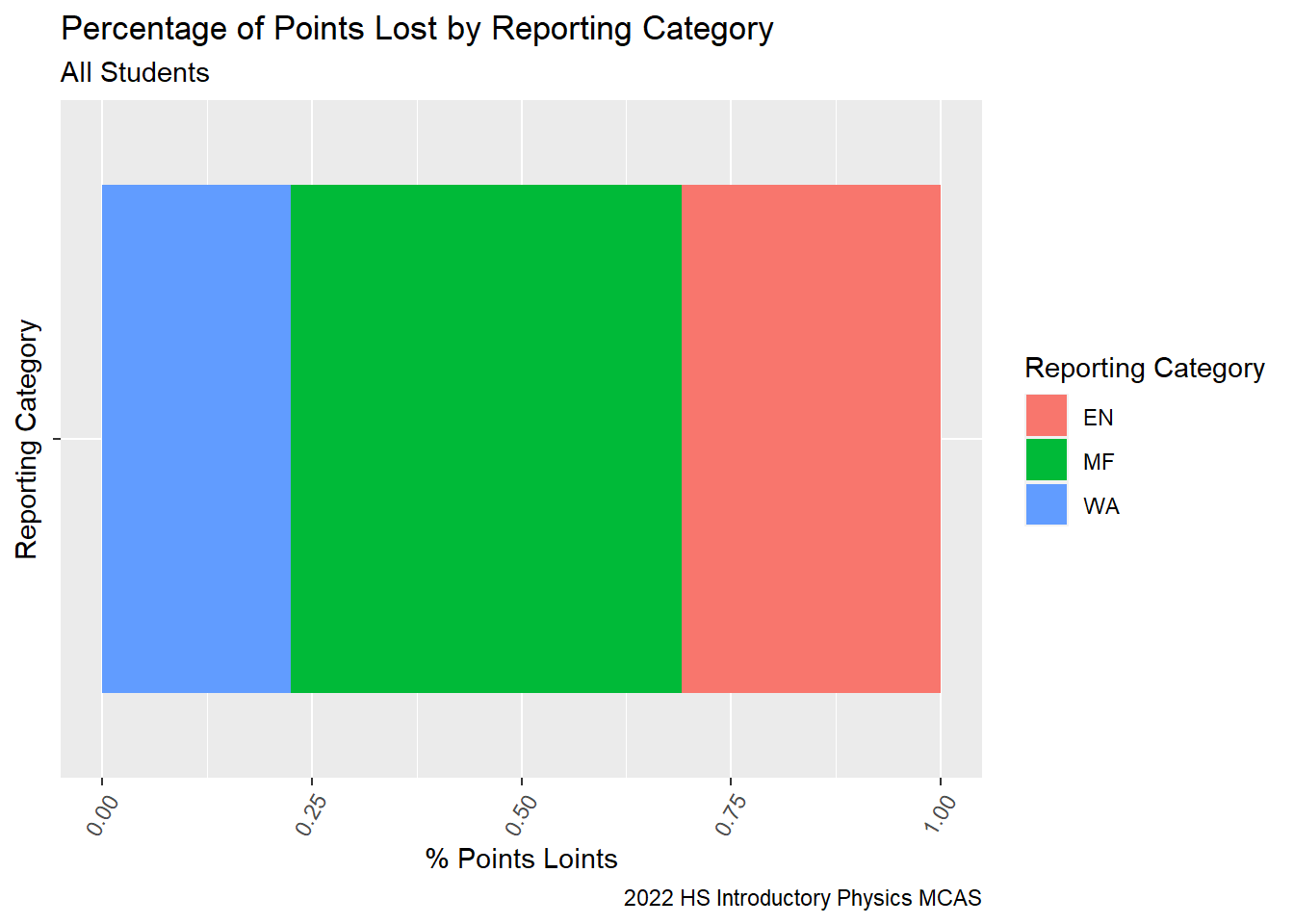

Where did RT students lose most of their points? Students lost the largest proportion of points in the Motion and Forces category, which was the Reporting Category for 50% of the points on the exam. The points lost by Rising Tide students seem to be proportional to the number of points available for each category.

G9Science_Percent_Loss<-G9Science_StudentItem%>%

select(`sitem`, `Reporting Category`, `item Possible Points`, `sitem_score`)%>%

mutate(`points_lost` = `item Possible Points` - `sitem_score`)%>%

#ggplot(df, aes(x='', fill=option)) + geom_bar(position = "fill")

ggplot( aes(x='',fill = `Reporting Category`, y = `points_lost`)) +

geom_bar(position="fill", stat = "identity") + coord_flip()+

labs(subtitle ="All Students" ,

y = "% Points Lost",

x= "Reporting Category",

title = "Percentage of Points Lost by Reporting Category",

caption = "2022 HS Introductory Physics MCAS")+

theme(axis.text.x=element_text(angle=60,hjust=1))

G9Science_Percent_Loss

Did Rising Tide students’ performance relative to the state vary by content reporting categories?

#Need to use CU306 and not take average of percentages...but percent of available points

# G9Science_Cat_Total%>%

# group_by(`Reporting Category`)%>%

# summarise(available_points = sum(`item Possible Points`, na.rm=TRUE),

# RT_percent_points = round(mean(`RT Percent Points`, na.rm = TRUE),2),

# State_percent_points = round(mean(`State Percent Points`, na.rm = TRUE),2))%>%

# pivot_longer(contains("percent"), names_to = "Group", values_to = "percent_points")%>%

# ggplot( aes(fill = `Group`, y=`percent_points`, x=`Reporting Category`)) +

# geom_bar(position="dodge", stat="identity") +

# labs(subtitle ="All Students" ,

# y = "Mean % Points",

# x= "Reporting Category",

# title = "Percent Points Earned by Reporting Category",

# caption = "2022 HS Introductory Physics MCAS")+

# theme(axis.text.x=element_text(angle=60,hjust=1))+

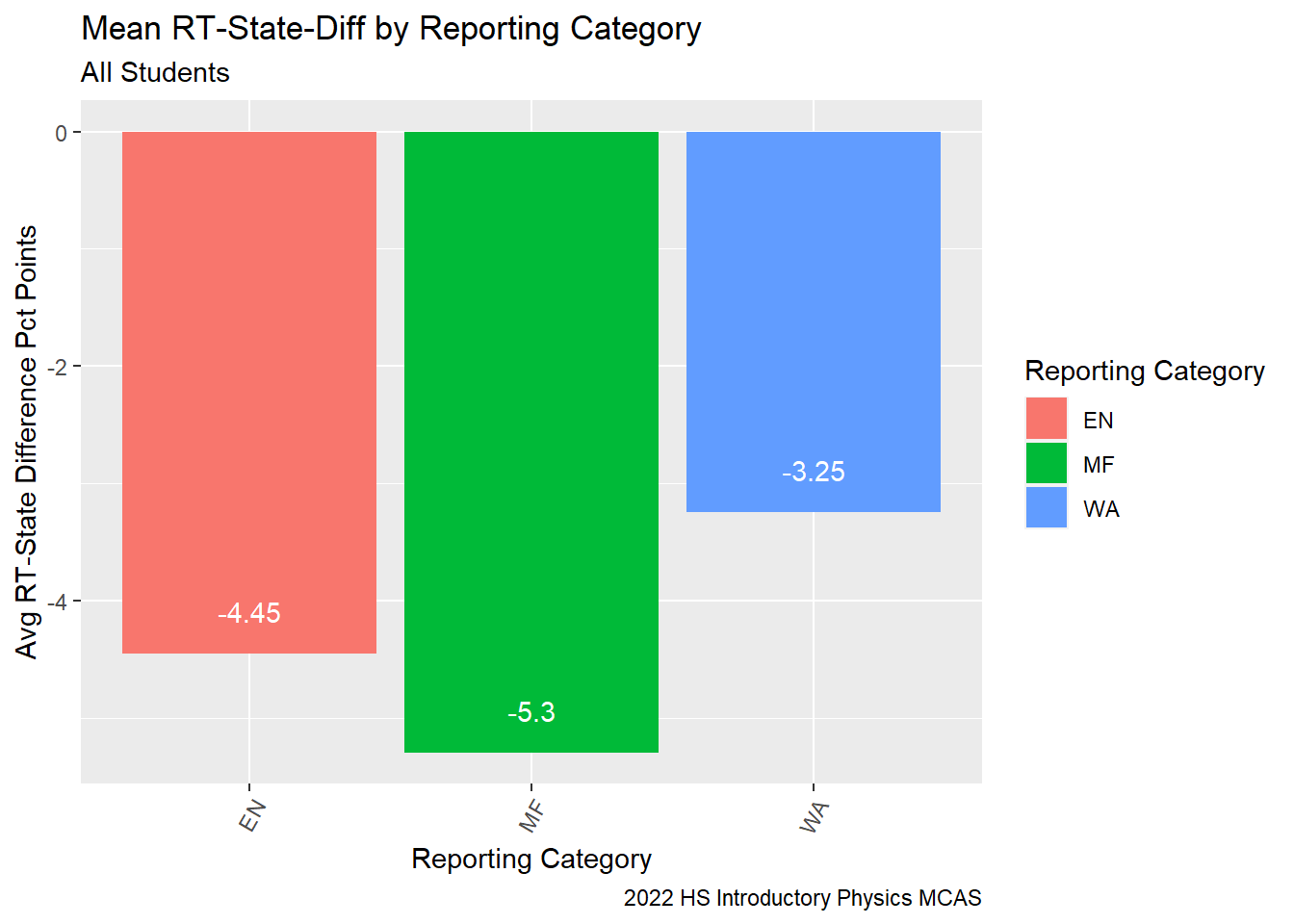

# geom_text(aes(label = `percent_points`), vjust = 1.5, colour = "white", position = position_dodge(.9))As we can see from our table, on average, our students earned fewer points relative to their peers in the state on items across all three reporting categories.
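The comment at the top of the commented-out chunk warns against averaging item percentages instead of computing the percent of available points. A small sketch with invented numbers shows why the two can differ when items carry different point values (toy_items and its values are hypothetical):

```r
library(dplyr)

# Hypothetical item report: two 1-point items and one 4-point item
toy_items <- tibble(
  `Reporting Category`   = c("MF", "MF", "MF"),
  `item Possible Points` = c(1, 1, 4),
  `RT Percent Points`    = c(0.90, 0.80, 0.40)
)

toy_summary <- toy_items %>%
  group_by(`Reporting Category`) %>%
  summarise(
    # Unweighted: every item counts equally, regardless of point value
    mean_of_item_pcts = mean(`RT Percent Points`),
    # Weighted: points earned divided by points available
    pct_of_avail_points =
      sum(`RT Percent Points` * `item Possible Points`) /
      sum(`item Possible Points`),
    .groups = "drop"
  )

toy_summary
```

In this toy case the unweighted mean is 0.70 while the share of available points is 0.55, because the 4-point item with the weakest result dominates the weighted figure.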

#view(MCAS_G9Sci2022_Item)

G9Science_Cat_StateDiff<-MCAS_G9Sci2022_Item%>%

select(`sitem`, `Reporting Category`, `item Possible Points`, `State Percent Points`, `RT-State Diff`)%>%

group_by(`Reporting Category`)%>%

summarise(avg_RT_State_Diff = round(mean(`RT-State Diff`, na.rm=TRUE),2),

sd_RT_State_Diff = sd(`RT-State Diff`, na.rm=TRUE),

med_RT_State_Diff = median(`RT-State Diff`, na.rm=TRUE),

sum_RT_State_Diff = sum(`RT-State Diff`, na.rm=TRUE))

G9Science_Cat_StateDiff

G9Science_Cat_MeanStateDiff<-G9Science_Cat_StateDiff%>%

ggplot( aes(fill = `Reporting Category`, y=`avg_RT_State_Diff`, x=`Reporting Category`)) +

geom_bar(position="dodge", stat="identity") +

labs(subtitle ="All Students" ,

y = "Avg RT-State Difference Pct Points",

x= "Reporting Category",

title = "Mean RT-State-Diff by Reporting Category",

caption = "2022 HS Introductory Physics MCAS")+

theme(axis.text.x=element_text(angle=60,hjust=1))+

geom_text(aes(label = `avg_RT_State_Diff`), vjust = -1.5, colour = "white")

G9Science_Cat_MeanStateDiff

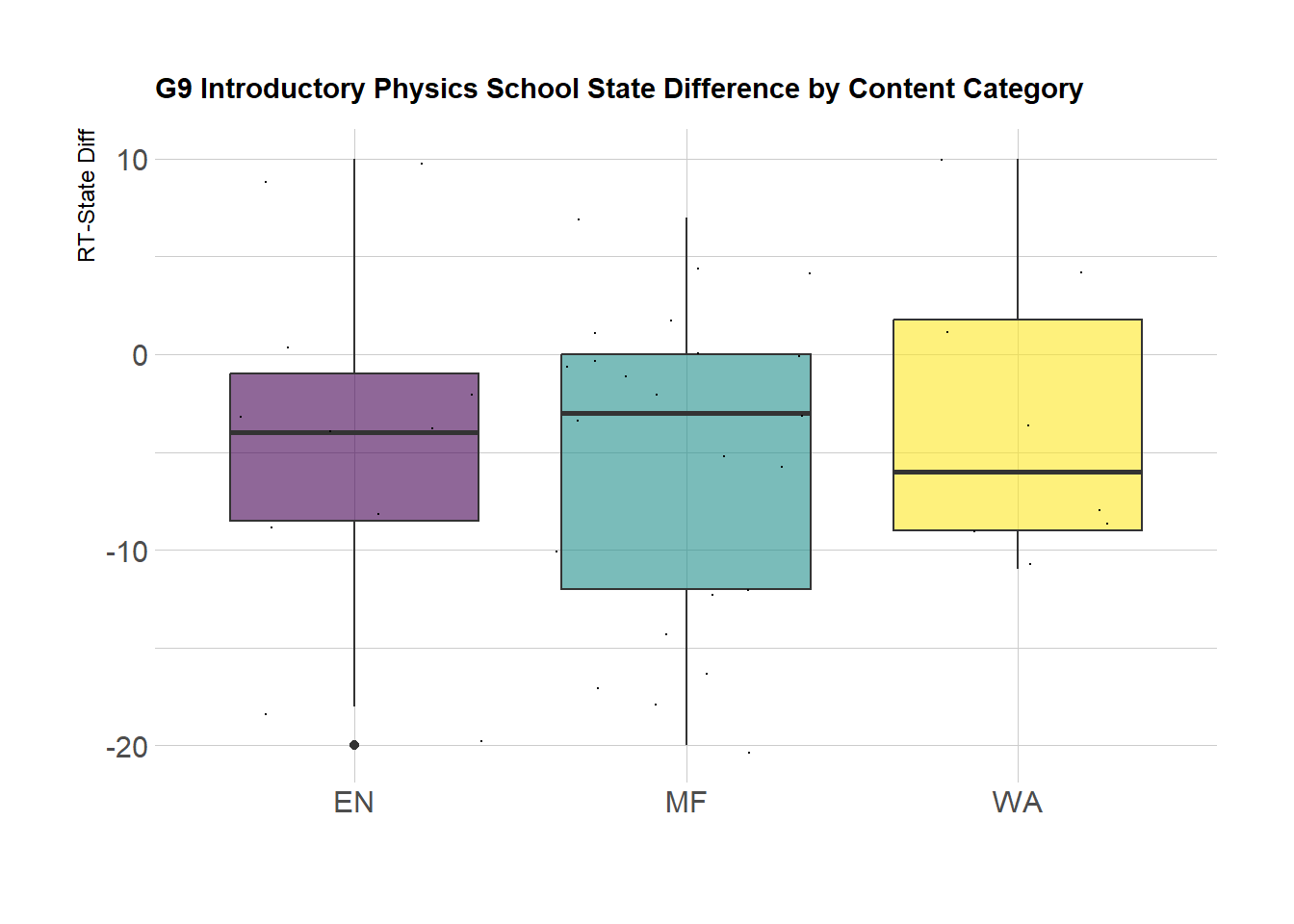

Here we see the distribution of RT-State Diff (the difference between the percentage of points earned on a given item by Rising Tide students and the percentage of points earned on the same item by their peers in the state) by reporting category. Motion and Forces appears to have the highest variability, so it would be worth looking at the specific question strands with the Physics teachers. (It would be helpful to add item labels to the dots.)

G9Science_Cat_Box <-MCAS_G9Sci2022_Item%>%

select(`sitem`, `Reporting Category`, `State Percent Points`, `RT-State Diff`)%>%

group_by(`Reporting Category`)%>%

ggplot( aes(x=`Reporting Category`, y=`RT-State Diff`, fill=`Reporting Category`)) +

geom_boxplot() +

scale_fill_viridis(discrete = TRUE, alpha=0.6) +

geom_jitter(color="black", size=0.1, alpha=0.9) +

theme_ipsum() +

theme(

legend.position="none",

plot.title = element_text(size=11)

) +

ggtitle("G9 Introductory Physics School State Difference by Content Category") +

xlab("")

G9Science_Cat_Box

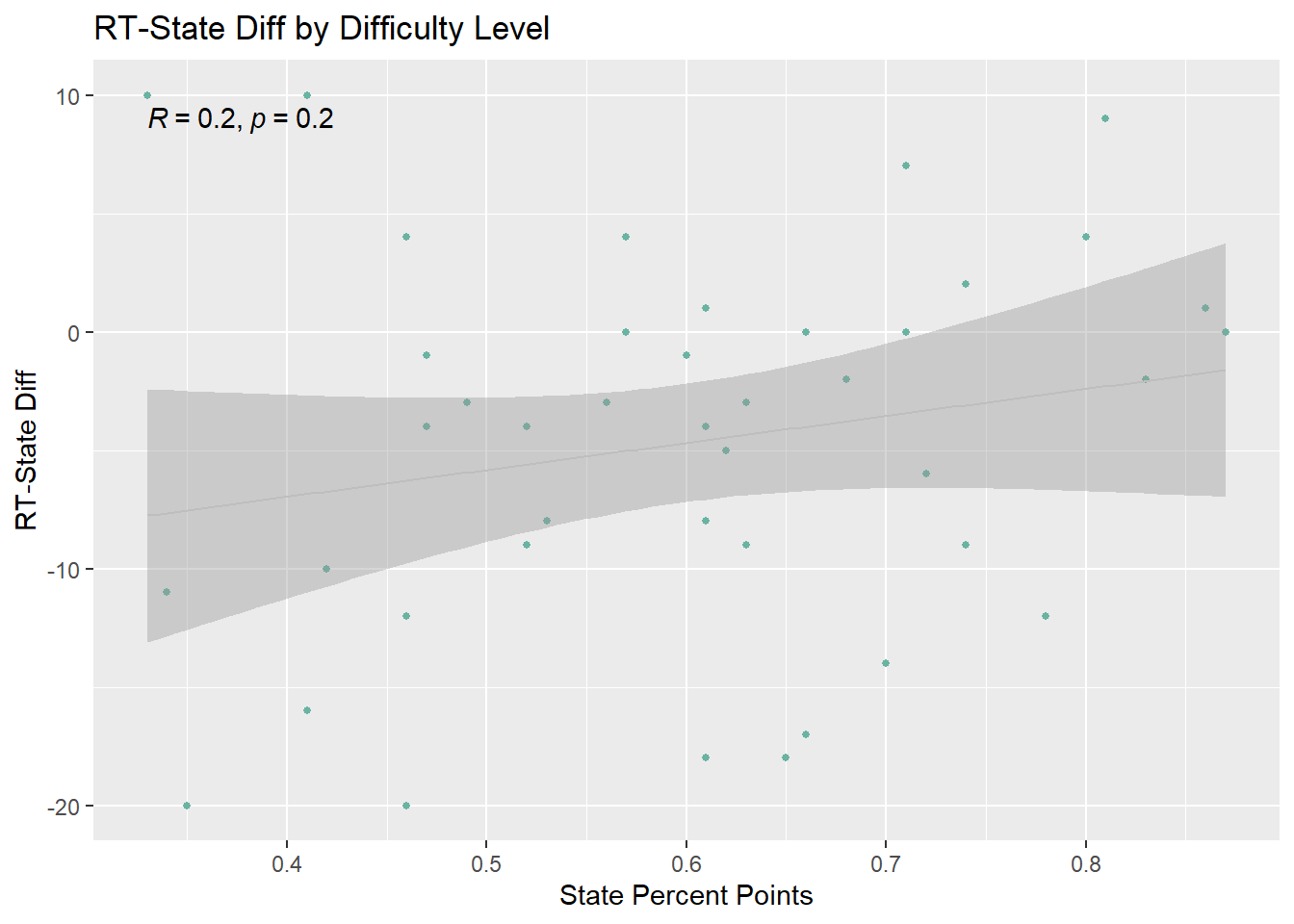

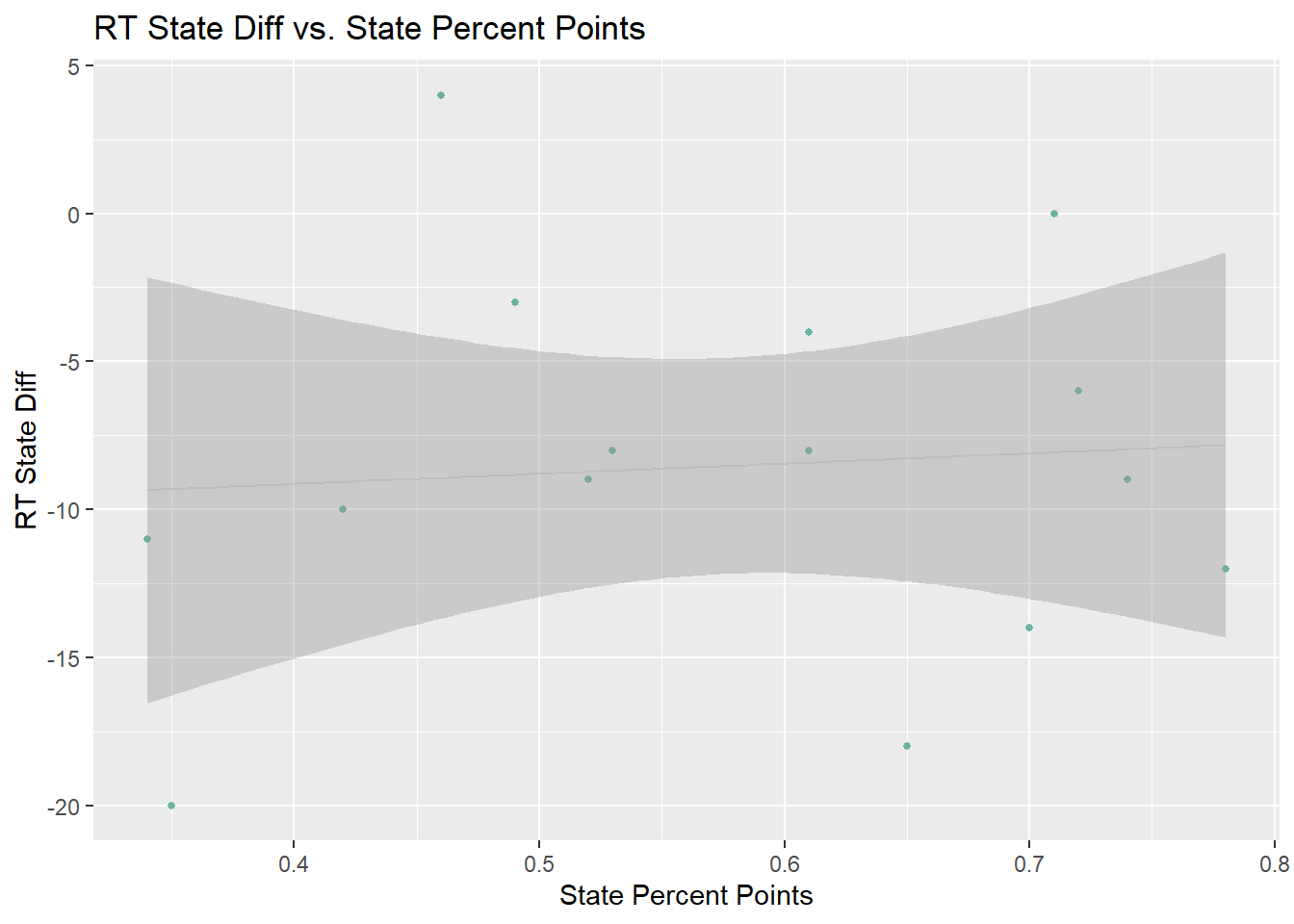

Can differences in Rising Tide student performance on an item and State performance on an item be explained by the difficulty level of an item?

When considering RT-State Diff against State Percent Points, this does not generally seem to be the case. Although the regression line suggests that RT-State Diff is more likely to be negative on items where students in the state earned fewer points, the p-value is not significant.
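For readers who want the correlation and its p-value as numbers rather than as a plot label, a minimal base R sketch follows. The vectors state_pct and rt_diff are invented stand-ins for State Percent Points and RT-State Diff, so the values it prints say nothing about the real exam:

```r
# Hypothetical item-level values, for illustration only
state_pct <- c(0.35, 0.42, 0.50, 0.58, 0.66, 0.71, 0.80, 0.88)
rt_diff   <- c(-6, -2, -5, 1, -3, 0, -4, 2)

# Pearson correlation with a two-sided test of H0: correlation = 0
ct <- cor.test(state_pct, rt_diff, method = "pearson")
ct$estimate
ct$p.value

# The slope test in a simple linear regression gives the same p-value
fit <- lm(rt_diff ~ state_pct)
slope_p <- summary(fit)$coefficients["state_pct", "Pr(>|t|)"]
slope_p
```

For a single predictor, the test on the regression slope and the Pearson correlation test are equivalent, which is why one p-value describes both the fitted line and the correlation.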

G9Sci_Diff_Dot<-MCAS_G9Sci2022_Item%>%

select(`State Percent Points`, `RT-State Diff`, `Reporting Category`)%>%

ggplot( aes(x=`State Percent Points`, y=`RT-State Diff`)) +

geom_point(size = 1, color="#69b3a2")+

geom_smooth(method="lm",color="grey", size =.5 )+

labs(title = "RT-State Diff by Difficulty Level", y = "RT-State Diff",

x = "State Percent Points") +

stat_cor(method = "pearson")#+facet(vars(`Reporting Category`)) +#label.x = 450, label.y = 550)

G9Sci_Diff_Dot

How did students perform based on key words?

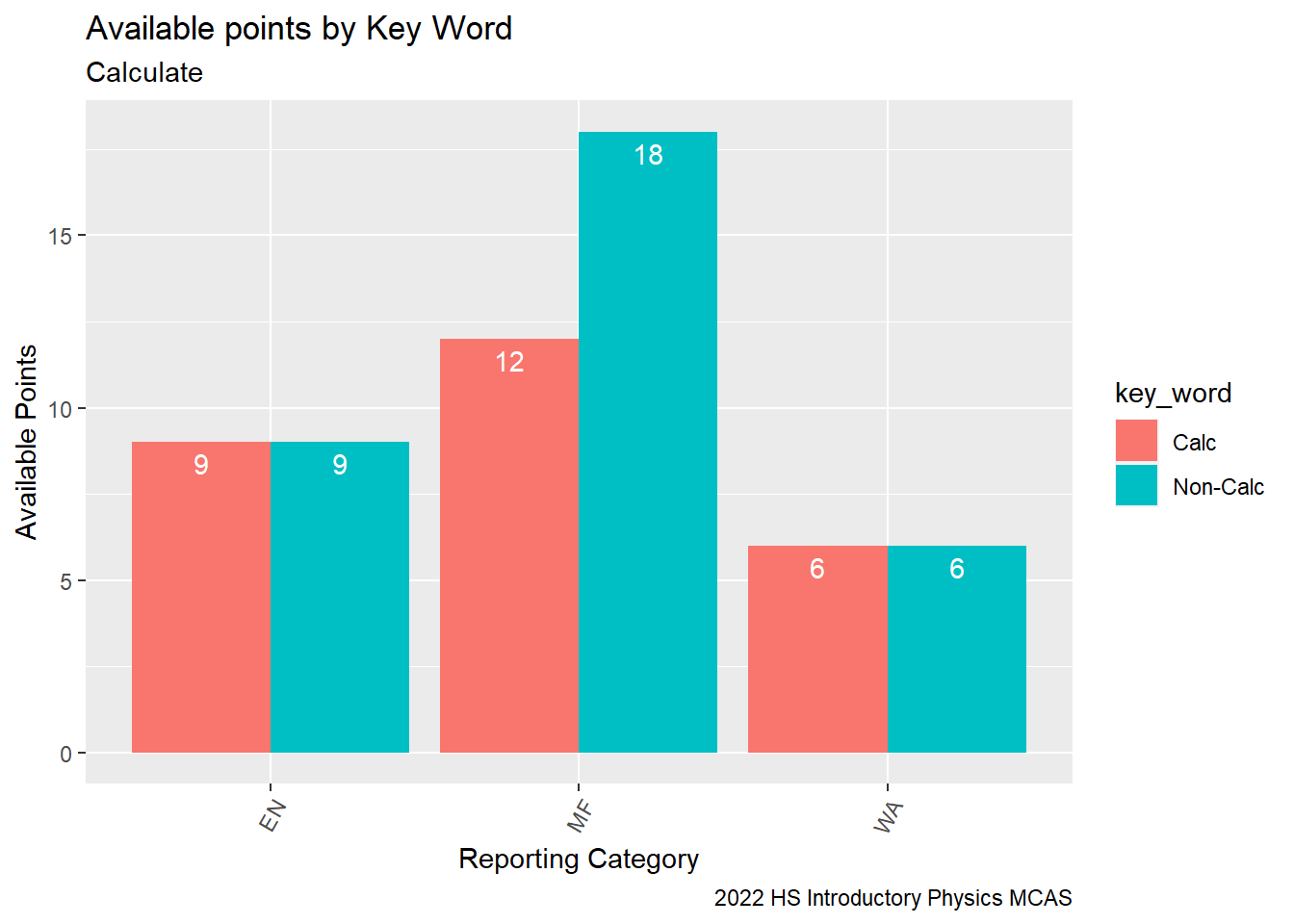

When scanning the item Desc entries, there are several questions containing the word “Calculate” in their description. How much is calculation emphasized on this exam and how did Rising Tide students perform relative to their peers in the state on items containing “calculate” in their description?

G9Science_Calc<-MCAS_G9Sci2022_Item%>%

select(`sitem`, `item Desc`,`item Possible Points`, `Reporting Category`, `State Percent Points`, `RT-State Diff`)%>%

mutate( key_word = case_when(

!str_detect(`item Desc`, "calculate|Calculate") ~ "Non-Calc",

str_detect(`item Desc`, "calculate|Calculate") ~ "Calc"))

#view(G9Science_Calc)

G9Science_CalcG9Science_Calc%>%

group_by(`Reporting Category`, `key_word`)%>%

summarise(avg_RT_State_Diff = mean(`RT-State Diff`, na.rm=TRUE),

med_RT_State_Diff = median(`RT-State Diff`, na.rm =TRUE),

sum_RT_State_Diff = sum(`RT-State Diff`, na.rm=TRUE),

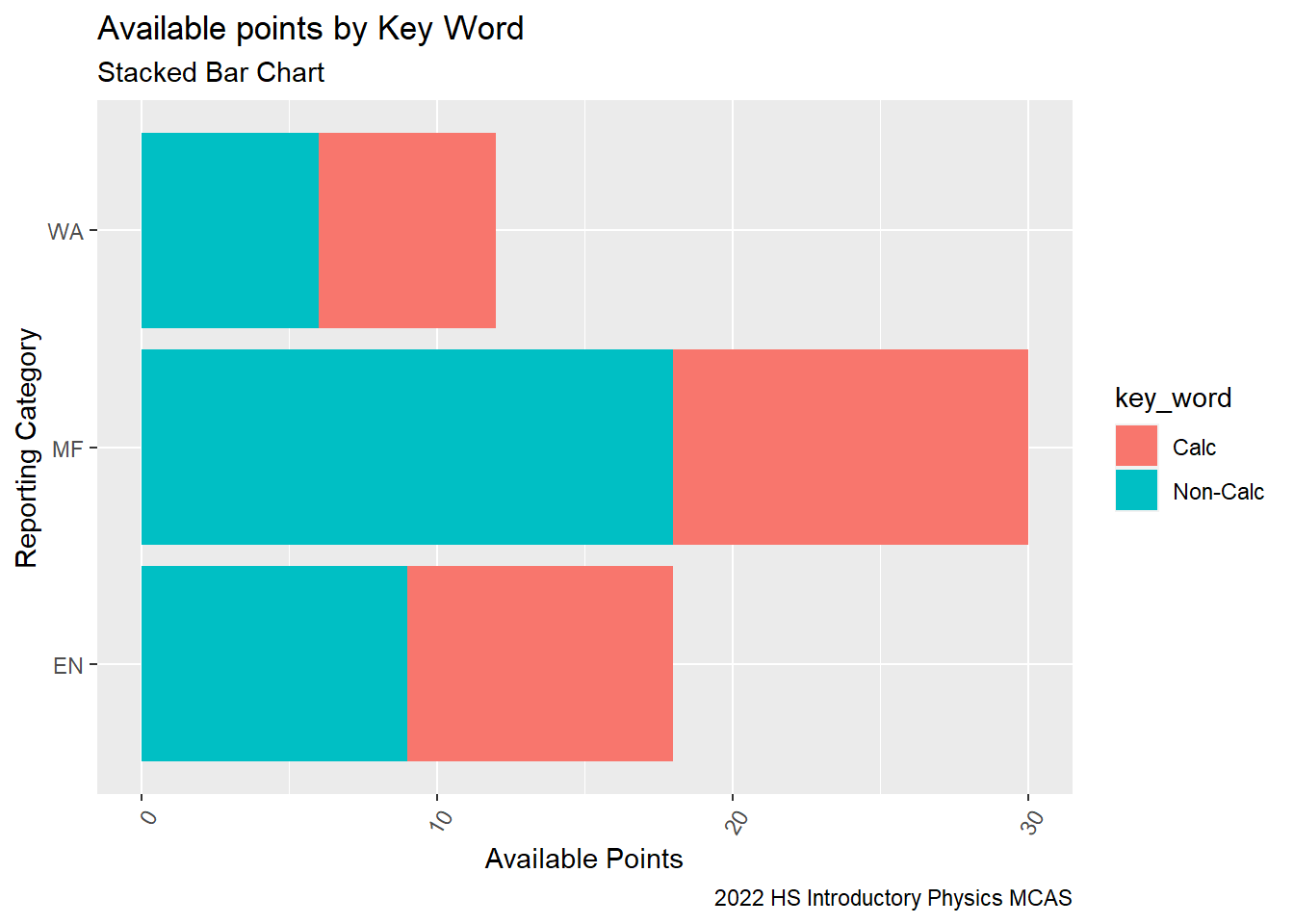

sum_sitem_Possible_Points = sum(`item Possible Points`, na.rm = TRUE))Now, we can see that by the Waves and Energy categories, half of the available points come from questions with calculate and half do not. In the Motion and Forces category, 40% of points are associated with questions that ask students to “calculate”.

G9Science_Calc_PointsAvail<-G9Science_Calc%>%

group_by(`Reporting Category`, `key_word`)%>%

summarise(avg_RT_State_Diff = mean(`RT-State Diff`, na.rm=TRUE),

med_RT_State_Diff = median(`RT-State Diff`, na.rm =TRUE),

sum_RT_State_Diff = sum(`RT-State Diff`, na.rm=TRUE),

sum_item_Possible_Points = sum(`item Possible Points`, na.rm = TRUE))%>%

ggplot(aes(fill=`key_word`, y=sum_item_Possible_Points, x=`Reporting Category`)) + geom_bar(position="dodge", stat="identity")+

labs(subtitle ="Calculate" ,

y = "Available Points",

x= "Reporting Category",

title = "Available points by Key Word",

caption = "2022 HS Introductory Physics MCAS")+

theme(axis.text.x=element_text(angle=60,hjust=1))+

geom_text(aes(label = `sum_item_Possible_Points`), vjust = 1.5, colour = "white", position = position_dodge(.9))

G9Science_Calc_PointsAvail

G9Science_Calc_PointsAvail_Stacked <- G9Science_Calc%>%

ggplot(aes(fill=key_word, y = `item Possible Points`, x=`Reporting Category`)) +

geom_bar(position="stack", stat="identity")+

labs(subtitle = "Stacked Bar Chart",

y = "Available Points",

x= "Reporting Category",

title = "Available points by Key Word",

caption = "2022 HS Introductory Physics MCAS")+

theme(axis.text.x=element_text(angle=60,hjust=1))+

coord_flip()

G9Science_Calc_PointsAvail_Stacked

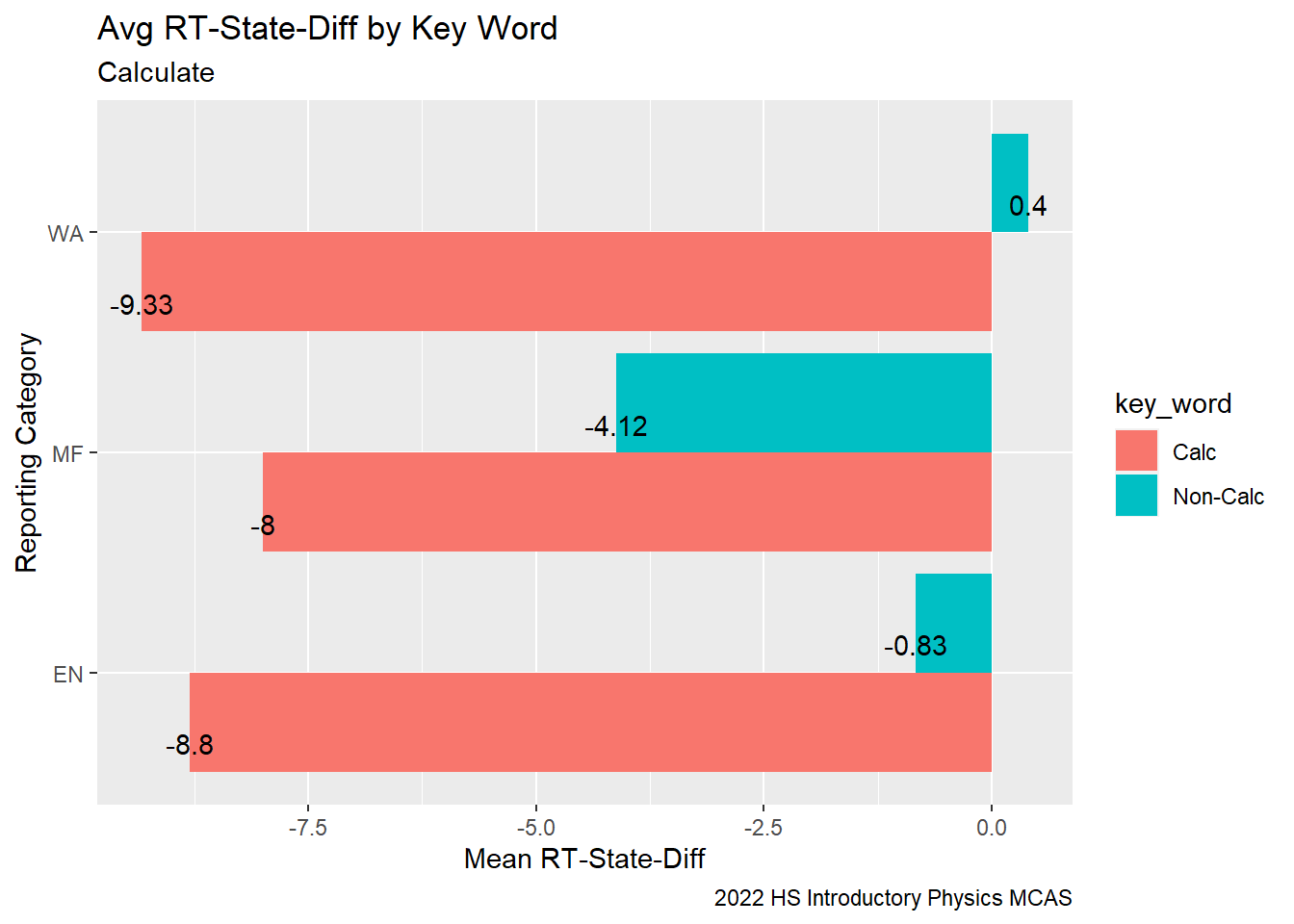

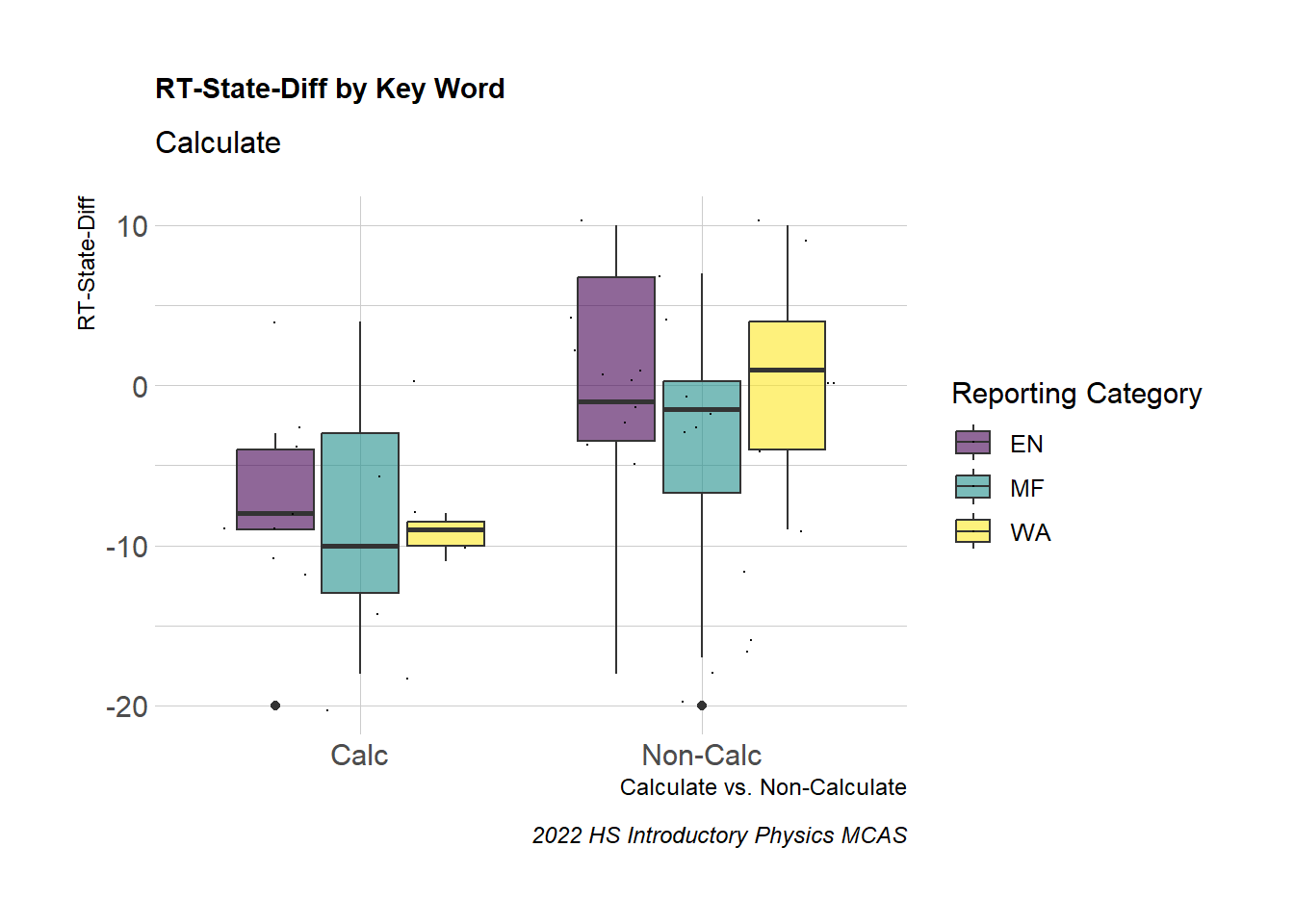

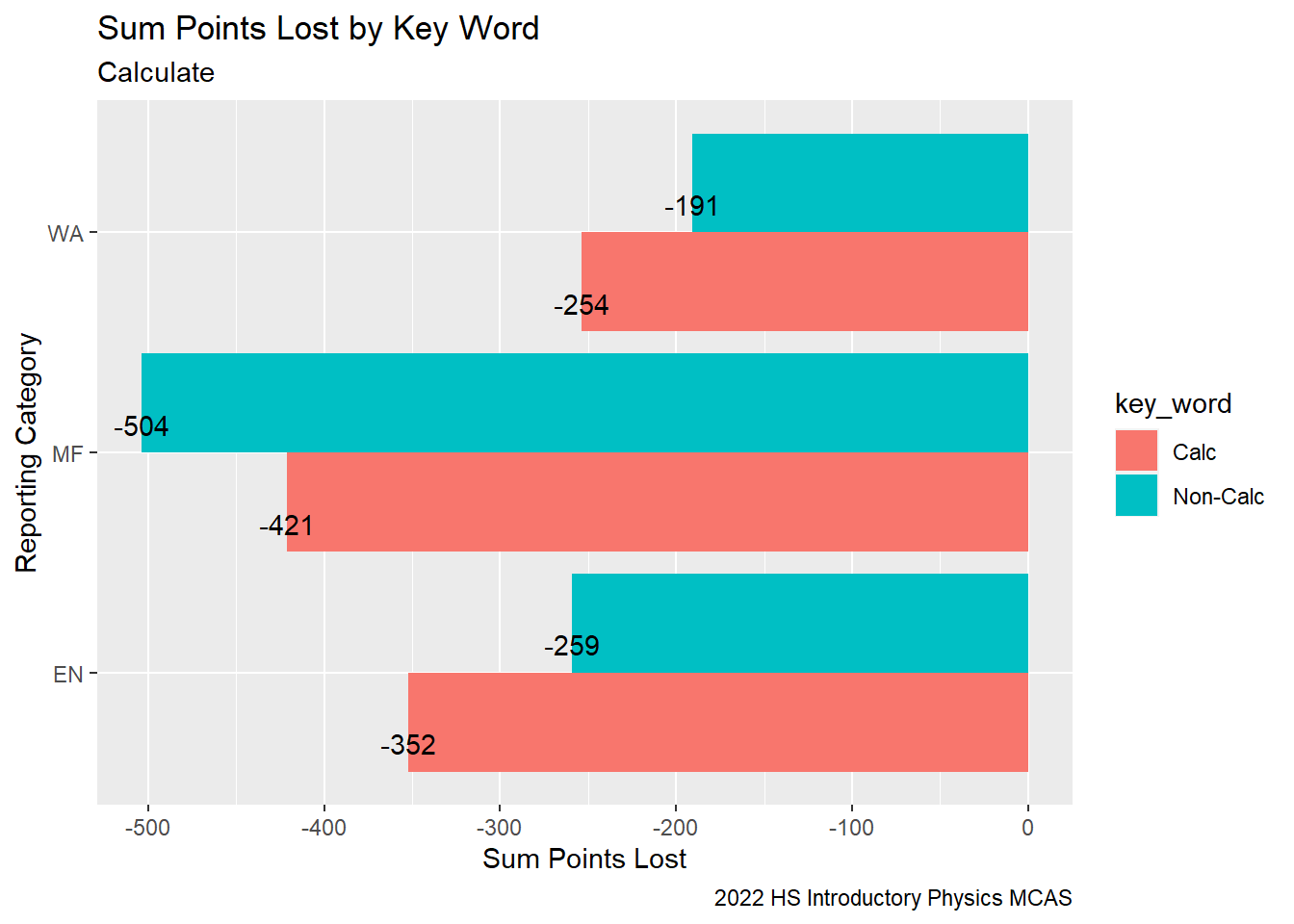

When we compare the mean RT-State Diff for items containing the word “calculate” in their description vs. items that do not, we can see that across all of the Reporting Categories, Rising Tide students performed considerably worse relative to their peers on questions that asked them to “calculate”.

G9Science_Calc_MeanDiffBar<-G9Science_Calc%>%

group_by(`Reporting Category`, `key_word`)%>%

summarise(mean_RT_State_Diff = round(mean(`RT-State Diff`, na.rm=TRUE),2),

med_RT_State_Diff = median(`RT-State Diff`, na.rm =TRUE),

sum_RT_State_Diff = sum(`RT-State Diff`, na.rm=TRUE))%>%

ggplot(aes(fill=`key_word`, y=mean_RT_State_Diff, x=`Reporting Category`)) + geom_bar(position="dodge", stat="identity") + coord_flip()+

labs(subtitle ="Calculate" ,

y = "Mean RT-State-Diff",

x= "Reporting Category",

title = "Avg RT-State-Diff by Key Word",

caption = "2022 HS Introductory Physics MCAS")+

geom_text(aes(label = `mean_RT_State_Diff`), vjust = 1.5, colour = "black", position = position_dodge(.9))

G9Science_Calc_MeanDiffBar

Here we can see the distribution of RT-State Diff by item, Reporting Category and disparity in the RT-State Diff when we consider the term “Calculate”.

G9Science_Calc_Box <-G9Science_Calc%>%

group_by(`key_word`, `Reporting Category`)%>%

ggplot( aes(x=`key_word`, y=`RT-State Diff`, fill=`Reporting Category`)) +

geom_boxplot() +

scale_fill_viridis(discrete = TRUE, alpha=0.6) +

geom_jitter(color="black", size=0.1, alpha=0.9) +

theme_ipsum() +

theme(

#legend.position="none",

plot.title = element_text(size=11)

) + labs(subtitle ="Calculate" ,

y = "RT-State-Diff",

x= "Calculate vs. Non-Calculate",

title = "RT-State-Diff by Key Word",

caption = "2022 HS Introductory Physics MCAS")

# ggtitle("RT-State-Diff by Key Word") +

# xlab("")

G9Science_Calc_Box

Did RT students perform worse relative to their peers in the state on more “challenging” calculation items? If we take the state-wide performance on an item (State Percent Points) as a measure of its difficulty, the gap between Rising Tide students and their peers in the state (RT-State Diff) on items containing the word “calculate” does not seem to increase with difficulty.

view(G9Science_Calc)

G9Science_Calc_Dot<- G9Science_Calc%>%

select(`State Percent Points`, `RT-State Diff`, `key_word`)%>%

filter(key_word == "Calc")%>%

ggplot( aes(x=`State Percent Points`, y=`RT-State Diff`)) +

geom_point(size = 1, color="#69b3a2")+

geom_smooth(method="lm",color="grey", size =.5 )+

labs(title = "RT State Diff vs. State Percent Points", y = "RT State Diff",

x = "State Percent Points")#+ face(vars())

G9Science_Calc_Dot

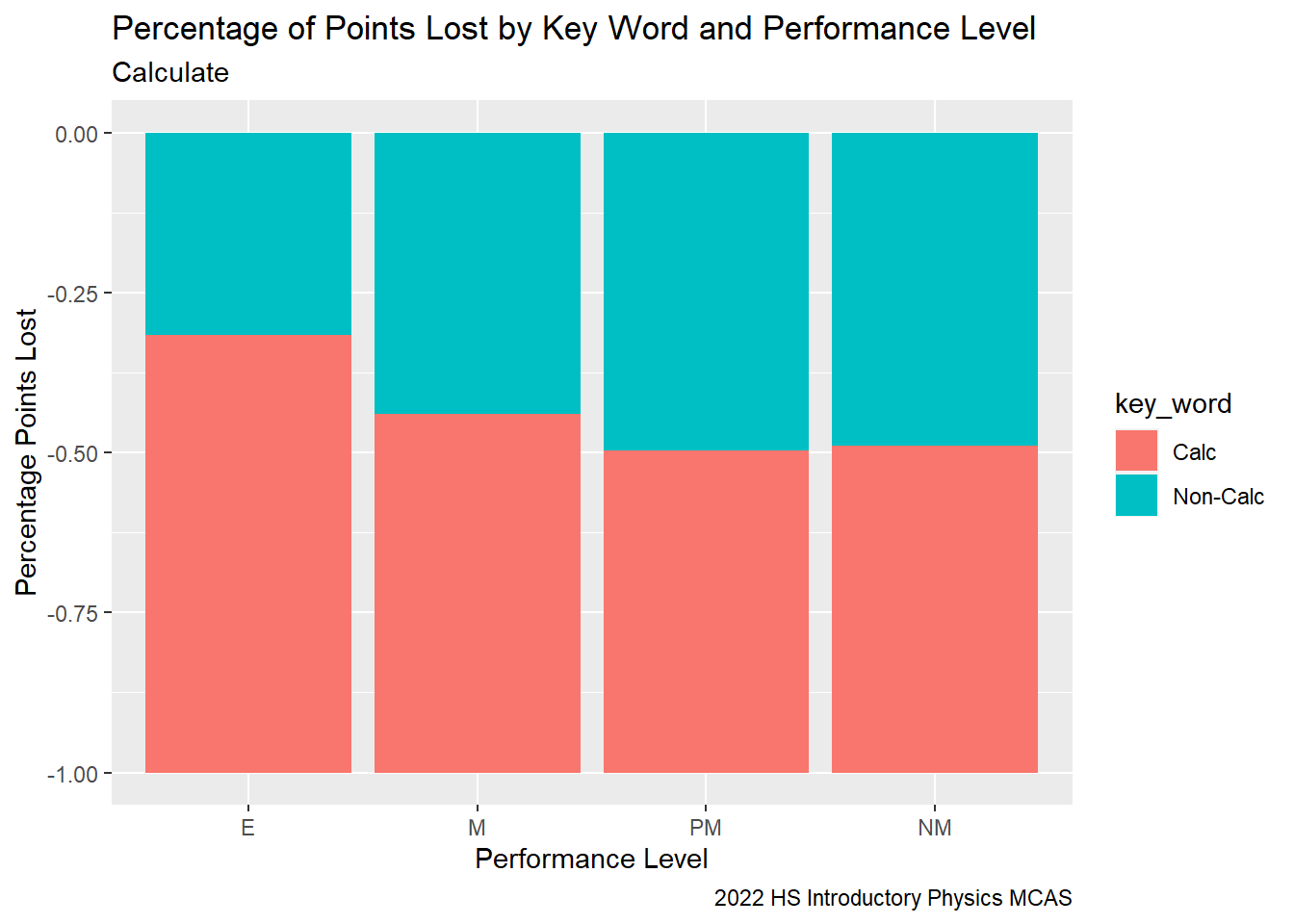

Is the “calculation gap” consistent across performance levels?

Here we can see that students with a higher performance level lost a higher proportion of their points on questions involving “calculate”; i.e., the higher a student’s performance level, the higher the percentage of their lost points that came from items asking them to “calculate”. This suggests that, to raise student performance in the general classroom, students should spend a higher proportion of time on calculation-based activities.

# G9 Points Lost

G9Sci_StudentCalcPerflev<-G9Science_StudentItem%>%

select(gender, sitem, sitem_score, `item Desc`, `item Possible Points`, `State Percent Points`, IEP, `RT-State Diff`, `Reporting Category`, `sperflev`)%>%

mutate( key_word = case_when(

!str_detect(`item Desc`, "calculate|Calculate") ~ "Non-Calc",

str_detect(`item Desc`, "calculate|Calculate") ~ "Calc"))%>%

group_by(`sperflev`, `key_word`)%>%

summarise(total_points_lost = sum(`item Possible Points` - `sitem_score`, na.rm = TRUE),

mean_RT_State_Diff = round(mean(`RT-State Diff`, na.rm=TRUE),2))

G9Sci_StudentCalcPerflev

#view(G9Science_StudentItem)

G9Sci_StudentCalc<-G9Science_StudentItem%>%

select(gender, sitem, sitem_score, `item Desc`, `item Possible Points`, `State Percent Points`, IEP, `RT-State Diff`, `Reporting Category`, `sperflev`)%>%

mutate( key_word = case_when(

!str_detect(`item Desc`, "calculate|Calculate") ~ "Non-Calc",

str_detect(`item Desc`, "calculate|Calculate") ~ "Calc"))%>%

group_by(`Reporting Category`, `key_word`)%>%

summarise(total_points_lost = sum(`item Possible Points` - `sitem_score`, na.rm = TRUE))%>%

ggplot(aes(fill=`key_word`, y=total_points_lost, x=`Reporting Category`)) + geom_bar(position="dodge", stat="identity") + coord_flip()+

labs(subtitle ="Calculate" ,

y = "Sum Points Lost",

x= "Reporting Category",

title = "Sum Points Lost by Key Word",

caption = "2022 HS Introductory Physics MCAS")+

geom_text(aes(label = `total_points_lost`), vjust = 1.5, colour = "black", position = position_dodge(.9))

#+

#geom_text(aes(label = `total_points_lost`), vjust = 1.5, colour = "white", position = position_dodge(.9))

G9Sci_StudentCalc

G9Sci_StudentCalcPerflev%>%

ggplot(aes(fill=`key_word`, y=total_points_lost, x=`sperflev`)) + geom_bar(position="fill", stat="identity") +

labs(subtitle ="Calculate" ,

y = "Percentage Points Lost",

x= "Performance Level",

title = "Percentage of Points Lost by Key Word and Performance Level",

caption = "2022 HS Introductory Physics MCAS")

# G9Sci_StudentCalcPerflevBox<-G9Sci_StudentCalcPerflev%>%

# ggplot( aes(x=`key_word`, y=`RT-State Diff`, fill=`sperflev`)) +

# geom_boxplot() +

# scale_fill_viridis(discrete = TRUE, alpha=0.6) +

# geom_jitter(color="black", size=0.1, alpha=0.9) +

# theme_ipsum() +

# theme(

# #legend.position="none",

# plot.title = element_text(size=11)

# ) + labs(subtitle ="Calculate" ,

# y = "RT-State-Diff",

# x= "Calculate vs. Non-Calculate",

# title = "RT-State-Diff by Key Word",

# caption = "2022 HS Introductory Physics MCAS") +

#

#

# G9Sci_StudentCalcPerflevBox

We can see from our CU306 reports that our students with disabilities performed better relative to their peers in the state (RT-State Diff) across all Reporting Categories, while our non-disabled students performed worse relative to their peers in the state across all Reporting Categories.

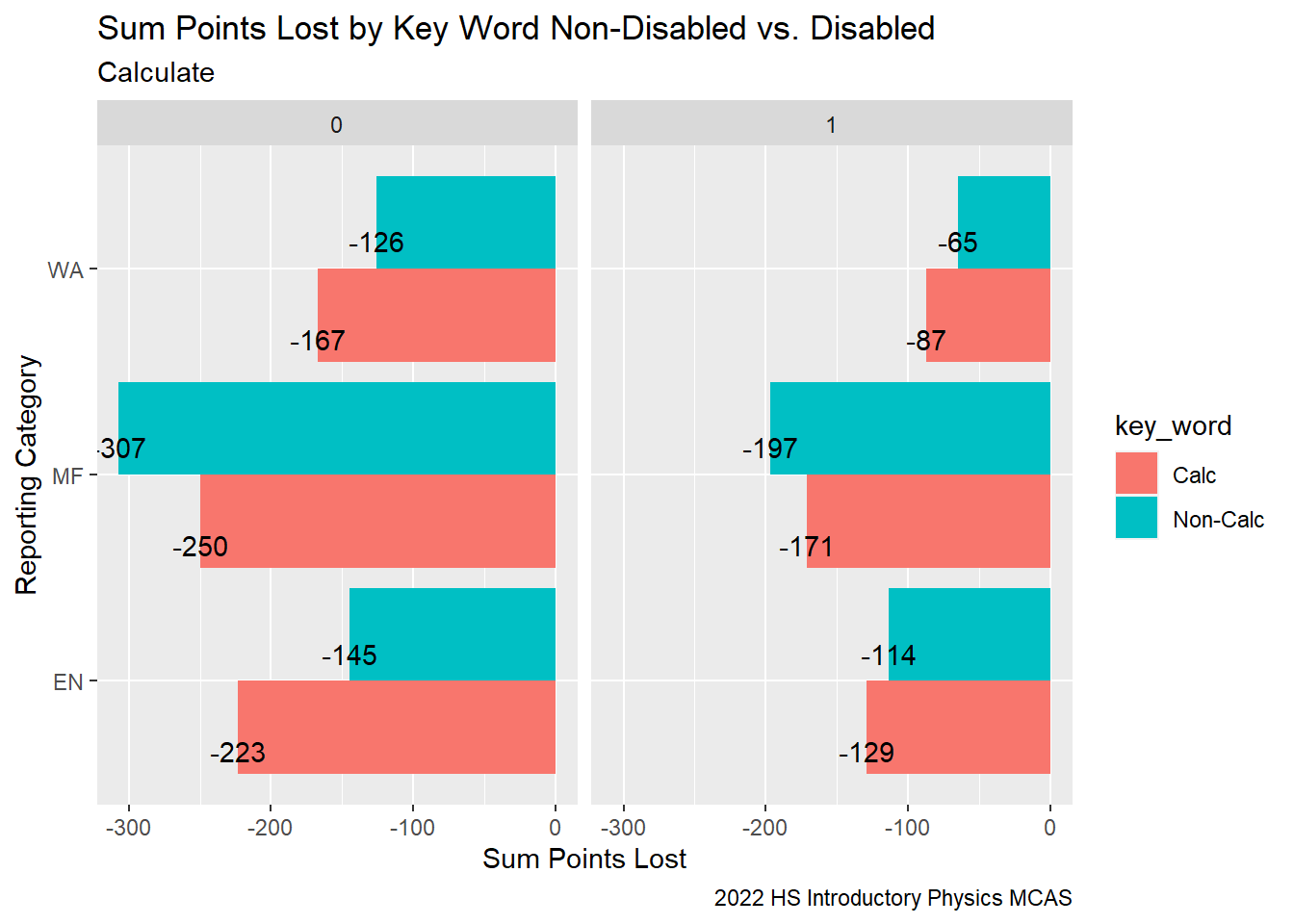

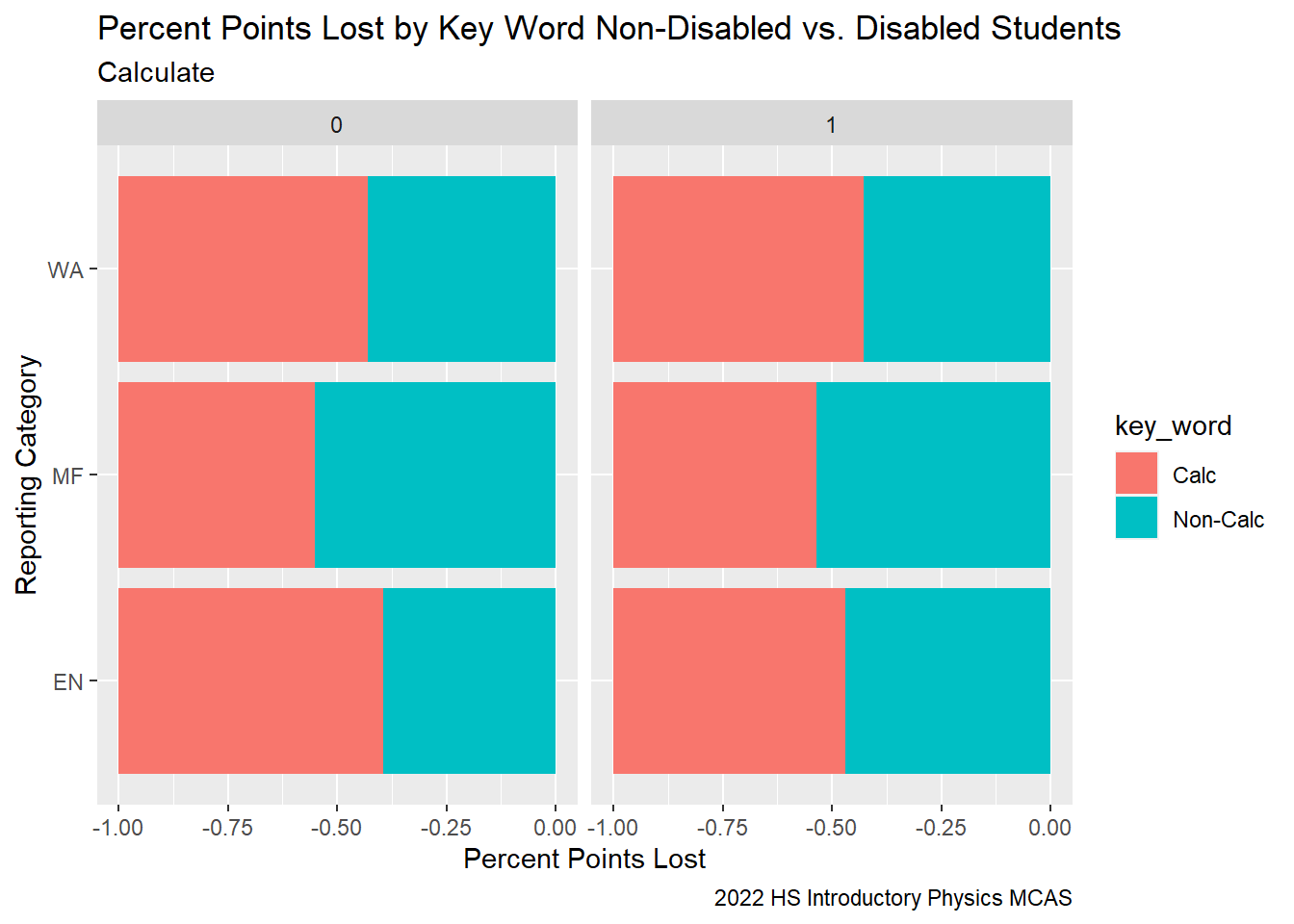

When we examine the points lost by reporting category and disability status, there does not seem to be a difference in performance between disabled and non-disabled students.

G9Sci_StudentCalcDis<-G9Science_StudentItem%>%

select(gender, sitem, sitem_score, `item Desc`, `item Possible Points`, `State Percent Points`, IEP, `RT-State Diff`, `Reporting Category`, `sperflev`)%>%

mutate( key_word = case_when(

!str_detect(`item Desc`, "calculate|Calculate") ~ "Non-Calc",

str_detect(`item Desc`, "calculate|Calculate") ~ "Calc"))%>%

group_by(`Reporting Category`, `key_word`, `IEP`)%>%

summarise(total_points_lost = sum(`item Possible Points` - `sitem_score`, na.rm = TRUE))%>%

ggplot(aes(fill=`key_word`, y=total_points_lost, x=`Reporting Category`)) + geom_bar(position="dodge", stat="identity")+

facet_wrap(vars(IEP))+ coord_flip()+

labs(subtitle ="Calculate" ,

y = "Sum Points Lost",

x= "Reporting Category",

title = "Sum Points Lost by Key Word Non-Disabled vs. Disabled",

caption = "2022 HS Introductory Physics MCAS")+

geom_text(aes(label = `total_points_lost`), vjust = 1.5, colour = "black", position = position_dodge(.95))

G9Sci_StudentCalcDis

G9Sci_StudentCalcDis<-G9Science_StudentItem%>%

select(gender, sitem, sitem_score, `item Desc`, `item Possible Points`, `State Percent Points`, IEP, `RT-State Diff`, `Reporting Category`, `sperflev`)%>%

mutate( key_word = case_when(

!str_detect(`item Desc`, "calculate|Calculate") ~ "Non-Calc",

str_detect(`item Desc`, "calculate|Calculate") ~ "Calc"))%>%

group_by(`Reporting Category`, `key_word`, `IEP`)%>%

summarise(sum_points_lost = sum(`item Possible Points` - `sitem_score`, na.rm = TRUE))%>%

ggplot(aes(fill=`key_word`, y=sum_points_lost, x=`Reporting Category`)) + geom_bar(position="fill", stat="identity")+

facet_wrap(vars(IEP))+ coord_flip()+

labs(subtitle ="Calculate" ,

y = "Percent Points Lost",

x= "Reporting Category",

title = "Percent Points Lost by Key Word Non-Disabled vs. Disabled Students",

caption = "2022 HS Introductory Physics MCAS")

G9Sci_StudentCalcDis

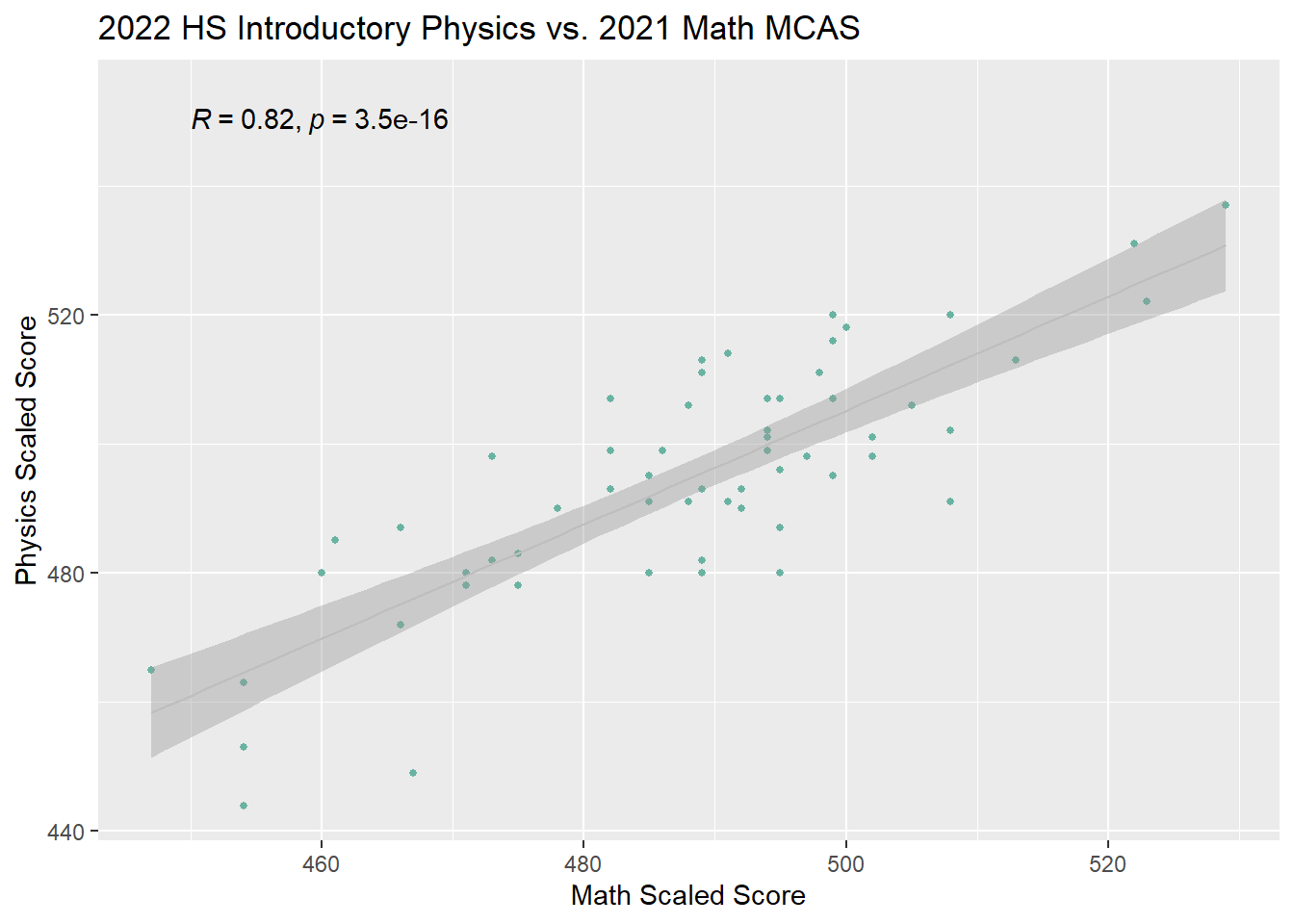

A student’s performance on their 9th Grade Introductory Physics MCAS is strongly associated with their performance on their 8th Grade Math MCAS exam. This suggests that we should use prior MCAS and current STAR Math testing data to identify students in need of extra support.

G9Science_Math<-MCAS_2022%>%

select(sscaleds, mscaleds2021,sscaleds_prior, grade, sattempt)%>%

filter((grade == 9) & sattempt != "N")%>%

ggplot(aes(x=`mscaleds2021`, y =`sscaleds`))+

geom_point(size = 1, color="#69b3a2")+

geom_smooth(method="lm",color="grey", size =.5 )+

labs(title = "2022 HS Introductory Physics vs. 2021 Math MCAS", y = "Physics Scaled Score",

x = "Math Scaled Score") +

stat_cor(method = "pearson", label.x = 450, label.y = 550)

G9Science_Math

Rising Tide students performed slightly worse relative to the state in all content reporting areas and lost the highest percentage of their points on items in Motion and Forces, which is also the category with the most items on the exam.

Rising Tide students performed considerably worse relative to students in the state on items including the key word “calculate” in their item description. This suggests that we should dedicate more classroom instructional time to problem solving with calculation. Notably, the higher a student’s performance level, the higher the percentage of their lost points that came from calculation items. To increase the proportion of students exceeding expectations, we need to improve our students’ performance on calculation-based items; evidence-based math interventions include small-group, differentiated problem sets.

Students with disabilities performed better relative to their peers in the state while our non-disabled students performed worse. This further supports the need for differentiated small group problem sets in the general classroom setting.

To improve this report, I need to

Recode IEP so that the values 0 and 1 are labeled Disabled and Non-Disabled.

Relabel Reporting Category so that the full category names appear in the graphs.

Decide when to use totals and when to use averages. To improve performance on a test, we are concerned with total points lost; to identify curricular weaknesses, we are also interested in relative performance to the state by content area.

Here I would complete a key for all of the variables that are included in my table and link relevant decoding documents from DESE.

esgp, msgp, ssgp, continuous: The student’s growth percentile by subject area (e: English, m: Math, s: Science).

eperf2, mperf2, ordinal: The student’s performance level in ELA and Math.

| Value | Key |
|---|---|
| Exceeds Expectations | E |
| Meets Expectations | M |
| Partially Meets Expectations | PM |
| Does Not Meet Expectations | NM |

gender, nominal: The reported gender identity of the student.

| Value | Key |
|---|---|
| Female | F |
| Male | M |
| Non binary | N |

| Variable | Stats / Values | Freqs (% of Valid) | Missing |
|---|---|---|---|
| adminyear [numeric] | 1 distinct value | | 0 (0.0%) |
| testschoolcode [character] | 1. 4830305 | | 0 (0.0%) |
| grade [factor] | | | 0 (0.0%) |
| gradesims [numeric] | | | 0 (0.0%) |
| dob [Date] | | 427 distinct values | 0 (0.0%) |
| gender [character] | | | 0 (0.0%) |
| race [character] | | | 0 (0.0%) |
| yrsinmass [character] | | | 0 (0.0%) |
| yrsinmass_num [numeric] | | 12 distinct values | 0 (0.0%) |
| yrsinsch [numeric] | | | 0 (0.0%) |
| highneeds [character] | | | 0 (0.0%) |
| lowincome [character] | | | 0 (0.0%) |
| title1 [character] | | | 0 (0.0%) |
| ever_EL [character] | 1. 1 | | 475 (96.0%) |
| EL [character] | | | 0 (0.0%) |
| EL_FormerEL [character] | | | 0 (0.0%) |
| FormerEL [character] | | | 0 (0.0%) |
| ELfirstyear [character] | | | 495 (100.0%) |
| IEP [character] | | | 0 (0.0%) |
| plan504 [character] | | | 0 (0.0%) |
| firstlanguage [character] | | | 0 (0.0%) |
| natureofdis [numeric] | | | 380 (76.8%) |
| levelofneed [factor] | | | 380 (76.8%) |
| spedplacement [character] | | | 0 (0.0%) |
| town [character] | | | 0 (0.0%) |
| county [character] | | | 0 (0.0%) |
| octenr [numeric] | | | 0 (0.0%) |
| conenr_sch [numeric] | 1 distinct value | | 440 (88.9%) |
| conenr_sta [numeric] | 1 distinct value | | 434 (87.7%) |
| access_part [numeric] | 1 distinct value | | 488 (98.6%) |
| ealt [logical] | | | 495 (100.0%) |
| ecomplexity [logical] | | | 495 (100.0%) |
| emode [character] | 1. O | | 73 (14.7%) |
| eteststat [character] | | | 69 (13.9%) |
| wptopdev [logical] | | | 495 (100.0%) |

| wpcompconv [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem1 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem2 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem3 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem4 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem5 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem6 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem7 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem8 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem9 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem10 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem11 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem12 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem13 [numeric] |

|

|

75 (15.2%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem14 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem15 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem16 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem17 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem18 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem19 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem20 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem21 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem22 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem23 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem24 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem25 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem26 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem27 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem28 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem29 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem30 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem31 [numeric] |

|

|

135 (27.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem32 [numeric] |

|

|

403 (81.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem33 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem34 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem35 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem36 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem37 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem38 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem39 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eitem40 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| erawsc [numeric] |

|

39 distinct values | 73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| emcpts [numeric] |

|

24 distinct values | 73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eorpts [numeric] |

|

28 distinct values | 73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eperpospts [numeric] |

|

63 distinct values | 73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| escaleds [numeric] |

|

74 distinct values | 74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eperflev [ordered, factor] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eperf2 [ordered, factor] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| enumin [numeric] | 1 distinct value |

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eassess [numeric] |

|

|

70 (14.1%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| esgp [numeric] |

|

96 distinct values | 109 (22.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| idea1 [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| conv1 [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| idea2 [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| conv2 [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| idea3 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| conv3 [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eattempt [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| malt [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mcomplexity [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mmode [character] | 1. O |

|

71 (14.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mteststat [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem1 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem2 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem3 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem4 [numeric] |

|

|

75 (15.2%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem5 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem6 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem7 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem8 [numeric] |

|

|

76 (15.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem9 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem10 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem11 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem12 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem13 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem14 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem15 [numeric] |

|

|

76 (15.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem16 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem17 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem18 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem19 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem20 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem21 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem22 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem23 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem24 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem25 [numeric] |

|

|

75 (15.2%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem26 [numeric] |

|

|

72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem27 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem28 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem29 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem30 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem31 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem32 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem33 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem34 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem35 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem36 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem37 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem38 [numeric] |

|

|

74 (14.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem39 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem40 [numeric] |

|

|

73 (14.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem41 [numeric] |

|

|

432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mitem42 [numeric] |

|

|

432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mrawsc [numeric] |

|

51 distinct values | 72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mmcpts [numeric] |

|

22 distinct values | 72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| morpts [numeric] |

|

38 distinct values | 72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mperpospts [numeric] |

|

67 distinct values | 72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mscaleds [numeric] |

|

80 distinct values | 72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mperflev [ordered, factor] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mperf2 [ordered, factor] |

|

|

72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mnumin [numeric] | 1 distinct value |

|

72 (14.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| massess [numeric] |

|

|

70 (14.1%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| msgp [numeric] |

|

97 distinct values | 107 (21.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mattempt [character] |

|

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| salt [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| scomplexity [logical] |

|

495 (100.0%) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| smode [character] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| steststat [character] |

|

|

183 (37.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| ssubject [character] |

|

|

363 (73.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem1 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem2 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem3 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem4 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem5 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem6 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem7 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem8 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem9 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem10 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem11 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem12 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem13 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem14 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem15 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem16 [numeric] |

|

|

242 (48.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem17 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem18 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem19 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem20 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem21 [numeric] |

|

|

243 (49.1%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem22 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem23 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem24 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem25 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem26 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem27 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem28 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem29 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem30 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem31 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem32 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem33 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem34 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem35 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem36 [numeric] |

|

|

240 (48.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem37 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem38 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem39 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem40 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem41 [numeric] |

|

|

239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem42 [numeric] |

|

|

418 (84.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem43 [numeric] |

|

|

487 (98.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem44 [numeric] |

|

|

488 (98.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sitem45 [numeric] |

|

|

488 (98.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| srawsc [numeric] |

|

43 distinct values | 239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| smcpts [numeric] |

|

26 distinct values | 239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sorpts [numeric] |

|

33 distinct values | 239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sperpospts [numeric] |

|

59 distinct values | 239 (48.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sscaleds [numeric] |

|

91 distinct values | 185 (37.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sperflev [ordered, factor] |

|

|

183 (37.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sperf2 [ordered, factor] |

|

|

254 (51.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| snumin [numeric] | 1 distinct value |

|

254 (51.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sassess [numeric] |

|

|

252 (50.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sattempt [character] |

|

|

183 (37.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| ela_cd [numeric] |

|

|

363 (73.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| math_cd [numeric] |

|

|

363 (73.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sci_cd [numeric] |

|

|

363 (73.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_e [numeric] | 1 distinct value |

|

419 (84.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_m [numeric] | 1 distinct value |

|

417 (84.2%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_s [numeric] | 1 distinct value |

|

448 (90.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_readaloud [character] |

|

|

492 (99.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_scribe [character] | 1. H |

|

493 (99.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| accom_calculator [numeric] | 1 distinct value |

|

493 (99.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| grade2018 [numeric] |

|

|

224 (45.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| grade2019 [numeric] |

|

|

134 (27.1%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| grade2021 [numeric] |

|

|

94 (19.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| escaleds2018 [numeric] |

|

61 distinct values | 229 (46.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| escaleds2019 [numeric] |

|

71 distinct values | 138 (27.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| escaleds2021 [numeric] |

|

83 distinct values | 96 (19.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mscaleds2018 [numeric] |

|

71 distinct values | 229 (46.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mscaleds2019 [numeric] |

|

77 distinct values | 138 (27.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mscaleds2021 [numeric] |

|

83 distinct values | 95 (19.2%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| esgp2018 [numeric] |

|

81 distinct values | 316 (63.8%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| esgp2019 [numeric] |

|

91 distinct values | 231 (46.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| esgp2021 [numeric] |

|

88 distinct values | 201 (40.6%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| msgp2018 [numeric] |

|

85 distinct values | 316 (63.8%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| msgp2019 [numeric] |

|

92 distinct values | 231 (46.7%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| msgp2021 [numeric] |

|

82 distinct values | 200 (40.4%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| summarize [numeric] |

|

|

0 (0.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| amend [character] | 1. M |

|

494 (99.8%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| datachanged [numeric] | 1 distinct value |

|

494 (99.8%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eScaleForm [numeric] | 1 distinct value |

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mScaleForm [numeric] | 1 distinct value |

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sScaleForm [numeric] | 1 distinct value |

|

307 (62.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| eFormType [character] | 1. C |

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mFormType [character] | 1. C |

|

69 (13.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sFormType [character] |

|

|

183 (37.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| days_in_person [numeric] |

|

53 distinct values | 0 (0.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| member [numeric] |

|

22 distinct values | 0 (0.0%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| ssubject_prior [numeric] |

|

|

435 (87.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sscaleds_prior [numeric] |

|

24 distinct values | 435 (87.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| escaleds.legacy.equivalent [numeric] |

|

14 distinct values | 433 (87.5%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mscaleds.legacy.equivalent [numeric] |

|

24 distinct values | 432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sscaleds.legacy.equivalent [numeric] |

|

26 distinct values | 425 (85.9%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sscaleds.highest.on.legacy.scale [numeric] |

|

30 distinct values | 363 (73.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| scpi [numeric] |

|

|

432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sscaleds.highest.on.nextGen.scale [numeric] |

|

24 distinct values | 432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| sperf2.highest.on.nextGen.scale [character] |

|

|

432 (87.3%) | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| nature0fdis [character] |

|

|

380 (76.8%) |

Generated by summarytools 1.0.1 (R version 4.2.1)

2022-12-21

| value | Key |
|---|---|
| Female | F |
| Male | M |
| Non binary | N |

---
title: "Homework 3"
author: "Theresa Szczepanski"
description: "MCAS G9 Science Analysis"
date: "11/11/2022"
format:
  html:
    df-print: paged
    toc: true
    code-fold: true
    code-copy: true
    code-tools: true
categories:
  - Theresa_Szczepanski
  - hw3
  - MCAS_2022
  - MCAS_G9Sci2022_Item
always_allow_html: true
---

```{r}
#| label: setup
#| warning: false
#| message: false

library(tidyverse)
library(ggplot2)
library(lubridate)
library(readxl)
library(hrbrthemes)
library(viridis)
library(ggpubr)

knitr::opts_chunk$set(echo = TRUE, warning=FALSE, message=FALSE)
```

## Narrative Summary

The `MCAS_2022` data frame contains performance results from 495 students from [Rising Tide Charter Public School](https://risingtide.org/) on the Spring 2022 [Massachusetts Comprehensive Assessment System (MCAS)](https://www.doe.mass.edu/mcas/default.html) tests.

For each student, there are values reported for 256 different variables, which fall into the following broad categories:

- *Demographic characteristics* of the students themselves (e.g., race, gender, date of birth, town, grade level, years in school, years in Massachusetts, and low income, title1, IEP, 504, and EL status).

- *Key assessment features*, including subject, test format, and accommodations provided.

- *Performance metrics*: This includes a student's score on individual item strands, e.g., `mitem1`-`mitem42`.

See the `MCAS_2022` data frame summary and __codebook__ in the __appendix__ for further details.

The second data set, `MCAS_G9Sci2022_Item`, is 42 by 9: it has 9 variables with information pertaining to the 42 questions on the 2022 [HS Introductory Physics Item Report](https://profiles.doe.mass.edu/mcas/mcasitems2.aspx?grade=HS&subjectcode=PHY&linkid=23&orgcode=04830000&fycode=2022&orgtypecode=5&). The variables can be broken down into 2 categories:

Details about the content of a given test item:

This includes the content `Reporting Category` (MF (motion and forces), WA (waves), and EN (energy)), the `Standard` from the [2016 STE Massachusetts Curriculum Framework](https://www.doe.mass.edu/frameworks/scitech/2016-04.pdf), the `Item Description` providing the details of what specifically was asked of students, and the points available for a given question, `sitem Possible Points`.

Summary Performance Metrics:

- For each item, the state reports the percentage of points earned by students at Rising Tide, `RT Percent Points`, the percentage of available points earned by students in the state, `State Percent Points`, and the difference between the percentage of points earned by Rising Tide students and the percentage of points earned by students in the state, `RT-State Diff`.

- Lastly, `CU306 Disability` is a 3 x 5 data frame consisting of summary performance data by `sItem Reporting Category` for students with disabilities, most importantly `RT Percent Points` and `State Percent Points`.
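As a minimal sketch of how the three summary metrics relate, the chunk below recomputes `RT-State Diff` from the two percentage columns. The values are made up for illustration, and the sign convention (Rising Tide minus state) is an assumption based on the column name; the state's published figures may also be rounded differently.

```r
library(tibble)
library(dplyr)

# Hypothetical values for illustration only; not taken from the item report
item_example <- tibble(
  sitem = c("sitem1", "sitem2", "sitem3"),
  `RT Percent Points` = c(80, 55, 62),
  `State Percent Points` = c(70, 60, 62)
)

# Assumed convention: positive values mean Rising Tide outperformed the state
item_example <- item_example %>%
  mutate(`RT-State Diff` = `RT Percent Points` - `State Percent Points`)

item_example
```

Under this convention, a negative `RT-State Diff` flags an item on which Rising Tide students earned a smaller share of the available points than students statewide.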

When considering our student performance data, we hope to address the following broad questions:

- What adjustments (if any) should be made at the Tier 1 level, i.e., curricular adjustments for all students?

- What would be the most beneficial areas of focus for a targeted intervention course for students struggling to meet or exceed performance expectations?

- Are there notable differences in student performance for students with and without disabilities?

## Function Library

To read in, tidy, and join our data frames for each content area, we will use the following function. As written, it refers to the item column as `sitem` throughout, so it still needs to be made more adaptable to include Math and ELA.

```{r}
# Item analysis read-in
# subject must be: "math", "ela", or "science"
read_item <- function(sheet_name, subject) {
  # Prefix for the item identifier, e.g. "sitem" for science
  subject_item <- case_when(
    subject == "science" ~ "sitem",
    subject == "math" ~ "mitem",
    subject == "ela" ~ "eitem"
  )
  read_excel("_data/2022MCASDepartmentalAnalysis.xlsx", sheet = sheet_name,
             skip = 1,
             col_names = c(subject_item, "Type", "Reporting Category", "Standard",
                           "item Desc", "delete", "item Possible Points",
                           "RT Percent Points", "State Percent Points",
                           "RT-State Diff")) %>%
    select(!contains("delete")) %>%
    # NOTE: the steps below refer to the first column as `sitem`, so they
    # only work when subject == "science"
    # Drop rows whose item column contains "Legend" or "legend"
    filter(!str_detect(sitem, "Legend|legend")) %>%
    mutate(sitem = as.character(sitem)) %>%
    # Split the first column into the item number and a leftover piece,
    # then drop the leftover piece
    separate(c(1), c("sitem", "delete")) %>%
    select(!contains("delete")) %>%
    # Prepend the prefix to the item number, e.g. "sitem1"
    mutate(sitem = str_c(subject_item, sitem))
}

# Test<-read_item("SG9Physics", "science")
# view(Test)
# Test2<-read_item("SG8", "science")
# view(Test2)
Test3 <- read_item("SG5", "science")
view(Test3)

# item_sheets<-excel_sheets("_data/2022MCASDepartmentalAnalysis.xlsx")
# item_sheets
# science_all<- map_dfr(
#   excel_sheets("_data/ActiveDuty_MaritalStatus.xls")[1:3],"science",
#   read_item)
# science_all
```
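One possible way to make the tidying step work for all three subjects is sketched below. It is not the function used above: `tidy_item_ids()` is a hypothetical helper, it assumes the raw sheet has already been read with the item column named `item`, and it swaps the `separate()` step for `str_extract()` to keep only the leading item number. The toy input stands in for a sheet from the workbook.

```r
library(dplyr)
library(stringr)
library(tibble)

# Hypothetical helper: tidy the item identifier column for any subject.
# Assumes `raw` has a character column named `item`.
tidy_item_ids <- function(raw, subject) {
  prefix <- case_when(
    subject == "science" ~ "sitem",
    subject == "math" ~ "mitem",
    subject == "ela" ~ "eitem"
  )
  raw %>%
    # Drop legend rows
    filter(!str_detect(item, "Legend|legend")) %>%
    # Keep the leading digits and prepend the subject prefix
    mutate(item = str_c(prefix, str_extract(item, "^[0-9]+"))) %>%
    # Name the column after the subject prefix, e.g. `mitem`
    rename(!!prefix := item)
}

# Toy input with made-up rows
raw_math <- tibble(
  item = c("1", "2.0", "Legend"),
  Type = c("SR", "CR", NA)
)

math_items <- tidy_item_ids(raw_math, "math")
math_items
```

Because the column is only renamed in the last step, every earlier step can refer to a single fixed name regardless of subject.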

## MCAS 2022 Read-In Tidy

::: panel-tabset

### Read in Student Performance Data

```{r}

#Filter, rename variables, and mutate values of variables on read-in

MCAS_2022<-read_csv("_data/PrivateSpring2022_MCAS_full_preliminary_results_04830305.csv",

skip=1)%>%

select(-c("sprp_dis", "sprp_sch", "sprp_dis_name", "sprp_sch_name", "sprp_orgtype",

"schtype", "testschoolname", "yrsindis", "conenr_dis"))%>%

#Recode all nominal variables as characters

mutate(testschoolcode = as.character(testschoolcode))%>%

# mutate(sasid = as.character(sasid))%>%

mutate(highneeds = as.character(highneeds))%>%

mutate(lowincome = as.character(lowincome))%>%

mutate(title1 = as.character(title1))%>%

mutate(ever_EL = as.character(ever_EL))%>%

mutate(EL = as.character(EL))%>%

mutate(EL_FormerEL = as.character(EL_FormerEL))%>%

mutate(FormerEL = as.character(FormerEL))%>%

mutate(ELfirstyear = as.character(ELfirstyear))%>%

mutate(IEP = as.character(IEP))%>%

mutate(plan504 = as.character(plan504))%>%

mutate(firstlanguage = as.character(firstlanguage))%>%

mutate(nature0fdis = as.character(natureofdis))%>%

mutate(spedplacement = as.character(spedplacement))%>%

mutate(town = as.character(town))%>%

mutate(ssubject = as.character(ssubject))%>%

#Recode all ordinal variables as factors

mutate(grade = as.factor(grade))%>%

mutate(levelofneed = as.factor(levelofneed))%>%

mutate(eperf2 = recode_factor(eperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(eperflev = recode_factor(eperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"DNT" = "DNT",

"ABS" = "ABS",

.ordered = TRUE))%>%

mutate(mperf2 = recode_factor(mperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(mperflev = recode_factor(mperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"INV" = "INV",

"ABS" = "ABS",

.ordered = TRUE))%>%

# The science variables contain a mixture of legacy performance levels and

# next generation performance levels which needs to be addressed in the ordering

# of these factors.

mutate(sperf2 = recode_factor(sperf2,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

.ordered = TRUE))%>%

mutate(sperflev = recode_factor(sperflev,

"E" = "E",

"M" = "M",

"PM" = "PM",

"NM"= "NM",

"INV" = "INV",

"ABS" = "ABS",

.ordered = TRUE))%>%

#recode DOB using lubridate

mutate(dob = mdy(dob,

quiet = FALSE,

tz = NULL,

locale = Sys.getlocale("LC_TIME"),

truncated = 0

))

#view(MCAS_2022)

MCAS_2022

```

### Workflow Summary

After examining the summary (see appendix), I chose to

**Filter**:

- _SchoolID_ : Several variables identify our school; I removed all

but one, `testschoolcode`.

- _StudentPrivacy_: I kept the `sasid` variable, which is a student identifier number,

but eliminated all values corresponding to students' names.

- `dis`: We are a charter school within our own unique district, therefore any

"district level" data is identical to our "school level" data.

__Rename__

I have not yet renamed any variables, but there are some naming patterns to note:

- an `e` before most `ELA` MCAS student item performance metric variables

- an `m` before most `Math` MCAS student item performance metric variables

- an `s` before most `Science` MCAS student item performance metric variables

__Mutate__

I left as __doubles__

- variables that measured scores on specific MCAS items e.g., `mitem1`

- variables that measured student growth percentiles (`sgp`)

- variables that counted a student's years in the school system or state.

Recode to __char__

- variables that are __nominal__ but have numeric values, e.g., `town`

Refactor as __ord__

- variables that are __ordinal__, e.g., `mperflev`.

Recode to __date__

- `dob` using lubridate.
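The recoding decisions listed above can be sketched more compactly with `across()`. This is only an illustration on a made-up toy frame, not the code used for `MCAS_2022`; the column values are invented, and the factor level order simply mirrors the order used in the read-in chunk.

```r
library(dplyr)
library(tibble)
library(lubridate)

# Toy stand-in for a few MCAS_2022 columns (all values are made up)
toy <- tibble(
  town = c(239, 145),
  IEP = c(0, 1),
  grade = c(9, 10),
  mperflev = c("M", "PM"),
  dob = c("01/15/2007", "11/02/2006")
)

toy_tidy <- toy %>%
  # Nominal variables stored as numbers become characters in one step
  mutate(across(c(town, IEP), as.character)) %>%
  # Grade becomes a factor
  mutate(grade = as.factor(grade)) %>%
  # Ordinal variable becomes an ordered factor (same level order as the read-in chunk)
  mutate(mperflev = factor(mperflev,
                           levels = c("E", "M", "PM", "NM"),
                           ordered = TRUE)) %>%
  # Month/day/year strings become Date values
  mutate(dob = mdy(dob))

toy_tidy
```

Listing the nominal columns once inside `across()` also avoids the risk of mistyping a column name on the left-hand side of a single `mutate()` call.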

:::

## G9Science Read-in and Join

::: panel-tabset

### G9 Science Read-In

```{r}

# G9 Science Item analysis

MCAS_G9Sci2022_Item<-read_item("SG9Physics", "science")

MCAS_G9Sci2022_Item

#view(MCAS_G9Sci2022_Item)

```

```{r}

G9Sci_CU306Dis<-read_excel("_data/MCAS CU306 2022/CU306MCAS2022PhysicsGrade9ByDisability.xlsm",

sheet = "Disabled Students",

col_names = c("Reporting Category", "Possible Points", "RT%Points",

"State%Points", "RT-State Diff"))%>%

filter(`Reporting Category` %in% c("Energy", "Motion, Forces, and Interactions", "Waves"))

#view(G9Sci_CU306Dis)

G9Sci_CU306Dis

```

```{r}

G9Sci_CU306NonDis<-read_excel("_data/MCAS CU306 2022/CU306MCAS2022PhysicsGrade9ByDisability.xlsm",

sheet = "Non-Disabled Students",

col_names = c("Reporting Category", "Possible Points", "RT%Points",

"State%Points", "RT-State Diff"))%>%

filter(`Reporting Category` %in% c("Energy", "Motion, Forces, and Interactions", "Waves"))

G9Sci_CU306NonDis

#view(G9Sci_CU306NonDis)

```

### Tidy Data and Join

I am interested in analyzing the 9th Grade Science performance. To do this, I

select a subset of our data frame that includes:

- 9th Grade students who took the Introductory Physics test

- Scores on the 42 Science Items

- Demographic characteristics of the students.

```{r}

G9ScienceMCAS_2022 <- select(MCAS_2022, contains("sitem"), gender, grade, yrsinsch,

race, IEP, `plan504`, sattempt, sperflev)%>%

filter((grade == 9) & sattempt != "N")

G9ScienceMCAS_2022<-select(G9ScienceMCAS_2022, !(contains("43")|contains("44")|contains("45")))

#view(G9ScienceMCAS_2022)

G9ScienceMCAS_2022

```

When I compared this data frame to the state-reported analysis, I found a discrepancy: my data frame

has 69 entries, while the state reports data on only 68 students. I will have to investigate this further.
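One possible starting point for that investigation is to tabulate the performance levels and look for a student without a scored result. The sketch below assumes (without confirmation from the state report) that the extra row might carry a non-scoring level such as `INV` or `ABS`; the toy data and the `student` column are hypothetical.

```r
library(dplyr)
library(tibble)

# Toy stand-in for the Grade 9 subset (made-up values)
toy_students <- tibble(
  student = 1:5,
  sperflev = c("M", "PM", "E", "INV", "M")
)

# How many students fall into each performance level?
toy_students %>%
  count(sperflev, sort = TRUE)

# Levels that correspond to a scored test, as used in the read-in chunk
scored_levels <- c("E", "M", "PM", "NM")

# Rows the state might plausibly leave out of its item report
unscored <- toy_students %>%
  filter(!sperflev %in% scored_levels)

unscored
```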

Since I will join this data frame with the `MCAS_G9Sci2022_Item`, using `sitem` as the key, I need to pivot this data set longer.

```{r}

G9ScienceMCAS_2022<- pivot_longer(G9ScienceMCAS_2022, contains("sitem"), names_to = "sitem", values_to = "sitem_score")

#view(G9ScienceMCAS_2022)

G9ScienceMCAS_2022

```

As expected, we now have 42 X 69 = 2,898 rows.
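The row count follows from the shape of the pivot: each student contributes one row per item. A small sketch on made-up data (2 students, 3 items) shows the same arithmetic and how it could be asserted rather than checked by eye.

```r
library(dplyr)
library(tidyr)
library(tibble)

# Toy wide frame: one row per student, one column per item (made-up scores)
toy_wide <- tibble(
  gender = c("F", "M"),
  sitem1 = c(1, 0),
  sitem2 = c(2, 1),
  sitem3 = c(0, 1)
)

toy_long <- toy_wide %>%
  pivot_longer(contains("sitem"), names_to = "sitem", values_to = "sitem_score")

n_students <- nrow(toy_wide)
n_items <- sum(startsWith(names(toy_wide), "sitem"))

# The long frame should have students x items rows
stopifnot(nrow(toy_long) == n_students * n_items)
toy_long
```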

Now, we should be ready to join our data sets using `sitem` as the key. The long student frame has

10 columns and the item frame contributes 8 more (its 9 variables minus the shared key), so we should have a

2,898 by (10 + 8) = 2,898 by 18 data frame. We will also check our raw data against the

performance data reported by the state in the item report by calculating the `percent_earned`

by Rising Tide students on each item, comparing it to the reported figure `RT Percent Points`, and storing the

difference in `earned_diff`.

```{r}

G9Science_StudentItem <- G9ScienceMCAS_2022 %>%

left_join(MCAS_G9Sci2022_Item, "sitem")

#view(G9Science_StudentItem)

G9Science_StudentItem

G9Science_StudentItem%>%

group_by(sitem)%>%

summarise(percent_earned = round(sum(sitem_score, na.rm=TRUE)/sum(`item Possible Points`, na.rm=TRUE),2) )%>%

left_join(MCAS_G9Sci2022_Item, "sitem")%>%

mutate(earned_diff = percent_earned-`RT Percent Points`)

```

As expected, we now have a 2,898 X 18 data frame and the `earned_diff` values all

round to 0.
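The same two checks can also be written as assertions so that a future data refresh fails loudly instead of relying on visual inspection. The sketch below uses made-up toy frames; the 0.005 tolerance is an assumption chosen to match rounding to two decimal places.

```r
library(dplyr)
library(tibble)

# Toy long student-item scores (made-up values)
toy_scores <- tibble(
  sitem = rep(c("sitem1", "sitem2"), each = 2),
  sitem_score = c(1, 0, 2, 1)
)

# Toy item report with the reported proportion of points earned
toy_items <- tibble(
  sitem = c("sitem1", "sitem2"),
  `item Possible Points` = c(1, 2),
  `RT Percent Points` = c(0.50, 0.75)
)

# Score rows whose key has no match in the item report
unmatched <- anti_join(toy_scores, toy_items, by = "sitem")

check <- toy_scores %>%
  left_join(toy_items, by = "sitem") %>%
  group_by(sitem) %>%
  summarise(
    percent_earned = round(sum(sitem_score) / sum(`item Possible Points`), 2),
    reported = first(`RT Percent Points`)
  ) %>%
  mutate(earned_diff = percent_earned - reported)

# Every key matched, and every difference is within rounding error
stopifnot(nrow(unmatched) == 0)
stopifnot(all(abs(check$earned_diff) < 0.005))
check
```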

:::

## G9 Science Performance Analysis

When considering our student performance data, we hope to address the following broad questions:

- What adjustments (if any) should be made at the Tier 1 level, i.e., curricular adjustments

for all students?

- What would be the most beneficial areas of focus for a targeted intervention course for

students struggling to meet or exceed performance expectations?

- Are there notable differences in student performance for students with and without disabilities?

::: panel-tabset

### Performance by Content Strands

What reporting categories were emphasized by the state?

We can see from our summary that 50% of the exam points (30 of the available 60) come from the Motion and Forces reporting category, which also accounts for 23 of the 42 questions.

```{r}

G9Science_Cat_Total<-MCAS_G9Sci2022_Item%>%

select(`sitem`, `item Possible Points`, `Reporting Category`, `State Percent Points`, `RT Percent Points`, `RT-State Diff`)

G9Science_Cat_Total%>%

group_by(`Reporting Category`)%>%

summarise(available_points = sum(`item Possible Points`, na.rm=TRUE),

RT_percent_points = mean(`RT Percent Points`, na.rm = TRUE),

State_percent_points = mean(`State Percent Points`, na.rm = TRUE))

```

```{r}

ggplot(G9Science_Cat_Total, aes(x= `Reporting Category`, fill = `Reporting Category`))+

geom_bar( alpha=0.9) +