Code

import nltk

import re

from string import digits

import pandas as pd

Yan Shi

| | author_id | username | author_followers | author_tweets | author_description | author_location | text | created_at | geo_id | retweets | replies | likes | quote_count | geo_name | states_abbrev |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2729932651 | TwelveRivers12 | 367 | 1862 | We strive to raise the bar of what it means to... | Austin, TX | #WFH but make it fashion (Twelve Rivers fashio... | 2020-12-19 20:00:14+00:00 | c3f37afa9efcf94b | 1 | 0 | 1 | 1 | Austin, TX | TX |

| 1 | 483173029 | CarrieHOlerich | 2138 | 88698 | Driven, passionate #Communications graduate.🎓📚... | Nebraska, USA | Late night evening #wfh vibes finish my evenin... | 2020-12-19 07:12:54+00:00 | 0fc2e8f588955000 | 0 | 0 | 1 | 0 | Johnny Goodman Golf Course | NaN |

| 2 | 389908361 | JuanC611 | 214 | 12248 | I'm a #BCB, craft beer drinkin #Kaskade listen... | Oxnard, CA | Step 2, in progress...\n#wfh #wfhlife @ Riverp... | 2020-12-19 02:56:54+00:00 | a3c0ae863771d69e | 0 | 0 | 0 | 0 | Oxnard, CA | CA |

| 3 | 737763400118198277 | MissionTXperts | 828 | 1618 | Follow us on IG! @missiontxperts #FamousForExp... | Mission, TX | Congratulations on your graduation!!! Welcome ... | 2020-12-18 22:35:35+00:00 | 77633125ba089dcb | 1 | 1 | 15 | 1 | Mission, TX | TX |

| 4 | 522212036 | FitnessFoundry | 2693 | 14002 | Award Winning Personal Trainer| EMT-B 🚑 NSCA-R... | Boston and Malden, MA | Part 2 #HomeWorkout \n\n#OldSchool Jumping Jac... | 2020-12-18 19:07:33+00:00 | 75f5a403163f6f95 | 1 | 0 | 1 | 0 | Malden, MA | MA |

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 31965 entries, 0 to 31964

Data columns (total 15 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 author_id 31965 non-null int64

1 username 31958 non-null object

2 author_followers 31965 non-null int64

3 author_tweets 31965 non-null int64

4 author_description 30868 non-null object

5 author_location 30154 non-null object

6 text 31965 non-null object

7 created_at 31965 non-null object

8 geo_id 31965 non-null object

9 retweets 31965 non-null int64

10 replies 31965 non-null int64

11 likes 31965 non-null int64

12 quote_count 31965 non-null int64

13 geo_name 31965 non-null object

14 states_abbrev 30392 non-null object

dtypes: int64(7), object(8)

memory usage: 3.7+ MB

import string

string.punctuation

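def clean_text(text):
    '''
    Clean a tweet: lowercase it and remove numbers, URLs, punctuation, newlines, and special characters.
    '''
    text = text.lower()
    # drop every digit character
    text = ''.join([i for i in text if not i.isdigit()])
    # drop text in square brackets, URLs, and HTML-like tags
    text = re.sub(r'\[.*?\]', '', text)
    text = re.sub(r'https?://\S+|www\.\S+', '', text)
    text = re.sub(r'<.*?>+', '', text)
    # replace punctuation with spaces; this also strips the '@' and '#' symbols
    text = re.sub('[%s]' % re.escape(string.punctuation), ' ', text)
    text = re.sub(r'\n', '', text)
    tweet = re.sub(r'\w*\d\w*', '', text)
    # '@' and '#' are already gone at this point, so these two patterns match nothing
    # and the mention and hashtag words stay in the text
    cleaned = re.sub(r"@[A-Za-z0-9_]+", "", tweet)
    cleaned = re.sub(r"#[A-Za-z0-9_]+", "", cleaned)
    cleaned = re.sub(r'http\S+', '', cleaned)
    return cleaned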

def text_preprocessing(text):
    '''
    Clean the text, tokenize it on word characters, and rejoin the tokens with single spaces.
    '''
    tokenizer = nltk.tokenize.RegexpTokenizer(r'\w+')
    nopunct = clean_text(text)
    tokenized_text = tokenizer.tokenize(nopunct)
    combined_text = ' '.join(tokenized_text)
    return combined_text

| | author_id | username | author_followers | author_tweets | author_description | author_location | text | created_at | geo_id | retweets | replies | likes | quote_count | geo_name | states_abbrev | clean_text |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2729932651 | TwelveRivers12 | 367 | 1862 | We strive to raise the bar of what it means to... | Austin, TX | #WFH but make it fashion (Twelve Rivers fashio... | 2020-12-19 20:00:14+00:00 | c3f37afa9efcf94b | 1 | 0 | 1 | 1 | Austin, TX | TX | wfh but make it fashion twelve rivers fashion ... |

| 1 | 483173029 | CarrieHOlerich | 2138 | 88698 | Driven, passionate #Communications graduate.🎓📚... | Nebraska, USA | Late night evening #wfh vibes finish my evenin... | 2020-12-19 07:12:54+00:00 | 0fc2e8f588955000 | 0 | 0 | 1 | 0 | Johnny Goodman Golf Course | NaN | late night evening wfh vibes finish my evening... |

| 2 | 389908361 | JuanC611 | 214 | 12248 | I'm a #BCB, craft beer drinkin #Kaskade listen... | Oxnard, CA | Step 2, in progress...\n#wfh #wfhlife @ Riverp... | 2020-12-19 02:56:54+00:00 | a3c0ae863771d69e | 0 | 0 | 0 | 0 | Oxnard, CA | CA | step in progress wfh wfhlife riverpark |

| 3 | 737763400118198277 | MissionTXperts | 828 | 1618 | Follow us on IG! @missiontxperts #FamousForExp... | Mission, TX | Congratulations on your graduation!!! Welcome ... | 2020-12-18 22:35:35+00:00 | 77633125ba089dcb | 1 | 1 | 15 | 1 | Mission, TX | TX | congratulations on your graduation welcome to ... |

| 4 | 522212036 | FitnessFoundry | 2693 | 14002 | Award Winning Personal Trainer| EMT-B 🚑 NSCA-R... | Boston and Malden, MA | Part 2 #HomeWorkout \n\n#OldSchool Jumping Jac... | 2020-12-18 19:07:33+00:00 | 75f5a403163f6f95 | 1 | 0 | 1 | 0 | Malden, MA | MA | part homeworkout oldschool jumping jack variat... |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 31960 | 2398266878 | realNickWake | 395 | 7586 | #Michiganian / #Michigander, Ardent #Capitalis... | Michigan, USA | @rstudley Open up businesses to determine what... | 2021-03-01 16:05:06+00:00 | 6231ced9cc2b96aa | 2 | 0 | 1 | 0 | Grandville, MI | MI | rstudley open up businesses to determine what ... |

| 31961 | 1180842344 | ksgates__ | 702 | 7563 | Marketing & Membership at LCC | @CMAA | Perman... | NaN | Gearing up for a busy spring welcoming new mem... | 2021-03-01 15:55:28+00:00 | 27c45d804c777999 | 0 | 0 | 1 | 0 | Kansas, USA | KS | gearing up for a busy spring welcoming new mem... |

| 31962 | 17499112 | ahurwitz03 | 207 | 1796 | PSA: my new favorite color is green 💚 meow - U... | Vermont | Wearing carhartts today to pretend like I’m do... | 2021-03-01 13:28:16+00:00 | 9aa25269f04766ab | 0 | 0 | 2 | 0 | Vermont, USA | VT | wearing carhartts today to pretend like i m do... |

| 31963 | 483173029 | CarrieHOlerich | 2141 | 89128 | Driven, passionate #Communications graduate.🎓📚... | Nebraska, USA | If laying on the sofa laughing about Twtter du... | 2021-03-01 07:41:20+00:00 | a84b808ce3f11719 | 0 | 0 | 1 | 0 | Omaha, NE | NE | if laying on the sofa laughing about twtter du... |

| 31964 | 483173029 | CarrieHOlerich | 2141 | 89128 | Driven, passionate #Communications graduate.🎓📚... | Nebraska, USA | Blasting some #Queen during #wfh\n👩💻 #myfirst... | 2021-03-01 00:18:24+00:00 | 0fc2e8f588955000 | 0 | 0 | 1 | 0 | Johnny Goodman Golf Course | NaN | blasting some queen during wfh myfirstconcert ... |

31965 rows × 16 columns
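To see what the cleaning step does to a single tweet, here is a minimal stand-alone sketch. It reproduces only the main steps of `clean_text` (lowercasing, URL removal, punctuation stripping, dropping tokens that contain digits). The second function, `clean_drop_tags`, is a hypothetical variant that removes mentions and hashtags before punctuation is stripped; it and the sample tweet are illustrative assumptions, not part of the original pipeline.

```python
import re
import string

PUNCT_RE = re.compile('[%s]' % re.escape(string.punctuation))

def clean_keep_tag_words(text):
    """Mirror the notebook's order: punctuation is stripped, so tag words survive."""
    text = text.lower()
    text = re.sub(r'https?://\S+|www\.\S+', '', text)  # remove URLs
    text = PUNCT_RE.sub(' ', text)                     # '@' and '#' become spaces here
    text = re.sub(r'\w*\d\w*', '', text)               # drop tokens containing digits
    return ' '.join(text.split())                      # collapse whitespace

def clean_drop_tags(text):
    """Hypothetical variant: drop @mentions and #hashtags first, then clean as above."""
    text = re.sub(r'[@#]\w+', '', text)
    return clean_keep_tag_words(text)

# Made-up sample tweet for illustration
sample = "Step 2, in progress... #wfh @someone https://example.com/x"
print(clean_keep_tag_words(sample))
print(clean_drop_tags(sample))
```

Which variant is preferable depends on the analysis: keeping hashtag words preserves terms such as `wfh` for later filtering, while dropping them leaves only the free text.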

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

STOPWORDS = set(stopwords.words('english'))

def remove_stopwords(text):
    """Remove English stopwords from a whitespace-separated string."""
    return " ".join([word for word in str(text).split() if word not in STOPWORDS])

df['no_stopwords_text'] = df['clean_text'].apply(remove_stopwords)

[nltk_data] Downloading package stopwords to

[nltk_data] /Users/yanshi/nltk_data...

[nltk_data] Package stopwords is already up-to-date!

[nltk_data] Downloading package wordnet to /Users/yanshi/nltk_data...

[nltk_data] Package wordnet is already up-to-date!

| | author_id | username | author_followers | author_tweets | author_description | author_location | text | created_at | geo_id | retweets | replies | likes | quote_count | geo_name | states_abbrev | clean_text | no_stopwords_text | no_remotework_text | lematize_text |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2729932651 | TwelveRivers12 | 367 | 1862 | We strive to raise the bar of what it means to... | Austin, TX | #WFH but make it fashion (Twelve Rivers fashio... | 2020-12-19 20:00:14+00:00 | c3f37afa9efcf94b | 1 | 0 | 1 | 1 | Austin, TX | TX | wfh but make it fashion twelve rivers fashion ... | wfh make fashion twelve rivers fashion office ... | make fashion twelve rivers fashion office big ... | make fashion twelve river fashion office big g... |

| 1 | 483173029 | CarrieHOlerich | 2138 | 88698 | Driven, passionate #Communications graduate.🎓📚... | Nebraska, USA | Late night evening #wfh vibes finish my evenin... | 2020-12-19 07:12:54+00:00 | 0fc2e8f588955000 | 0 | 0 | 1 | 0 | Johnny Goodman Golf Course | NaN | late night evening wfh vibes finish my evening... | late night evening wfh vibes finish evening wf... | late night evening vibes finish evening wfhlife | late night evening vibe finish evening wfhlife |

| 2 | 389908361 | JuanC611 | 214 | 12248 | I'm a #BCB, craft beer drinkin #Kaskade listen... | Oxnard, CA | Step 2, in progress...\n#wfh #wfhlife @ Riverp... | 2020-12-19 02:56:54+00:00 | a3c0ae863771d69e | 0 | 0 | 0 | 0 | Oxnard, CA | CA | step in progress wfh wfhlife riverpark | step progress wfh wfhlife riverpark | step progress wfhlife riverpark | step progress wfhlife riverpark |

| 3 | 737763400118198277 | MissionTXperts | 828 | 1618 | Follow us on IG! @missiontxperts #FamousForExp... | Mission, TX | Congratulations on your graduation!!! Welcome ... | 2020-12-18 22:35:35+00:00 | 77633125ba089dcb | 1 | 1 | 15 | 1 | Mission, TX | TX | congratulations on your graduation welcome to ... | congratulations graduation welcome missiontxpe... | congratulations graduation welcome missiontxpe... | congratulation graduation welcome missiontxper... |

| 4 | 522212036 | FitnessFoundry | 2693 | 14002 | Award Winning Personal Trainer| EMT-B 🚑 NSCA-R... | Boston and Malden, MA | Part 2 #HomeWorkout \n\n#OldSchool Jumping Jac... | 2020-12-18 19:07:33+00:00 | 75f5a403163f6f95 | 1 | 0 | 1 | 0 | Malden, MA | MA | part homeworkout oldschool jumping jack variat... | part homeworkout oldschool jumping jack variat... | part homeworkout oldschool jumping jack variat... | part homeworkout oldschool jumping jack variat... |
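The code that produced the `no_remotework_text` and `lematize_text` columns is not shown. Judging from the table, search keywords such as `wfh` were removed as whole tokens (the longer `wfhlife` survives), and plurals were reduced to singular forms, which would be consistent with a WordNet-style lemmatizer. The sketch below covers only the keyword removal; the `REMOTE_WORK_TERMS` list and the `remove_terms` helper are assumptions made for illustration.

```python
# Hypothetical keyword list; the notebook's actual list is not shown.
REMOTE_WORK_TERMS = {"wfh", "remotework", "workfromhome"}

def remove_terms(text, terms=REMOTE_WORK_TERMS):
    """Drop whole tokens found in `terms`; longer tokens such as 'wfhlife' are kept."""
    return " ".join(word for word in str(text).split() if word not in terms)

print(remove_terms("step progress wfh wfhlife riverpark"))
```

Matching whole tokens rather than substrings avoids mangling unrelated words that happen to contain a keyword.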

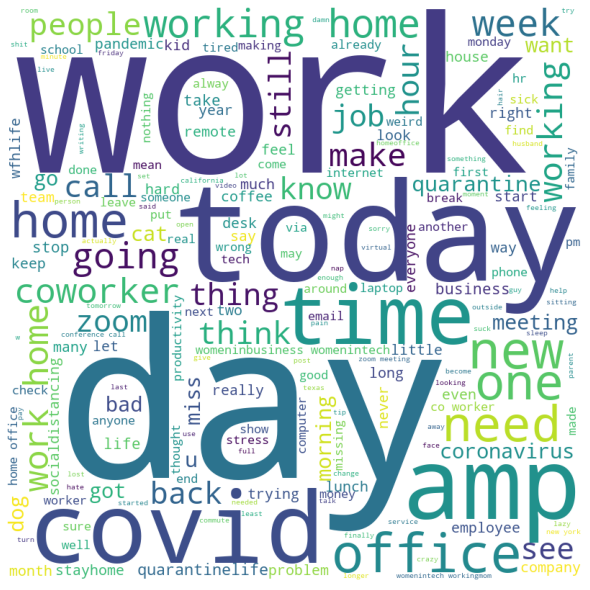

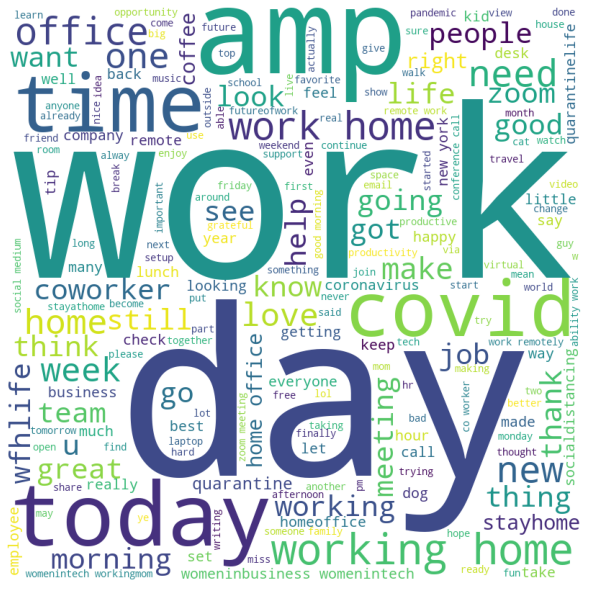

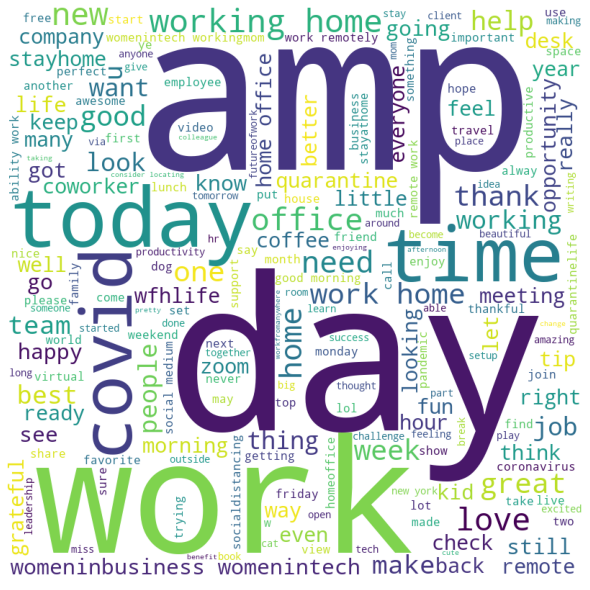

# generate word cloud

# join all lemmatized tweets into one space-separated string
tweets = ' '.join(df['lematize_text'].values)

from wordcloud import WordCloud
import matplotlib.pyplot as plt

wordcloud = WordCloud(width = 800, height = 800,
                      background_color ='white',
                      min_font_size = 10).generate(tweets)

# plot the WordCloud image

plt.figure(figsize = (8, 8), facecolor = None)

plt.imshow(wordcloud)

plt.axis("off")

plt.tight_layout(pad = 0)

plt.show()

Several words with positive sentiment appear in the remote-work tweets, such as love, best, great, and flexible.
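A word cloud shows relative prominence only visually. One way to check such an observation numerically is to count token frequencies directly. The following is a minimal sketch with a hypothetical helper `top_words` and toy input; with the notebook's data the input would presumably be `df['lematize_text']`.

```python
from collections import Counter

def top_words(texts, n=10):
    """Return the n most frequent whitespace-separated tokens across all texts."""
    counts = Counter()
    for text in texts:
        counts.update(str(text).split())
    return counts.most_common(n)

# Toy input for illustration only
toy = ["love flexible schedule", "great day love home office", "best coffee great view"]
print(top_words(toy, n=3))
```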

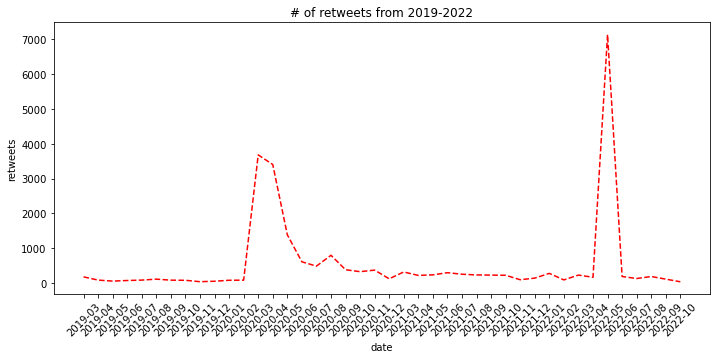

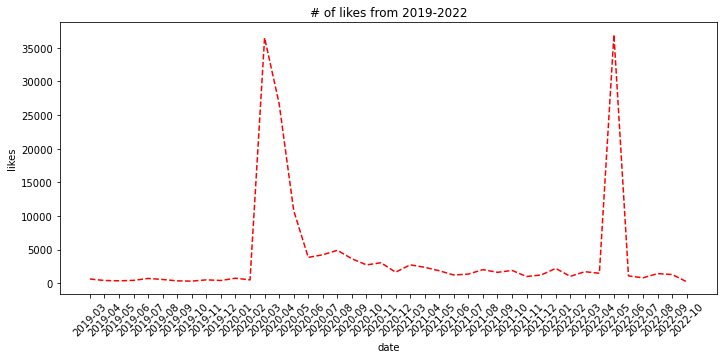

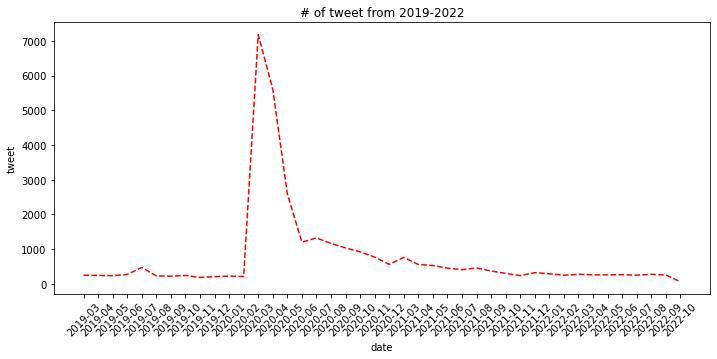

from datetime import datetime

from datetime import timedelta

# convert the created_at string to a date, then extract the month

df['date'] = df['created_at'].apply(lambda x: datetime.strptime(x, '%Y-%m-%d %H:%M:%S%z').date())

df['month'] = df['date'].apply(lambda x: x.month)

#create new column indicate # of tweet

df['tweet'] = 1

| Date | author_id | author_followers | author_tweets | retweets | replies | likes | quote_count | month | tweet |

|---|---|---|---|---|---|---|---|---|---|

| 2019-03 | 7.438393e+19 | 343788.0 | 2170734.0 | 183.0 | 47.0 | 628.0 | 17.0 | 732 | 244 |

| 2019-04 | 4.046708e+19 | 928765.0 | 3378692.0 | 90.0 | 41.0 | 405.0 | 17.0 | 948 | 237 |

| 2019-05 | 3.972946e+19 | 352691.0 | 2379015.0 | 61.0 | 38.0 | 360.0 | 6.0 | 1145 | 229 |

| 2019-06 | 4.395675e+19 | 1183557.0 | 7955395.0 | 78.0 | 37.0 | 424.0 | 3.0 | 1584 | 264 |

| 2019-07 | 3.932131e+19 | 1360104.0 | 18625242.0 | 90.0 | 75.0 | 714.0 | 8.0 | 3269 | 467 |

| 2019-08 | 4.410848e+19 | 1357105.0 | 6326535.0 | 117.0 | 71.0 | 554.0 | 10.0 | 1784 | 223 |

| 2019-09 | 3.765725e+19 | 1002927.0 | 3209900.0 | 88.0 | 62.0 | 352.0 | 9.0 | 1926 | 214 |

| 2019-10 | 3.544762e+19 | 768274.0 | 5372587.0 | 84.0 | 35.0 | 312.0 | 8.0 | 2360 | 236 |

| 2019-11 | 2.258816e+19 | 1078118.0 | 3426775.0 | 44.0 | 47.0 | 501.0 | 10.0 | 1969 | 179 |

| 2019-12 | 2.915014e+19 | 1189362.0 | 4754427.0 | 57.0 | 59.0 | 405.0 | 8.0 | 2400 | 200 |

| 2020-01 | 2.945092e+19 | 3087824.0 | 8221563.0 | 85.0 | 76.0 | 747.0 | 11.0 | 217 | 217 |

| 2020-02 | 4.105943e+19 | 1584795.0 | 5560972.0 | 88.0 | 35.0 | 496.0 | 12.0 | 410 | 205 |

| 2020-03 | 8.645601e+20 | 48557156.0 | 197921690.0 | 3679.0 | 3629.0 | 36473.0 | 694.0 | 21543 | 7181 |

| 2020-04 | 7.294542e+20 | 46979898.0 | 188548406.0 | 3402.0 | 2406.0 | 26683.0 | 609.0 | 22436 | 5609 |

| 2020-05 | 3.490690e+20 | 30129569.0 | 120193010.0 | 1389.0 | 993.0 | 10803.0 | 207.0 | 12975 | 2595 |

| 2020-06 | 1.649488e+20 | 17943489.0 | 70096650.0 | 614.0 | 341.0 | 3838.0 | 61.0 | 7176 | 1196 |

| 2020-07 | 1.955315e+20 | 17309655.0 | 63812046.0 | 490.0 | 440.0 | 4229.0 | 63.0 | 9226 | 1318 |

| 2020-08 | 1.570420e+20 | 16645513.0 | 60769499.0 | 801.0 | 557.0 | 4891.0 | 113.0 | 9280 | 1160 |

| 2020-09 | 1.735446e+20 | 9439745.0 | 41633450.0 | 387.0 | 383.0 | 3638.0 | 58.0 | 9279 | 1031 |

| 2020-10 | 1.817899e+20 | 8768590.0 | 40669344.0 | 331.0 | 317.0 | 2722.0 | 59.0 | 9170 | 917 |

| 2020-11 | 1.437103e+20 | 7627941.0 | 33446687.0 | 376.0 | 271.0 | 3041.0 | 79.0 | 8426 | 766 |

| 2020-12 | 1.083744e+20 | 4819769.0 | 22376433.0 | 127.0 | 199.0 | 1636.0 | 30.0 | 6660 | 555 |

| 2021-03 | 1.383146e+20 | 3995683.0 | 27766309.0 | 323.0 | 303.0 | 2735.0 | 45.0 | 2274 | 758 |

| 2021-04 | 9.634597e+19 | 4755020.0 | 27458348.0 | 224.0 | 224.0 | 2369.0 | 36.0 | 2212 | 553 |

| 2021-05 | 1.030695e+20 | 9989706.0 | 43211693.0 | 240.0 | 164.0 | 1853.0 | 40.0 | 2615 | 523 |

| 2021-06 | 8.698560e+19 | 5177755.0 | 24851971.0 | 302.0 | 105.0 | 1221.0 | 22.0 | 2688 | 448 |

| 2021-07 | 9.350160e+19 | 4866449.0 | 23239899.0 | 258.0 | 113.0 | 1359.0 | 45.0 | 2807 | 401 |

| 2021-08 | 1.433259e+20 | 5569418.0 | 28447352.0 | 238.0 | 150.0 | 2016.0 | 31.0 | 3616 | 452 |

| 2021-09 | 6.667617e+19 | 5335971.0 | 22639462.0 | 233.0 | 137.0 | 1619.0 | 24.0 | 3276 | 364 |

| 2021-10 | 7.075310e+19 | 3572529.0 | 14799941.0 | 228.0 | 94.0 | 1899.0 | 15.0 | 2950 | 295 |

| 2021-11 | 7.354641e+19 | 2237299.0 | 8141217.0 | 100.0 | 94.0 | 997.0 | 22.0 | 2541 | 231 |

| 2021-12 | 1.652327e+20 | 3041139.0 | 12163714.0 | 147.0 | 83.0 | 1224.0 | 14.0 | 3804 | 317 |

| 2022-01 | 9.561257e+19 | 1813404.0 | 7585565.0 | 282.0 | 155.0 | 2202.0 | 88.0 | 284 | 284 |

| 2022-02 | 9.447138e+19 | 1969710.0 | 9494066.0 | 95.0 | 91.0 | 1017.0 | 7.0 | 488 | 244 |

| 2022-03 | 1.105446e+20 | 1445940.0 | 6369174.0 | 234.0 | 108.0 | 1714.0 | 15.0 | 807 | 269 |

| 2022-04 | 8.211766e+19 | 925590.0 | 5163050.0 | 171.0 | 89.0 | 1479.0 | 20.0 | 1016 | 254 |

| 2022-05 | 9.748346e+19 | 1218399.0 | 4793837.0 | 7125.0 | 605.0 | 36933.0 | 1801.0 | 1265 | 253 |

| 2022-06 | 9.655524e+19 | 2835914.0 | 11209940.0 | 193.0 | 130.0 | 1089.0 | 25.0 | 1548 | 258 |

| 2022-07 | 9.918573e+19 | 1771341.0 | 8111514.0 | 135.0 | 113.0 | 806.0 | 14.0 | 1715 | 245 |

| 2022-08 | 1.049189e+20 | 1783001.0 | 7768621.0 | 193.0 | 107.0 | 1428.0 | 7.0 | 2152 | 269 |

| 2022-09 | 8.922183e+19 | 869384.0 | 3837078.0 | 117.0 | 142.0 | 1294.0 | 16.0 | 2250 | 250 |

| 2022-10 | 1.781892e+19 | 417164.0 | 1698870.0 | 42.0 | 21.0 | 217.0 | 3.0 | 540 | 54 |
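The table above appears to hold per-month sums, so only the `tweet` column is a true count. The `month` column, for example, is the month number multiplied by the number of tweets (732 = 3 × 244 for 2019-03). The grouping code itself is not shown; as a dependency-free sketch of the same idea, the hypothetical helper `tweets_per_month` below parses `created_at` strings in the format used above and counts tweets per year-month bucket.

```python
from collections import Counter
from datetime import datetime

def tweets_per_month(timestamps):
    """Count tweets per 'YYYY-MM' bucket from strings like '2020-12-19 20:00:14+00:00'."""
    counts = Counter()
    for ts in timestamps:
        created = datetime.strptime(ts, '%Y-%m-%d %H:%M:%S%z')
        counts[created.strftime('%Y-%m')] += 1
    # sort by the 'YYYY-MM' key so the months come out in chronological order
    return dict(sorted(counts.items()))

# Three timestamps taken from the rows shown earlier
sample = ["2020-12-19 20:00:14+00:00", "2020-12-18 22:35:35+00:00", "2021-03-01 16:05:06+00:00"]
print(tweets_per_month(sample))
```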

| | retweets | replies | likes | quote_count | #tweet | tweet | month |

|---|---|---|---|---|---|---|---|

| count | 12.000000 | 12.000000 | 12.000000 | 12.000000 | 12.000000 | 12.000000 | 12.000000 |

| mean | 278.416667 | 205.166667 | 1941.166667 | 45.916667 | 339.166667 | 339.166667 | 6.500000 |

| std | 253.683216 | 153.817385 | 1768.247557 | 33.494798 | 282.504331 | 282.504331 | 3.605551 |

| min | 78.000000 | 87.000000 | 733.000000 | 13.000000 | 98.000000 | 98.000000 | 1.000000 |

| 25% | 115.000000 | 122.750000 | 776.000000 | 23.250000 | 180.500000 | 180.500000 | 3.750000 |

| 50% | 184.500000 | 169.500000 | 1297.500000 | 36.000000 | 260.500000 | 260.500000 | 6.500000 |

| 75% | 324.500000 | 227.500000 | 2176.000000 | 66.500000 | 402.250000 | 402.250000 | 9.250000 |

| max | 977.000000 | 664.000000 | 6739.000000 | 127.000000 | 1160.000000 | 1160.000000 | 12.000000 |

<class 'pandas.core.frame.DataFrame'>

Int64Index: 768 entries, 25539 to 30069

Data columns (total 25 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 author_id 768 non-null int64

1 username 768 non-null object

2 author_followers 768 non-null int64

3 author_tweets 768 non-null int64

4 author_description 741 non-null object

5 author_location 714 non-null object

6 text 768 non-null object

7 created_at 768 non-null object

8 geo_id 768 non-null object

9 retweets 768 non-null int64

10 replies 768 non-null int64

11 likes 768 non-null int64

12 quote_count 768 non-null int64

13 geo_name 768 non-null object

14 states_abbrev 734 non-null object

15 clean_text 768 non-null object

16 no_stopwords_text 768 non-null object

17 no_remotework_text 768 non-null object

18 lematize_text 768 non-null object

19 date 768 non-null object

20 month 768 non-null int64

21 tweet 768 non-null int64

22 scores 768 non-null object

23 compound_score 768 non-null float64

24 sentiment 768 non-null object

dtypes: float64(1), int64(9), object(15)

memory usage: 156.0+ KB

# topic model

import gensim

import gensim.corpora as corpora

from pprint import pprint

import pyLDAvis

import pyLDAvis.gensim_models as gensimvis

def sent_to_words(sentences):
    '''
    Tokenize each sentence into a list of lowercase words.
    '''
    for sentence in sentences:
        # deacc=True strips accent marks; simple_preprocess also drops punctuation while tokenizing
        yield gensim.utils.simple_preprocess(str(sentence), deacc=True)

data_words = list(sent_to_words(df_second_peak['lematize_text'].values.tolist()))

# Create Dictionary

id2word = corpora.Dictionary(data_words)

# Create Corpus

texts = data_words

# Term Document Frequency

corpus = [id2word.doc2bow(text) for text in texts]

# number of topics

num_topics = 5

# Build LDA model

lda_model = gensim.models.ldamodel.LdaModel(corpus=corpus,
                                            id2word=id2word,
                                            num_topics=num_topics,
                                            random_state=100,
                                            update_every=1,
                                            chunksize=100,
                                            passes=10,
                                            alpha='auto')

INFO 2022-10-28 14:59:41,055 dictionary.py:201] adding document #0 to Dictionary<0 unique tokens: []>

INFO 2022-10-28 14:59:41,070 dictionary.py:206] built Dictionary<4059 unique tokens: ['advice', 'blackintech', 'coding', 'comment', 'computerscience']...> from 768 documents (total 10300 corpus positions)

INFO 2022-10-28 14:59:41,071 utils.py:448] Dictionary lifecycle event {'msg': "built Dictionary<4059 unique tokens: ['advice', 'blackintech', 'coding', 'comment', 'computerscience']...> from 768 documents (total 10300 corpus positions)", 'datetime': '2022-10-28T14:59:41.070998', 'gensim': '4.2.0', 'python': '3.9.7 (default, Sep 16 2021, 08:50:36) \n[Clang 10.0.0 ]', 'platform': 'macOS-10.16-x86_64-i386-64bit', 'event': 'created'}

INFO 2022-10-28 14:59:41,079 ldamodel.py:595] using autotuned alpha, starting with [0.2, 0.2, 0.2, 0.2, 0.2]

INFO 2022-10-28 14:59:41,079 ldamodel.py:576] using symmetric eta at 0.2

INFO 2022-10-28 14:59:41,080 ldamodel.py:481] using serial LDA version on this node

INFO 2022-10-28 14:59:41,083 ldamodel.py:947] running online (multi-pass) LDA training, 5 topics, 10 passes over the supplied corpus of 768 documents, updating model once every 100 documents, evaluating perplexity every 768 documents, iterating 50x with a convergence threshold of 0.001000

INFO 2022-10-28 14:59:41,083 ldamodel.py:1001] PROGRESS: pass 0, at document #100/768

INFO 2022-10-28 14:59:41,138 ldamodel.py:794] optimized alpha [0.15121561, 0.14029418, 0.13779749, 0.1766257, 0.14536503]

INFO 2022-10-28 14:59:41,139 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,141 ldamodel.py:1196] topic #0 (0.151): 0.013*"edge" + 0.012*"amp" + 0.011*"dell" + 0.011*"remote" + 0.010*"work" + 0.009*"happen" + 0.009*"business" + 0.009*"tech" + 0.008*"make" + 0.008*"job"

INFO 2022-10-28 14:59:41,142 ldamodel.py:1196] topic #1 (0.140): 0.012*"work" + 0.011*"meeting" + 0.009*"coffee" + 0.008*"office" + 0.007*"see" + 0.007*"time" + 0.006*"need" + 0.006*"week" + 0.006*"fascinating" + 0.006*"hybridwork"

INFO 2022-10-28 14:59:41,142 ldamodel.py:1196] topic #2 (0.138): 0.011*"great" + 0.008*"amp" + 0.007*"job" + 0.006*"know" + 0.006*"edge" + 0.006*"remote" + 0.005*"park" + 0.005*"one" + 0.005*"java" + 0.005*"knowledge"

INFO 2022-10-28 14:59:41,143 ldamodel.py:1196] topic #3 (0.177): 0.023*"office" + 0.021*"remote" + 0.020*"work" + 0.009*"workplace" + 0.009*"amp" + 0.008*"today" + 0.007*"back" + 0.007*"airbnb" + 0.005*"great" + 0.005*"one"

INFO 2022-10-28 14:59:41,143 ldamodel.py:1196] topic #4 (0.145): 0.028*"california" + 0.013*"iphonography" + 0.013*"shotoniphone" + 0.013*"nature" + 0.013*"naturelovers" + 0.013*"mvt" + 0.011*"amp" + 0.008*"work" + 0.008*"milpitas" + 0.008*"autocruise"

INFO 2022-10-28 14:59:41,144 ldamodel.py:1074] topic diff=4.303751, rho=1.000000

INFO 2022-10-28 14:59:41,144 ldamodel.py:1001] PROGRESS: pass 0, at document #200/768

INFO 2022-10-28 14:59:41,183 ldamodel.py:794] optimized alpha [0.16121417, 0.15894547, 0.14214501, 0.18443114, 0.13119942]

INFO 2022-10-28 14:59:41,184 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,186 ldamodel.py:1196] topic #0 (0.161): 0.015*"business" + 0.013*"workbnb" + 0.012*"work" + 0.011*"company" + 0.011*"job" + 0.011*"worker" + 0.010*"travel" + 0.010*"traveling" + 0.009*"life" + 0.009*"futureofwork"

INFO 2022-10-28 14:59:41,187 ldamodel.py:1196] topic #1 (0.159): 0.019*"work" + 0.013*"meeting" + 0.011*"time" + 0.010*"home" + 0.007*"office" + 0.007*"much" + 0.007*"internet" + 0.007*"coffee" + 0.006*"call" + 0.006*"experience"

INFO 2022-10-28 14:59:41,187 ldamodel.py:1196] topic #2 (0.142): 0.009*"entrepreneur" + 0.009*"blackintech" + 0.008*"amp" + 0.007*"long" + 0.007*"know" + 0.006*"day" + 0.006*"coming" + 0.006*"texas" + 0.006*"job" + 0.005*"one"

INFO 2022-10-28 14:59:41,188 ldamodel.py:1196] topic #3 (0.184): 0.036*"work" + 0.024*"remote" + 0.016*"office" + 0.015*"home" + 0.010*"job" + 0.009*"anywhere" + 0.008*"back" + 0.008*"working" + 0.007*"startup" + 0.007*"available"

INFO 2022-10-28 14:59:41,188 ldamodel.py:1196] topic #4 (0.131): 0.013*"california" + 0.010*"change" + 0.010*"grateful" + 0.009*"work" + 0.008*"lake" + 0.007*"able" + 0.007*"corporate" + 0.007*"amp" + 0.006*"many" + 0.006*"family"

INFO 2022-10-28 14:59:41,189 ldamodel.py:1074] topic diff=0.698342, rho=0.707107

INFO 2022-10-28 14:59:41,189 ldamodel.py:1001] PROGRESS: pass 0, at document #300/768

INFO 2022-10-28 14:59:41,226 ldamodel.py:794] optimized alpha [0.15998477, 0.16423175, 0.16239658, 0.18531369, 0.13525054]

INFO 2022-10-28 14:59:41,227 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,229 ldamodel.py:1196] topic #0 (0.160): 0.021*"business" + 0.020*"work" + 0.011*"career" + 0.011*"dream" + 0.011*"like" + 0.011*"goal" + 0.010*"mentorship" + 0.010*"workathome" + 0.010*"profit" + 0.010*"finance"

INFO 2022-10-28 14:59:41,229 ldamodel.py:1196] topic #1 (0.164): 0.027*"work" + 0.015*"home" + 0.014*"time" + 0.012*"coffee" + 0.011*"meeting" + 0.011*"nowhiring" + 0.009*"usa" + 0.009*"california" + 0.008*"got" + 0.006*"choose"

INFO 2022-10-28 14:59:41,230 ldamodel.py:1196] topic #2 (0.162): 0.015*"entrepreneur" + 0.012*"follow" + 0.011*"day" + 0.010*"morning" + 0.009*"long" + 0.009*"job" + 0.009*"let" + 0.008*"realestate" + 0.008*"place" + 0.007*"home"

INFO 2022-10-28 14:59:41,230 ldamodel.py:1196] topic #3 (0.185): 0.049*"work" + 0.024*"home" + 0.015*"office" + 0.012*"remote" + 0.010*"like" + 0.009*"back" + 0.008*"comfort" + 0.008*"vacation" + 0.007*"today" + 0.007*"still"

INFO 2022-10-28 14:59:41,231 ldamodel.py:1196] topic #4 (0.135): 0.018*"california" + 0.009*"work" + 0.007*"home" + 0.007*"good" + 0.006*"time" + 0.006*"corporate" + 0.006*"amp" + 0.006*"thing" + 0.006*"grateful" + 0.005*"day"

INFO 2022-10-28 14:59:41,232 ldamodel.py:1074] topic diff=0.608546, rho=0.577350

INFO 2022-10-28 14:59:41,232 ldamodel.py:1001] PROGRESS: pass 0, at document #400/768

INFO 2022-10-28 14:59:41,265 ldamodel.py:794] optimized alpha [0.16158435, 0.17259955, 0.17474662, 0.21464582, 0.13420783]

INFO 2022-10-28 14:59:41,266 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,268 ldamodel.py:1196] topic #0 (0.162): 0.021*"love" + 0.017*"work" + 0.015*"business" + 0.011*"saturday" + 0.011*"good" + 0.010*"get" + 0.009*"dream" + 0.008*"life" + 0.008*"go" + 0.008*"today"

INFO 2022-10-28 14:59:41,268 ldamodel.py:1196] topic #1 (0.173): 0.018*"work" + 0.015*"time" + 0.012*"home" + 0.009*"need" + 0.009*"meeting" + 0.008*"much" + 0.008*"coffee" + 0.007*"today" + 0.007*"california" + 0.007*"got"

INFO 2022-10-28 14:59:41,269 ldamodel.py:1196] topic #2 (0.175): 0.015*"entrepreneur" + 0.014*"day" + 0.012*"sunday" + 0.012*"job" + 0.011*"realestate" + 0.010*"let" + 0.009*"morning" + 0.008*"check" + 0.008*"businessowner" + 0.007*"follow"

INFO 2022-10-28 14:59:41,270 ldamodel.py:1196] topic #3 (0.215): 0.032*"work" + 0.021*"home" + 0.019*"office" + 0.011*"back" + 0.011*"today" + 0.009*"monday" + 0.009*"new" + 0.009*"like" + 0.008*"thursday" + 0.007*"working"

INFO 2022-10-28 14:59:41,270 ldamodel.py:1196] topic #4 (0.134): 0.019*"california" + 0.009*"group" + 0.008*"good" + 0.008*"home" + 0.008*"sold" + 0.007*"ready" + 0.007*"amazing" + 0.006*"listed" + 0.006*"realestateagent" + 0.006*"souza"

INFO 2022-10-28 14:59:41,271 ldamodel.py:1074] topic diff=0.594212, rho=0.500000

INFO 2022-10-28 14:59:41,271 ldamodel.py:1001] PROGRESS: pass 0, at document #500/768

INFO 2022-10-28 14:59:41,308 ldamodel.py:794] optimized alpha [0.1681245, 0.18190226, 0.17842254, 0.22165008, 0.1390742]

INFO 2022-10-28 14:59:41,310 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,311 ldamodel.py:1196] topic #0 (0.168): 0.018*"love" + 0.017*"work" + 0.011*"business" + 0.010*"life" + 0.010*"trying" + 0.010*"get" + 0.009*"job" + 0.009*"go" + 0.008*"make" + 0.008*"good"

INFO 2022-10-28 14:59:41,312 ldamodel.py:1196] topic #1 (0.182): 0.019*"work" + 0.015*"time" + 0.012*"home" + 0.012*"need" + 0.008*"meeting" + 0.007*"today" + 0.007*"much" + 0.007*"zoom" + 0.007*"get" + 0.007*"even"

INFO 2022-10-28 14:59:41,313 ldamodel.py:1196] topic #2 (0.178): 0.015*"job" + 0.014*"realestate" + 0.013*"entrepreneur" + 0.013*"day" + 0.013*"another" + 0.009*"sunday" + 0.007*"morning" + 0.007*"way" + 0.006*"home" + 0.006*"let"

INFO 2022-10-28 14:59:41,314 ldamodel.py:1196] topic #3 (0.222): 0.036*"work" + 0.021*"home" + 0.016*"office" + 0.011*"working" + 0.011*"today" + 0.009*"back" + 0.009*"like" + 0.007*"new" + 0.007*"still" + 0.006*"week"

INFO 2022-10-28 14:59:41,314 ldamodel.py:1196] topic #4 (0.139): 0.013*"california" + 0.011*"done" + 0.011*"home" + 0.010*"group" + 0.010*"sold" + 0.010*"ready" + 0.009*"souza" + 0.009*"homesales" + 0.009*"listed" + 0.009*"soldgetting"

INFO 2022-10-28 14:59:41,315 ldamodel.py:1074] topic diff=0.520142, rho=0.447214

INFO 2022-10-28 14:59:41,315 ldamodel.py:1001] PROGRESS: pass 0, at document #600/768

INFO 2022-10-28 14:59:41,351 ldamodel.py:794] optimized alpha [0.17977357, 0.20402989, 0.17562437, 0.24766779, 0.14495757]

INFO 2022-10-28 14:59:41,352 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,353 ldamodel.py:1196] topic #0 (0.180): 0.015*"go" + 0.015*"love" + 0.014*"work" + 0.012*"life" + 0.010*"trying" + 0.010*"get" + 0.010*"make" + 0.008*"today" + 0.007*"job" + 0.007*"day"

INFO 2022-10-28 14:59:41,354 ldamodel.py:1196] topic #1 (0.204): 0.015*"work" + 0.014*"time" + 0.012*"meeting" + 0.011*"need" + 0.010*"home" + 0.009*"today" + 0.008*"much" + 0.007*"get" + 0.006*"zoom" + 0.006*"win"

INFO 2022-10-28 14:59:41,355 ldamodel.py:1196] topic #2 (0.176): 0.015*"job" + 0.010*"day" + 0.009*"realestate" + 0.009*"entrepreneur" + 0.009*"another" + 0.008*"way" + 0.008*"let" + 0.007*"think" + 0.006*"long" + 0.006*"sunday"

INFO 2022-10-28 14:59:41,355 ldamodel.py:1196] topic #3 (0.248): 0.029*"work" + 0.020*"home" + 0.020*"office" + 0.016*"working" + 0.015*"today" + 0.009*"new" + 0.008*"like" + 0.008*"day" + 0.008*"back" + 0.007*"one"

INFO 2022-10-28 14:59:41,356 ldamodel.py:1196] topic #4 (0.145): 0.015*"california" + 0.011*"done" + 0.010*"home" + 0.008*"covid" + 0.007*"work" + 0.007*"group" + 0.006*"sold" + 0.006*"ready" + 0.006*"day" + 0.006*"angeles"

INFO 2022-10-28 14:59:41,357 ldamodel.py:1074] topic diff=0.611343, rho=0.408248

INFO 2022-10-28 14:59:41,357 ldamodel.py:1001] PROGRESS: pass 0, at document #700/768

INFO 2022-10-28 14:59:41,392 ldamodel.py:794] optimized alpha [0.18869899, 0.21525112, 0.17533925, 0.28178075, 0.14962877]

INFO 2022-10-28 14:59:41,393 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,395 ldamodel.py:1196] topic #0 (0.189): 0.015*"work" + 0.013*"love" + 0.013*"go" + 0.011*"get" + 0.010*"today" + 0.010*"life" + 0.009*"day" + 0.007*"year" + 0.007*"job" + 0.007*"make"

INFO 2022-10-28 14:59:41,395 ldamodel.py:1196] topic #1 (0.215): 0.013*"work" + 0.013*"time" + 0.013*"meeting" + 0.011*"need" + 0.009*"home" + 0.008*"get" + 0.007*"first" + 0.007*"today" + 0.006*"zoom" + 0.006*"little"

INFO 2022-10-28 14:59:41,396 ldamodel.py:1196] topic #2 (0.175): 0.010*"job" + 0.009*"think" + 0.009*"tuesday" + 0.008*"way" + 0.008*"day" + 0.008*"spring" + 0.007*"long" + 0.007*"let" + 0.006*"realestate" + 0.006*"hybrid"

INFO 2022-10-28 14:59:41,397 ldamodel.py:1196] topic #3 (0.282): 0.028*"work" + 0.022*"office" + 0.020*"home" + 0.016*"working" + 0.014*"today" + 0.010*"day" + 0.010*"back" + 0.009*"thing" + 0.009*"one" + 0.008*"new"

INFO 2022-10-28 14:59:41,397 ldamodel.py:1196] topic #4 (0.150): 0.014*"california" + 0.010*"employee" + 0.009*"done" + 0.008*"going" + 0.008*"corporate" + 0.007*"work" + 0.007*"home" + 0.006*"angeles" + 0.006*"los" + 0.006*"year"

INFO 2022-10-28 14:59:41,397 ldamodel.py:1074] topic diff=0.558420, rho=0.377964

INFO 2022-10-28 14:59:41,424 ldamodel.py:847] -10.448 per-word bound, 1396.7 perplexity estimate based on a held-out corpus of 68 documents with 815 words

INFO 2022-10-28 14:59:41,425 ldamodel.py:1001] PROGRESS: pass 0, at document #768/768

INFO 2022-10-28 14:59:41,445 ldamodel.py:794] optimized alpha [0.1996359, 0.22733627, 0.18495363, 0.31522462, 0.14613664]

INFO 2022-10-28 14:59:41,446 ldamodel.py:233] merging changes from 68 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,447 ldamodel.py:1196] topic #0 (0.200): 0.015*"go" + 0.014*"life" + 0.013*"work" + 0.012*"love" + 0.010*"make" + 0.010*"would" + 0.009*"day" + 0.008*"get" + 0.008*"today" + 0.007*"job"

INFO 2022-10-28 14:59:41,448 ldamodel.py:1196] topic #1 (0.227): 0.014*"time" + 0.012*"meeting" + 0.011*"work" + 0.010*"need" + 0.010*"zoom" + 0.010*"team" + 0.008*"home" + 0.007*"coffee" + 0.007*"got" + 0.006*"even"

INFO 2022-10-28 14:59:41,449 ldamodel.py:1196] topic #2 (0.185): 0.010*"morning" + 0.009*"day" + 0.009*"way" + 0.008*"job" + 0.008*"think" + 0.008*"long" + 0.008*"let" + 0.007*"another" + 0.007*"spring" + 0.006*"open"

INFO 2022-10-28 14:59:41,449 ldamodel.py:1196] topic #3 (0.315): 0.025*"work" + 0.024*"office" + 0.018*"home" + 0.017*"day" + 0.015*"back" + 0.013*"working" + 0.012*"new" + 0.010*"today" + 0.010*"one" + 0.007*"want"

INFO 2022-10-28 14:59:41,450 ldamodel.py:1196] topic #4 (0.146): 0.014*"california" + 0.011*"employee" + 0.010*"covid" + 0.008*"beach" + 0.007*"done" + 0.007*"going" + 0.006*"year" + 0.005*"corporate" + 0.005*"lunchtime" + 0.005*"work"

INFO 2022-10-28 14:59:41,450 ldamodel.py:1074] topic diff=0.476088, rho=0.353553

INFO 2022-10-28 14:59:41,451 ldamodel.py:1001] PROGRESS: pass 1, at document #100/768

INFO 2022-10-28 14:59:41,477 ldamodel.py:794] optimized alpha [0.18810043, 0.20171675, 0.17305338, 0.29309604, 0.14138676]

INFO 2022-10-28 14:59:41,478 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,479 ldamodel.py:1196] topic #0 (0.188): 0.012*"work" + 0.011*"go" + 0.010*"life" + 0.010*"make" + 0.009*"love" + 0.008*"job" + 0.008*"people" + 0.007*"would" + 0.007*"amp" + 0.007*"business"

INFO 2022-10-28 14:59:41,480 ldamodel.py:1196] topic #1 (0.202): 0.012*"meeting" + 0.012*"time" + 0.012*"work" + 0.010*"need" + 0.008*"team" + 0.008*"zoom" + 0.008*"coffee" + 0.006*"home" + 0.006*"even" + 0.006*"see"

INFO 2022-10-28 14:59:41,481 ldamodel.py:1196] topic #2 (0.173): 0.010*"morning" + 0.008*"job" + 0.007*"way" + 0.006*"day" + 0.006*"another" + 0.006*"great" + 0.006*"world" + 0.006*"think" + 0.006*"long" + 0.006*"let"

INFO 2022-10-28 14:59:41,482 ldamodel.py:1196] topic #3 (0.293): 0.026*"office" + 0.025*"work" + 0.014*"home" + 0.013*"back" + 0.012*"day" + 0.011*"working" + 0.011*"new" + 0.010*"today" + 0.010*"remote" + 0.008*"one"

INFO 2022-10-28 14:59:41,482 ldamodel.py:1196] topic #4 (0.141): 0.022*"california" + 0.008*"park" + 0.008*"employee" + 0.007*"nature" + 0.007*"beach" + 0.007*"iphonography" + 0.007*"shotoniphone" + 0.007*"naturelovers" + 0.007*"mvt" + 0.006*"covid"

INFO 2022-10-28 14:59:41,483 ldamodel.py:1074] topic diff=0.450895, rho=0.321412

INFO 2022-10-28 14:59:41,483 ldamodel.py:1001] PROGRESS: pass 1, at document #200/768

INFO 2022-10-28 14:59:41,506 ldamodel.py:794] optimized alpha [0.1910355, 0.20958474, 0.17600764, 0.2929079, 0.1375881]

INFO 2022-10-28 14:59:41,507 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,509 ldamodel.py:1196] topic #0 (0.191): 0.012*"work" + 0.011*"business" + 0.011*"life" + 0.010*"job" + 0.009*"company" + 0.009*"go" + 0.009*"make" + 0.008*"people" + 0.007*"workbnb" + 0.007*"travel"

INFO 2022-10-28 14:59:41,509 ldamodel.py:1196] topic #1 (0.210): 0.016*"work" + 0.013*"meeting" + 0.013*"time" + 0.009*"home" + 0.008*"need" + 0.007*"zoom" + 0.007*"coffee" + 0.006*"team" + 0.006*"much" + 0.006*"even"

INFO 2022-10-28 14:59:41,510 ldamodel.py:1196] topic #2 (0.176): 0.009*"morning" + 0.008*"job" + 0.008*"think" + 0.007*"another" + 0.007*"long" + 0.007*"day" + 0.006*"amp" + 0.006*"open" + 0.006*"way" + 0.006*"let"

INFO 2022-10-28 14:59:41,511 ldamodel.py:1196] topic #3 (0.293): 0.031*"work" + 0.023*"office" + 0.016*"home" + 0.013*"remote" + 0.013*"working" + 0.012*"day" + 0.011*"back" + 0.009*"new" + 0.007*"today" + 0.007*"like"

INFO 2022-10-28 14:59:41,511 ldamodel.py:1196] topic #4 (0.138): 0.017*"california" + 0.008*"change" + 0.007*"grateful" + 0.007*"beach" + 0.006*"corporate" + 0.006*"park" + 0.006*"family" + 0.005*"employee" + 0.005*"able" + 0.005*"nature"

INFO 2022-10-28 14:59:41,511 ldamodel.py:1074] topic diff=0.438547, rho=0.321412

INFO 2022-10-28 14:59:41,512 ldamodel.py:1001] PROGRESS: pass 1, at document #300/768

INFO 2022-10-28 14:59:41,537 ldamodel.py:794] optimized alpha [0.1882309, 0.20935552, 0.18632987, 0.27986538, 0.14232595]

INFO 2022-10-28 14:59:41,538 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,539 ldamodel.py:1196] topic #0 (0.188): 0.017*"work" + 0.016*"business" + 0.009*"career" + 0.009*"money" + 0.009*"dream" + 0.009*"life" + 0.009*"love" + 0.008*"like" + 0.008*"company" + 0.008*"job"

INFO 2022-10-28 14:59:41,539 ldamodel.py:1196] topic #1 (0.209): 0.021*"work" + 0.014*"time" + 0.012*"meeting" + 0.011*"home" + 0.010*"coffee" + 0.007*"got" + 0.007*"nowhiring" + 0.007*"need" + 0.006*"team" + 0.006*"much"

INFO 2022-10-28 14:59:41,540 ldamodel.py:1196] topic #2 (0.186): 0.012*"morning" + 0.010*"day" + 0.009*"long" + 0.009*"entrepreneur" + 0.008*"follow" + 0.008*"let" + 0.007*"realestate" + 0.007*"job" + 0.007*"place" + 0.006*"think"

INFO 2022-10-28 14:59:41,540 ldamodel.py:1196] topic #3 (0.280): 0.040*"work" + 0.022*"office" + 0.021*"home" + 0.011*"working" + 0.011*"back" + 0.011*"like" + 0.010*"remote" + 0.009*"day" + 0.008*"today" + 0.008*"new"

INFO 2022-10-28 14:59:41,541 ldamodel.py:1196] topic #4 (0.142): 0.022*"california" + 0.008*"home" + 0.007*"done" + 0.006*"group" + 0.006*"sold" + 0.006*"corporate" + 0.006*"soldgetting" + 0.006*"listed" + 0.006*"realestateagent" + 0.006*"souza"

INFO 2022-10-28 14:59:41,541 ldamodel.py:1074] topic diff=0.399238, rho=0.321412

INFO 2022-10-28 14:59:41,542 ldamodel.py:1001] PROGRESS: pass 1, at document #400/768

INFO 2022-10-28 14:59:41,564 ldamodel.py:794] optimized alpha [0.18479933, 0.21177283, 0.19334303, 0.30272394, 0.13953488]

INFO 2022-10-28 14:59:41,565 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,567 ldamodel.py:1196] topic #0 (0.185): 0.016*"love" + 0.015*"work" + 0.012*"business" + 0.010*"go" + 0.009*"good" + 0.009*"life" + 0.009*"get" + 0.009*"job" + 0.008*"dream" + 0.008*"saturday"

INFO 2022-10-28 14:59:41,567 ldamodel.py:1196] topic #1 (0.212): 0.016*"work" + 0.015*"time" + 0.011*"home" + 0.011*"meeting" + 0.010*"need" + 0.008*"much" + 0.008*"coffee" + 0.007*"got" + 0.007*"even" + 0.006*"zoom"

INFO 2022-10-28 14:59:41,568 ldamodel.py:1196] topic #2 (0.193): 0.011*"entrepreneur" + 0.011*"morning" + 0.010*"day" + 0.010*"realestate" + 0.010*"let" + 0.010*"sunday" + 0.008*"job" + 0.007*"check" + 0.007*"long" + 0.006*"follow"

INFO 2022-10-28 14:59:41,568 ldamodel.py:1196] topic #3 (0.303): 0.032*"work" + 0.021*"office" + 0.020*"home" + 0.011*"back" + 0.010*"day" + 0.010*"today" + 0.010*"like" + 0.010*"working" + 0.009*"new" + 0.008*"monday"

INFO 2022-10-28 14:59:41,569 ldamodel.py:1196] topic #4 (0.140): 0.021*"california" + 0.010*"home" + 0.010*"group" + 0.009*"sold" + 0.008*"done" + 0.007*"souza" + 0.007*"listed" + 0.007*"homesales" + 0.007*"soldgetting" + 0.007*"realestateagent"

INFO 2022-10-28 14:59:41,569 ldamodel.py:1074] topic diff=0.388357, rho=0.321412

INFO 2022-10-28 14:59:41,570 ldamodel.py:1001] PROGRESS: pass 1, at document #500/768

INFO 2022-10-28 14:59:41,590 ldamodel.py:794] optimized alpha [0.18758786, 0.21469162, 0.1944635, 0.2999581, 0.14217678]

INFO 2022-10-28 14:59:41,591 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,593 ldamodel.py:1196] topic #0 (0.188): 0.015*"work" + 0.015*"love" + 0.010*"business" + 0.010*"life" + 0.010*"job" + 0.010*"go" + 0.009*"get" + 0.008*"make" + 0.008*"trying" + 0.008*"good"

INFO 2022-10-28 14:59:41,594 ldamodel.py:1196] topic #1 (0.215): 0.018*"work" + 0.015*"time" + 0.011*"need" + 0.011*"home" + 0.009*"meeting" + 0.008*"much" + 0.007*"zoom" + 0.007*"even" + 0.007*"coffee" + 0.006*"get"

INFO 2022-10-28 14:59:41,594 ldamodel.py:1196] topic #2 (0.194): 0.012*"another" + 0.011*"realestate" + 0.011*"entrepreneur" + 0.010*"day" + 0.010*"job" + 0.009*"morning" + 0.008*"sunday" + 0.007*"let" + 0.007*"way" + 0.006*"hiring"

INFO 2022-10-28 14:59:41,595 ldamodel.py:1196] topic #3 (0.300): 0.034*"work" + 0.020*"home" + 0.018*"office" + 0.011*"working" + 0.010*"today" + 0.009*"day" + 0.009*"back" + 0.009*"like" + 0.008*"new" + 0.008*"week"

INFO 2022-10-28 14:59:41,595 ldamodel.py:1196] topic #4 (0.142): 0.016*"california" + 0.012*"home" + 0.012*"done" + 0.011*"group" + 0.010*"sold" + 0.009*"realestateagent" + 0.009*"souza" + 0.009*"homesales" + 0.009*"realtorlife" + 0.009*"soldgetting"

INFO 2022-10-28 14:59:41,596 ldamodel.py:1074] topic diff=0.335128, rho=0.321412

INFO 2022-10-28 14:59:41,596 ldamodel.py:1001] PROGRESS: pass 1, at document #600/768

INFO 2022-10-28 14:59:41,618 ldamodel.py:794] optimized alpha [0.19467387, 0.23224805, 0.18951692, 0.31437692, 0.1462007]

INFO 2022-10-28 14:59:41,620 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,621 ldamodel.py:1196] topic #0 (0.195): 0.015*"go" + 0.013*"work" + 0.013*"love" + 0.012*"life" + 0.009*"make" + 0.009*"job" + 0.009*"trying" + 0.009*"get" + 0.009*"today" + 0.008*"day"

INFO 2022-10-28 14:59:41,622 ldamodel.py:1196] topic #1 (0.232): 0.014*"work" + 0.013*"time" + 0.012*"meeting" + 0.011*"need" + 0.010*"home" + 0.008*"much" + 0.007*"today" + 0.007*"get" + 0.006*"zoom" + 0.006*"desk"

INFO 2022-10-28 14:59:41,623 ldamodel.py:1196] topic #2 (0.190): 0.010*"job" + 0.009*"day" + 0.009*"another" + 0.008*"realestate" + 0.008*"let" + 0.008*"way" + 0.008*"entrepreneur" + 0.007*"think" + 0.007*"morning" + 0.007*"long"

INFO 2022-10-28 14:59:41,623 ldamodel.py:1196] topic #3 (0.314): 0.030*"work" + 0.021*"office" + 0.020*"home" + 0.015*"working" + 0.013*"today" + 0.011*"day" + 0.009*"like" + 0.009*"new" + 0.009*"back" + 0.008*"one"

INFO 2022-10-28 14:59:41,624 ldamodel.py:1196] topic #4 (0.146): 0.017*"california" + 0.012*"done" + 0.010*"home" + 0.008*"group" + 0.008*"covid" + 0.007*"sold" + 0.007*"homesales" + 0.007*"souza" + 0.007*"cloudoffice" + 0.007*"realestateagent"

INFO 2022-10-28 14:59:41,624 ldamodel.py:1074] topic diff=0.390220, rho=0.321412

INFO 2022-10-28 14:59:41,625 ldamodel.py:1001] PROGRESS: pass 1, at document #700/768

INFO 2022-10-28 14:59:41,647 ldamodel.py:794] optimized alpha [0.19913414, 0.23720531, 0.1867905, 0.34187663, 0.14703542]

INFO 2022-10-28 14:59:41,649 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,650 ldamodel.py:1196] topic #0 (0.199): 0.014*"work" + 0.013*"go" + 0.011*"love" + 0.010*"today" + 0.010*"life" + 0.009*"get" + 0.009*"day" + 0.009*"job" + 0.007*"make" + 0.007*"people"

INFO 2022-10-28 14:59:41,651 ldamodel.py:1196] topic #1 (0.237): 0.013*"work" + 0.013*"meeting" + 0.012*"time" + 0.011*"need" + 0.009*"home" + 0.008*"get" + 0.007*"first" + 0.006*"today" + 0.006*"zoom" + 0.006*"see"

INFO 2022-10-28 14:59:41,652 ldamodel.py:1196] topic #2 (0.187): 0.009*"think" + 0.008*"tuesday" + 0.008*"way" + 0.007*"spring" + 0.007*"job" + 0.007*"day" + 0.007*"morning" + 0.007*"let" + 0.007*"long" + 0.006*"another"

INFO 2022-10-28 14:59:41,652 ldamodel.py:1196] topic #3 (0.342): 0.029*"work" + 0.021*"office" + 0.019*"home" + 0.015*"working" + 0.013*"day" + 0.012*"today" + 0.009*"back" + 0.009*"one" + 0.009*"thing" + 0.008*"like"

INFO 2022-10-28 14:59:41,653 ldamodel.py:1196] topic #4 (0.147): 0.016*"california" + 0.010*"done" + 0.009*"employee" + 0.008*"home" + 0.007*"corporate" + 0.006*"angeles" + 0.006*"los" + 0.006*"work" + 0.005*"group" + 0.005*"covid"

INFO 2022-10-28 14:59:41,653 ldamodel.py:1074] topic diff=0.360543, rho=0.321412

INFO 2022-10-28 14:59:41,676 ldamodel.py:847] -9.439 per-word bound, 694.3 perplexity estimate based on a held-out corpus of 68 documents with 815 words

INFO 2022-10-28 14:59:41,677 ldamodel.py:1001] PROGRESS: pass 1, at document #768/768

INFO 2022-10-28 14:59:41,690 ldamodel.py:794] optimized alpha [0.20648462, 0.24206385, 0.19246857, 0.36442706, 0.14316475]

INFO 2022-10-28 14:59:41,691 ldamodel.py:233] merging changes from 68 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,692 ldamodel.py:1196] topic #0 (0.206): 0.015*"go" + 0.014*"life" + 0.012*"work" + 0.011*"love" + 0.010*"make" + 0.009*"would" + 0.009*"job" + 0.009*"day" + 0.008*"today" + 0.007*"good"

INFO 2022-10-28 14:59:41,693 ldamodel.py:1196] topic #1 (0.242): 0.012*"meeting" + 0.011*"time" + 0.011*"work" + 0.010*"need" + 0.010*"team" + 0.010*"zoom" + 0.008*"home" + 0.007*"coffee" + 0.007*"got" + 0.006*"desk"

INFO 2022-10-28 14:59:41,694 ldamodel.py:1196] topic #2 (0.192): 0.011*"morning" + 0.008*"way" + 0.008*"new" + 0.008*"think" + 0.008*"day" + 0.008*"let" + 0.008*"long" + 0.007*"another" + 0.007*"spring" + 0.006*"open"

INFO 2022-10-28 14:59:41,694 ldamodel.py:1196] topic #3 (0.364): 0.026*"work" + 0.024*"office" + 0.018*"day" + 0.018*"home" + 0.014*"back" + 0.013*"working" + 0.010*"one" + 0.010*"new" + 0.010*"today" + 0.008*"week"

INFO 2022-10-28 14:59:41,695 ldamodel.py:1196] topic #4 (0.143): 0.015*"california" + 0.010*"employee" + 0.009*"covid" + 0.008*"beach" + 0.008*"done" + 0.006*"home" + 0.006*"afternoon" + 0.005*"lunchtime" + 0.005*"corporate" + 0.005*"environment"

INFO 2022-10-28 14:59:41,695 ldamodel.py:1074] topic diff=0.310045, rho=0.321412

INFO 2022-10-28 14:59:41,696 ldamodel.py:1001] PROGRESS: pass 2, at document #100/768

INFO 2022-10-28 14:59:41,718 ldamodel.py:794] optimized alpha [0.19503799, 0.21292374, 0.17921858, 0.33126903, 0.1383282]

INFO 2022-10-28 14:59:41,719 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,721 ldamodel.py:1196] topic #0 (0.195): 0.011*"work" + 0.011*"go" + 0.010*"make" + 0.010*"life" + 0.010*"job" + 0.008*"people" + 0.008*"love" + 0.007*"edge" + 0.007*"would" + 0.007*"business"

INFO 2022-10-28 14:59:41,722 ldamodel.py:1196] topic #1 (0.213): 0.012*"meeting" + 0.012*"work" + 0.011*"time" + 0.010*"need" + 0.009*"team" + 0.008*"coffee" + 0.008*"zoom" + 0.006*"home" + 0.006*"even" + 0.006*"much"

INFO 2022-10-28 14:59:41,723 ldamodel.py:1196] topic #2 (0.179): 0.010*"morning" + 0.007*"way" + 0.007*"new" + 0.007*"another" + 0.007*"job" + 0.006*"world" + 0.006*"great" + 0.006*"think" + 0.006*"let" + 0.006*"long"

INFO 2022-10-28 14:59:41,723 ldamodel.py:1196] topic #3 (0.331): 0.026*"office" + 0.026*"work" + 0.014*"home" + 0.014*"day" + 0.012*"back" + 0.011*"working" + 0.010*"today" + 0.010*"new" + 0.009*"remote" + 0.009*"one"

INFO 2022-10-28 14:59:41,724 ldamodel.py:1196] topic #4 (0.138): 0.023*"california" + 0.009*"park" + 0.007*"employee" + 0.007*"beach" + 0.007*"nature" + 0.007*"iphonography" + 0.007*"shotoniphone" + 0.007*"naturelovers" + 0.007*"mvt" + 0.006*"covid"

INFO 2022-10-28 14:59:41,724 ldamodel.py:1074] topic diff=0.341216, rho=0.305995

INFO 2022-10-28 14:59:41,725 ldamodel.py:1001] PROGRESS: pass 2, at document #200/768

INFO 2022-10-28 14:59:41,748 ldamodel.py:794] optimized alpha [0.19696988, 0.22055255, 0.18136667, 0.32950586, 0.13531826]

INFO 2022-10-28 14:59:41,749 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,751 ldamodel.py:1196] topic #0 (0.197): 0.012*"job" + 0.011*"work" + 0.011*"business" + 0.011*"life" + 0.009*"company" + 0.009*"go" + 0.009*"make" + 0.008*"people" + 0.007*"futureofwork" + 0.007*"travel"

INFO 2022-10-28 14:59:41,751 ldamodel.py:1196] topic #1 (0.221): 0.015*"work" + 0.013*"meeting" + 0.011*"time" + 0.009*"home" + 0.009*"need" + 0.007*"coffee" + 0.007*"zoom" + 0.007*"team" + 0.007*"much" + 0.006*"even"

INFO 2022-10-28 14:59:41,752 ldamodel.py:1196] topic #2 (0.181): 0.009*"morning" + 0.008*"think" + 0.008*"another" + 0.007*"long" + 0.007*"open" + 0.006*"job" + 0.006*"way" + 0.006*"let" + 0.006*"day" + 0.006*"new"

INFO 2022-10-28 14:59:41,753 ldamodel.py:1196] topic #3 (0.330): 0.032*"work" + 0.024*"office" + 0.016*"home" + 0.013*"day" + 0.013*"working" + 0.013*"remote" + 0.011*"back" + 0.009*"new" + 0.007*"today" + 0.007*"like"

INFO 2022-10-28 14:59:41,753 ldamodel.py:1196] topic #4 (0.135): 0.018*"california" + 0.008*"change" + 0.007*"grateful" + 0.007*"beach" + 0.006*"park" + 0.006*"corporate" + 0.005*"employee" + 0.005*"able" + 0.005*"family" + 0.005*"nature"

INFO 2022-10-28 14:59:41,754 ldamodel.py:1074] topic diff=0.332922, rho=0.305995

INFO 2022-10-28 14:59:41,754 ldamodel.py:1001] PROGRESS: pass 2, at document #300/768

INFO 2022-10-28 14:59:41,778 ldamodel.py:794] optimized alpha [0.19342166, 0.21853842, 0.18492366, 0.31284988, 0.14012122]

INFO 2022-10-28 14:59:41,779 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,780 ldamodel.py:1196] topic #0 (0.193): 0.016*"work" + 0.016*"business" + 0.010*"job" + 0.009*"career" + 0.009*"money" + 0.009*"life" + 0.009*"company" + 0.009*"dream" + 0.008*"like" + 0.008*"success"

INFO 2022-10-28 14:59:41,781 ldamodel.py:1196] topic #1 (0.219): 0.020*"work" + 0.013*"time" + 0.012*"meeting" + 0.011*"home" + 0.010*"coffee" + 0.007*"got" + 0.007*"need" + 0.007*"nowhiring" + 0.007*"team" + 0.006*"much"

INFO 2022-10-28 14:59:41,782 ldamodel.py:1196] topic #2 (0.185): 0.012*"morning" + 0.009*"day" + 0.009*"long" + 0.008*"let" + 0.008*"entrepreneur" + 0.007*"place" + 0.006*"think" + 0.006*"texas" + 0.006*"way" + 0.006*"another"

INFO 2022-10-28 14:59:41,782 ldamodel.py:1196] topic #3 (0.313): 0.040*"work" + 0.022*"office" + 0.021*"home" + 0.011*"day" + 0.011*"working" + 0.011*"like" + 0.011*"back" + 0.010*"remote" + 0.008*"today" + 0.007*"new"

INFO 2022-10-28 14:59:41,783 ldamodel.py:1196] topic #4 (0.140): 0.022*"california" + 0.009*"home" + 0.008*"done" + 0.007*"job" + 0.006*"group" + 0.006*"sold" + 0.006*"homesales" + 0.006*"cloudoffice" + 0.006*"souza" + 0.006*"realestateagent"

INFO 2022-10-28 14:59:41,783 ldamodel.py:1074] topic diff=0.315184, rho=0.305995

INFO 2022-10-28 14:59:41,784 ldamodel.py:1001] PROGRESS: pass 2, at document #400/768

INFO 2022-10-28 14:59:41,804 ldamodel.py:794] optimized alpha [0.18947105, 0.22091793, 0.19067062, 0.33386186, 0.13684028]

INFO 2022-10-28 14:59:41,805 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,807 ldamodel.py:1196] topic #0 (0.189): 0.015*"love" + 0.015*"work" + 0.012*"business" + 0.010*"job" + 0.010*"go" + 0.009*"life" + 0.009*"good" + 0.008*"dream" + 0.008*"entrepreneur" + 0.008*"get"

INFO 2022-10-28 14:59:41,808 ldamodel.py:1196] topic #1 (0.221): 0.016*"work" + 0.013*"time" + 0.011*"meeting" + 0.010*"home" + 0.010*"need" + 0.009*"much" + 0.008*"coffee" + 0.007*"got" + 0.007*"even" + 0.006*"zoom"

INFO 2022-10-28 14:59:41,808 ldamodel.py:1196] topic #2 (0.191): 0.011*"morning" + 0.011*"entrepreneur" + 0.010*"let" + 0.010*"sunday" + 0.009*"day" + 0.007*"check" + 0.007*"long" + 0.006*"another" + 0.006*"tuesday" + 0.006*"businessowner"

INFO 2022-10-28 14:59:41,809 ldamodel.py:1196] topic #3 (0.334): 0.032*"work" + 0.021*"office" + 0.020*"home" + 0.012*"day" + 0.011*"back" + 0.010*"today" + 0.010*"like" + 0.010*"working" + 0.009*"new" + 0.008*"monday"

INFO 2022-10-28 14:59:41,810 ldamodel.py:1196] topic #4 (0.137): 0.022*"california" + 0.010*"home" + 0.010*"done" + 0.010*"group" + 0.009*"sold" + 0.008*"job" + 0.007*"realtorlife" + 0.007*"listed" + 0.007*"souza" + 0.007*"homesales"

INFO 2022-10-28 14:59:41,810 ldamodel.py:1074] topic diff=0.309994, rho=0.305995

INFO 2022-10-28 14:59:41,811 ldamodel.py:1001] PROGRESS: pass 2, at document #500/768

INFO 2022-10-28 14:59:41,834 ldamodel.py:794] optimized alpha [0.19055578, 0.22279479, 0.18909073, 0.32956627, 0.1393638]

INFO 2022-10-28 14:59:41,835 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,837 ldamodel.py:1196] topic #0 (0.191): 0.014*"work" + 0.014*"love" + 0.011*"job" + 0.010*"business" + 0.010*"life" + 0.010*"go" + 0.008*"make" + 0.008*"trying" + 0.008*"get" + 0.008*"good"

INFO 2022-10-28 14:59:41,837 ldamodel.py:1196] topic #1 (0.223): 0.017*"work" + 0.014*"time" + 0.012*"need" + 0.011*"home" + 0.010*"meeting" + 0.008*"much" + 0.007*"zoom" + 0.007*"get" + 0.007*"even" + 0.007*"coffee"

INFO 2022-10-28 14:59:41,838 ldamodel.py:1196] topic #2 (0.189): 0.012*"another" + 0.010*"entrepreneur" + 0.010*"morning" + 0.010*"day" + 0.008*"sunday" + 0.008*"job" + 0.007*"let" + 0.007*"way" + 0.006*"think" + 0.006*"hiring"

INFO 2022-10-28 14:59:41,839 ldamodel.py:1196] topic #3 (0.330): 0.035*"work" + 0.021*"home" + 0.019*"office" + 0.011*"day" + 0.011*"working" + 0.010*"today" + 0.009*"like" + 0.009*"back" + 0.008*"week" + 0.008*"new"

INFO 2022-10-28 14:59:41,839 ldamodel.py:1196] topic #4 (0.139): 0.017*"california" + 0.014*"done" + 0.012*"home" + 0.011*"group" + 0.010*"job" + 0.010*"sold" + 0.010*"realestate" + 0.009*"souza" + 0.009*"listed" + 0.009*"realtorlife"

INFO 2022-10-28 14:59:41,840 ldamodel.py:1074] topic diff=0.260716, rho=0.305995

INFO 2022-10-28 14:59:41,840 ldamodel.py:1001] PROGRESS: pass 2, at document #600/768

INFO 2022-10-28 14:59:41,862 ldamodel.py:794] optimized alpha [0.19722223, 0.23845957, 0.18507709, 0.3410467, 0.14272004]

INFO 2022-10-28 14:59:41,863 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,865 ldamodel.py:1196] topic #0 (0.197): 0.015*"go" + 0.013*"work" + 0.012*"love" + 0.011*"life" + 0.011*"job" + 0.009*"make" + 0.009*"trying" + 0.009*"today" + 0.008*"get" + 0.008*"day"

INFO 2022-10-28 14:59:41,866 ldamodel.py:1196] topic #1 (0.238): 0.014*"work" + 0.012*"time" + 0.012*"meeting" + 0.011*"need" + 0.010*"home" + 0.008*"much" + 0.007*"get" + 0.007*"today" + 0.006*"zoom" + 0.006*"desk"

INFO 2022-10-28 14:59:41,866 ldamodel.py:1196] topic #2 (0.185): 0.009*"another" + 0.008*"day" + 0.008*"let" + 0.008*"way" + 0.008*"entrepreneur" + 0.008*"job" + 0.007*"think" + 0.007*"morning" + 0.007*"long" + 0.006*"tuesday"

INFO 2022-10-28 14:59:41,867 ldamodel.py:1196] topic #3 (0.341): 0.031*"work" + 0.021*"office" + 0.020*"home" + 0.014*"working" + 0.013*"today" + 0.013*"day" + 0.009*"like" + 0.008*"back" + 0.008*"new" + 0.008*"one"

INFO 2022-10-28 14:59:41,868 ldamodel.py:1196] topic #4 (0.143): 0.018*"california" + 0.014*"done" + 0.010*"home" + 0.008*"group" + 0.007*"covid" + 0.007*"job" + 0.007*"sold" + 0.007*"realestate" + 0.007*"listed" + 0.007*"cloudoffice"

INFO 2022-10-28 14:59:41,868 ldamodel.py:1074] topic diff=0.311222, rho=0.305995

INFO 2022-10-28 14:59:41,869 ldamodel.py:1001] PROGRESS: pass 2, at document #700/768

INFO 2022-10-28 14:59:41,889 ldamodel.py:794] optimized alpha [0.2007364, 0.2418768, 0.18307465, 0.3668733, 0.1433353]

INFO 2022-10-28 14:59:41,890 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,891 ldamodel.py:1196] topic #0 (0.201): 0.013*"work" + 0.013*"go" + 0.011*"love" + 0.010*"today" + 0.010*"life" + 0.010*"job" + 0.009*"day" + 0.009*"get" + 0.008*"make" + 0.007*"trying"

INFO 2022-10-28 14:59:41,892 ldamodel.py:1196] topic #1 (0.242): 0.013*"work" + 0.013*"meeting" + 0.011*"time" + 0.011*"need" + 0.009*"home" + 0.008*"get" + 0.006*"zoom" + 0.006*"first" + 0.006*"today" + 0.006*"much"

INFO 2022-10-28 14:59:41,893 ldamodel.py:1196] topic #2 (0.183): 0.009*"think" + 0.008*"tuesday" + 0.008*"way" + 0.007*"spring" + 0.007*"morning" + 0.007*"let" + 0.007*"long" + 0.007*"day" + 0.006*"another" + 0.006*"hybrid"

INFO 2022-10-28 14:59:41,893 ldamodel.py:1196] topic #3 (0.367): 0.030*"work" + 0.022*"office" + 0.019*"home" + 0.014*"day" + 0.014*"working" + 0.013*"today" + 0.009*"one" + 0.009*"back" + 0.009*"thing" + 0.008*"like"

INFO 2022-10-28 14:59:41,894 ldamodel.py:1196] topic #4 (0.143): 0.016*"california" + 0.012*"done" + 0.009*"employee" + 0.009*"home" + 0.007*"corporate" + 0.006*"job" + 0.006*"los" + 0.006*"angeles" + 0.006*"group" + 0.005*"covid"

INFO 2022-10-28 14:59:41,894 ldamodel.py:1074] topic diff=0.284091, rho=0.305995

INFO 2022-10-28 14:59:41,916 ldamodel.py:847] -9.191 per-word bound, 584.6 perplexity estimate based on a held-out corpus of 68 documents with 815 words

INFO 2022-10-28 14:59:41,917 ldamodel.py:1001] PROGRESS: pass 2, at document #768/768

INFO 2022-10-28 14:59:41,928 ldamodel.py:794] optimized alpha [0.20661844, 0.24400021, 0.18827258, 0.3829082, 0.13927574]

INFO 2022-10-28 14:59:41,929 ldamodel.py:233] merging changes from 68 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,931 ldamodel.py:1196] topic #0 (0.207): 0.014*"go" + 0.014*"life" + 0.012*"work" + 0.010*"love" + 0.010*"make" + 0.010*"job" + 0.009*"would" + 0.009*"day" + 0.009*"today" + 0.008*"good"

INFO 2022-10-28 14:59:41,931 ldamodel.py:1196] topic #1 (0.244): 0.012*"meeting" + 0.011*"work" + 0.011*"need" + 0.010*"team" + 0.010*"time" + 0.009*"zoom" + 0.008*"home" + 0.007*"coffee" + 0.007*"got" + 0.006*"desk"

INFO 2022-10-28 14:59:41,932 ldamodel.py:1196] topic #2 (0.188): 0.010*"morning" + 0.010*"new" + 0.008*"way" + 0.008*"think" + 0.008*"let" + 0.008*"long" + 0.008*"day" + 0.007*"another" + 0.007*"spring" + 0.006*"open"

INFO 2022-10-28 14:59:41,933 ldamodel.py:1196] topic #3 (0.383): 0.027*"work" + 0.024*"office" + 0.019*"day" + 0.018*"home" + 0.013*"back" + 0.012*"working" + 0.010*"one" + 0.010*"today" + 0.009*"new" + 0.009*"week"

INFO 2022-10-28 14:59:41,933 ldamodel.py:1196] topic #4 (0.139): 0.015*"california" + 0.010*"employee" + 0.009*"covid" + 0.009*"done" + 0.008*"beach" + 0.006*"home" + 0.006*"afternoon" + 0.005*"corporate" + 0.005*"environment" + 0.005*"lunchtime"

INFO 2022-10-28 14:59:41,933 ldamodel.py:1074] topic diff=0.239196, rho=0.305995

INFO 2022-10-28 14:59:41,934 ldamodel.py:1001] PROGRESS: pass 3, at document #100/768

INFO 2022-10-28 14:59:41,955 ldamodel.py:794] optimized alpha [0.19649082, 0.2157341, 0.17601363, 0.34872165, 0.13565457]

INFO 2022-10-28 14:59:41,956 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,957 ldamodel.py:1196] topic #0 (0.196): 0.011*"work" + 0.011*"go" + 0.011*"job" + 0.010*"life" + 0.010*"make" + 0.008*"people" + 0.008*"love" + 0.007*"business" + 0.007*"would" + 0.007*"edge"

INFO 2022-10-28 14:59:41,958 ldamodel.py:1196] topic #1 (0.216): 0.012*"meeting" + 0.012*"work" + 0.010*"need" + 0.009*"time" + 0.009*"team" + 0.008*"zoom" + 0.008*"coffee" + 0.006*"home" + 0.006*"much" + 0.006*"even"

INFO 2022-10-28 14:59:41,959 ldamodel.py:1196] topic #2 (0.176): 0.010*"morning" + 0.008*"new" + 0.007*"way" + 0.007*"another" + 0.006*"world" + 0.006*"think" + 0.006*"let" + 0.006*"long" + 0.006*"great" + 0.006*"open"

INFO 2022-10-28 14:59:41,959 ldamodel.py:1196] topic #3 (0.349): 0.027*"work" + 0.026*"office" + 0.015*"day" + 0.015*"home" + 0.012*"back" + 0.011*"working" + 0.010*"today" + 0.009*"week" + 0.009*"one" + 0.009*"new"

INFO 2022-10-28 14:59:41,960 ldamodel.py:1196] topic #4 (0.136): 0.022*"california" + 0.009*"park" + 0.007*"employee" + 0.007*"beach" + 0.007*"nature" + 0.006*"iphonography" + 0.006*"shotoniphone" + 0.006*"naturelovers" + 0.006*"mvt" + 0.006*"covid"

INFO 2022-10-28 14:59:41,960 ldamodel.py:1074] topic diff=0.288534, rho=0.292603

INFO 2022-10-28 14:59:41,961 ldamodel.py:1001] PROGRESS: pass 3, at document #200/768

INFO 2022-10-28 14:59:41,983 ldamodel.py:794] optimized alpha [0.19915058, 0.22202091, 0.17671853, 0.34459117, 0.13262449]

INFO 2022-10-28 14:59:41,984 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:41,986 ldamodel.py:1196] topic #0 (0.199): 0.013*"job" + 0.011*"work" + 0.011*"business" + 0.011*"life" + 0.009*"company" + 0.009*"make" + 0.009*"go" + 0.008*"people" + 0.008*"futureofwork" + 0.007*"worker"

INFO 2022-10-28 14:59:41,986 ldamodel.py:1196] topic #1 (0.222): 0.015*"work" + 0.013*"meeting" + 0.010*"time" + 0.009*"need" + 0.009*"home" + 0.007*"zoom" + 0.007*"coffee" + 0.007*"team" + 0.007*"much" + 0.006*"amp"

INFO 2022-10-28 14:59:41,987 ldamodel.py:1196] topic #2 (0.177): 0.009*"morning" + 0.008*"think" + 0.008*"another" + 0.007*"long" + 0.007*"new" + 0.007*"open" + 0.006*"way" + 0.006*"let" + 0.006*"day" + 0.006*"technology"

INFO 2022-10-28 14:59:41,988 ldamodel.py:1196] topic #3 (0.345): 0.032*"work" + 0.024*"office" + 0.017*"home" + 0.014*"day" + 0.013*"working" + 0.012*"remote" + 0.011*"back" + 0.008*"new" + 0.008*"today" + 0.007*"like"

INFO 2022-10-28 14:59:41,988 ldamodel.py:1196] topic #4 (0.133): 0.018*"california" + 0.008*"change" + 0.007*"grateful" + 0.007*"beach" + 0.006*"park" + 0.006*"corporate" + 0.005*"employee" + 0.005*"able" + 0.005*"nature" + 0.005*"family"

INFO 2022-10-28 14:59:41,989 ldamodel.py:1074] topic diff=0.278052, rho=0.292603

INFO 2022-10-28 14:59:41,989 ldamodel.py:1001] PROGRESS: pass 3, at document #300/768

INFO 2022-10-28 14:59:42,013 ldamodel.py:794] optimized alpha [0.19533515, 0.21971941, 0.1802001, 0.32623127, 0.13689247]

INFO 2022-10-28 14:59:42,014 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,016 ldamodel.py:1196] topic #0 (0.195): 0.015*"business" + 0.015*"work" + 0.011*"job" + 0.009*"career" + 0.009*"life" + 0.009*"company" + 0.009*"money" + 0.008*"dream" + 0.008*"like" + 0.008*"success"

INFO 2022-10-28 14:59:42,016 ldamodel.py:1196] topic #1 (0.220): 0.019*"work" + 0.012*"meeting" + 0.012*"time" + 0.011*"home" + 0.010*"coffee" + 0.007*"need" + 0.007*"got" + 0.007*"team" + 0.007*"much" + 0.007*"nowhiring"

INFO 2022-10-28 14:59:42,017 ldamodel.py:1196] topic #2 (0.180): 0.012*"morning" + 0.009*"long" + 0.009*"day" + 0.008*"let" + 0.008*"entrepreneur" + 0.007*"place" + 0.006*"think" + 0.006*"another" + 0.006*"way" + 0.006*"texas"

INFO 2022-10-28 14:59:42,017 ldamodel.py:1196] topic #3 (0.326): 0.041*"work" + 0.022*"office" + 0.021*"home" + 0.012*"day" + 0.011*"working" + 0.011*"like" + 0.010*"back" + 0.010*"remote" + 0.009*"today" + 0.008*"time"

INFO 2022-10-28 14:59:42,018 ldamodel.py:1196] topic #4 (0.137): 0.022*"california" + 0.009*"home" + 0.008*"done" + 0.007*"job" + 0.006*"realestate" + 0.006*"group" + 0.006*"sold" + 0.006*"listed" + 0.006*"souza" + 0.006*"realestateagent"

INFO 2022-10-28 14:59:42,019 ldamodel.py:1074] topic diff=0.266040, rho=0.292603

INFO 2022-10-28 14:59:42,019 ldamodel.py:1001] PROGRESS: pass 3, at document #400/768

INFO 2022-10-28 14:59:42,038 ldamodel.py:794] optimized alpha [0.19145115, 0.22263739, 0.18601501, 0.34646887, 0.13494308]

INFO 2022-10-28 14:59:42,040 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,041 ldamodel.py:1196] topic #0 (0.191): 0.014*"work" + 0.014*"love" + 0.012*"business" + 0.012*"job" + 0.010*"go" + 0.009*"life" + 0.009*"good" + 0.008*"entrepreneur" + 0.008*"dream" + 0.008*"saturday"

INFO 2022-10-28 14:59:42,042 ldamodel.py:1196] topic #1 (0.223): 0.015*"work" + 0.012*"time" + 0.011*"meeting" + 0.010*"need" + 0.010*"home" + 0.009*"much" + 0.008*"coffee" + 0.007*"got" + 0.007*"even" + 0.006*"zoom"

INFO 2022-10-28 14:59:42,043 ldamodel.py:1196] topic #2 (0.186): 0.011*"morning" + 0.011*"entrepreneur" + 0.010*"let" + 0.010*"sunday" + 0.009*"day" + 0.007*"check" + 0.007*"long" + 0.007*"another" + 0.006*"tuesday" + 0.006*"businessowner"

INFO 2022-10-28 14:59:42,043 ldamodel.py:1196] topic #3 (0.346): 0.033*"work" + 0.021*"office" + 0.020*"home" + 0.013*"day" + 0.010*"back" + 0.010*"today" + 0.010*"working" + 0.010*"like" + 0.009*"new" + 0.008*"monday"

INFO 2022-10-28 14:59:42,044 ldamodel.py:1196] topic #4 (0.135): 0.022*"california" + 0.010*"done" + 0.010*"home" + 0.009*"group" + 0.009*"realestate" + 0.009*"job" + 0.008*"sold" + 0.007*"realestateagent" + 0.007*"homesales" + 0.007*"cloudoffice"

INFO 2022-10-28 14:59:42,044 ldamodel.py:1074] topic diff=0.264161, rho=0.292603

INFO 2022-10-28 14:59:42,045 ldamodel.py:1001] PROGRESS: pass 3, at document #500/768

INFO 2022-10-28 14:59:42,065 ldamodel.py:794] optimized alpha [0.19159876, 0.2229233, 0.18592289, 0.344313, 0.13665171]

INFO 2022-10-28 14:59:42,066 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,068 ldamodel.py:1196] topic #0 (0.192): 0.013*"work" + 0.013*"love" + 0.012*"job" + 0.010*"business" + 0.010*"life" + 0.010*"go" + 0.008*"make" + 0.008*"trying" + 0.008*"entrepreneur" + 0.008*"get"

INFO 2022-10-28 14:59:42,069 ldamodel.py:1196] topic #1 (0.223): 0.017*"work" + 0.013*"time" + 0.012*"need" + 0.011*"home" + 0.010*"meeting" + 0.008*"much" + 0.007*"get" + 0.007*"zoom" + 0.007*"coffee" + 0.007*"even"

INFO 2022-10-28 14:59:42,069 ldamodel.py:1196] topic #2 (0.186): 0.012*"another" + 0.010*"entrepreneur" + 0.010*"morning" + 0.009*"day" + 0.008*"sunday" + 0.007*"let" + 0.007*"way" + 0.007*"job" + 0.006*"think" + 0.006*"hiring"

INFO 2022-10-28 14:59:42,070 ldamodel.py:1196] topic #3 (0.344): 0.036*"work" + 0.021*"home" + 0.019*"office" + 0.012*"day" + 0.011*"working" + 0.010*"today" + 0.009*"like" + 0.009*"back" + 0.009*"week" + 0.007*"new"

INFO 2022-10-28 14:59:42,071 ldamodel.py:1196] topic #4 (0.137): 0.017*"california" + 0.015*"done" + 0.011*"home" + 0.011*"realestate" + 0.010*"group" + 0.010*"job" + 0.010*"sold" + 0.009*"listed" + 0.009*"souza" + 0.009*"cloudoffice"

INFO 2022-10-28 14:59:42,071 ldamodel.py:1074] topic diff=0.217299, rho=0.292603

INFO 2022-10-28 14:59:42,072 ldamodel.py:1001] PROGRESS: pass 3, at document #600/768

INFO 2022-10-28 14:59:42,093 ldamodel.py:794] optimized alpha [0.19781709, 0.23755448, 0.18241186, 0.35356525, 0.1395502]

INFO 2022-10-28 14:59:42,094 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,096 ldamodel.py:1196] topic #0 (0.198): 0.014*"go" + 0.012*"work" + 0.012*"love" + 0.011*"job" + 0.011*"life" + 0.009*"make" + 0.009*"trying" + 0.009*"today" + 0.008*"get" + 0.008*"day"

INFO 2022-10-28 14:59:42,096 ldamodel.py:1196] topic #1 (0.238): 0.014*"work" + 0.012*"meeting" + 0.011*"time" + 0.011*"need" + 0.009*"home" + 0.008*"much" + 0.007*"get" + 0.006*"zoom" + 0.006*"today" + 0.006*"desk"

INFO 2022-10-28 14:59:42,097 ldamodel.py:1196] topic #2 (0.182): 0.009*"another" + 0.008*"let" + 0.008*"way" + 0.008*"day" + 0.008*"entrepreneur" + 0.007*"think" + 0.007*"morning" + 0.007*"job" + 0.007*"long" + 0.006*"tuesday"

INFO 2022-10-28 14:59:42,098 ldamodel.py:1196] topic #3 (0.354): 0.032*"work" + 0.021*"office" + 0.021*"home" + 0.014*"working" + 0.014*"day" + 0.013*"today" + 0.009*"like" + 0.008*"back" + 0.008*"new" + 0.008*"one"

INFO 2022-10-28 14:59:42,099 ldamodel.py:1196] topic #4 (0.140): 0.018*"california" + 0.014*"done" + 0.010*"home" + 0.008*"realestate" + 0.008*"group" + 0.008*"job" + 0.007*"covid" + 0.007*"sold" + 0.007*"realtorlife" + 0.007*"souza"

INFO 2022-10-28 14:59:42,099 ldamodel.py:1074] topic diff=0.265041, rho=0.292603

INFO 2022-10-28 14:59:42,099 ldamodel.py:1001] PROGRESS: pass 3, at document #700/768

INFO 2022-10-28 14:59:42,122 ldamodel.py:794] optimized alpha [0.20066427, 0.2404108, 0.18080637, 0.37801436, 0.14072503]

INFO 2022-10-28 14:59:42,123 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,125 ldamodel.py:1196] topic #0 (0.201): 0.013*"work" + 0.012*"go" + 0.011*"love" + 0.010*"today" + 0.010*"life" + 0.010*"job" + 0.008*"day" + 0.008*"get" + 0.008*"make" + 0.007*"trying"

INFO 2022-10-28 14:59:42,126 ldamodel.py:1196] topic #1 (0.240): 0.013*"work" + 0.013*"meeting" + 0.011*"need" + 0.011*"time" + 0.008*"home" + 0.008*"get" + 0.006*"zoom" + 0.006*"much" + 0.006*"first" + 0.006*"today"

INFO 2022-10-28 14:59:42,127 ldamodel.py:1196] topic #2 (0.181): 0.009*"think" + 0.008*"tuesday" + 0.008*"way" + 0.007*"morning" + 0.007*"spring" + 0.007*"let" + 0.007*"long" + 0.007*"day" + 0.006*"another" + 0.006*"hybrid"

INFO 2022-10-28 14:59:42,127 ldamodel.py:1196] topic #3 (0.378): 0.031*"work" + 0.022*"office" + 0.019*"home" + 0.015*"day" + 0.014*"working" + 0.013*"today" + 0.009*"one" + 0.009*"back" + 0.008*"thing" + 0.008*"like"

INFO 2022-10-28 14:59:42,128 ldamodel.py:1196] topic #4 (0.141): 0.016*"california" + 0.013*"done" + 0.009*"employee" + 0.008*"home" + 0.007*"corporate" + 0.007*"job" + 0.006*"realestate" + 0.006*"angeles" + 0.006*"los" + 0.006*"group"

INFO 2022-10-28 14:59:42,129 ldamodel.py:1074] topic diff=0.238868, rho=0.292603

INFO 2022-10-28 14:59:42,148 ldamodel.py:847] -9.061 per-word bound, 533.9 perplexity estimate based on a held-out corpus of 68 documents with 815 words

INFO 2022-10-28 14:59:42,149 ldamodel.py:1001] PROGRESS: pass 3, at document #768/768

INFO 2022-10-28 14:59:42,160 ldamodel.py:794] optimized alpha [0.20581722, 0.24198253, 0.18566324, 0.38889664, 0.13699433]

INFO 2022-10-28 14:59:42,162 ldamodel.py:233] merging changes from 68 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,163 ldamodel.py:1196] topic #0 (0.206): 0.014*"go" + 0.014*"life" + 0.012*"work" + 0.010*"make" + 0.010*"love" + 0.010*"job" + 0.009*"would" + 0.009*"today" + 0.009*"day" + 0.008*"good"

INFO 2022-10-28 14:59:42,164 ldamodel.py:1196] topic #1 (0.242): 0.012*"meeting" + 0.011*"work" + 0.011*"need" + 0.010*"team" + 0.009*"zoom" + 0.009*"time" + 0.008*"home" + 0.007*"coffee" + 0.007*"got" + 0.006*"desk"

INFO 2022-10-28 14:59:42,165 ldamodel.py:1196] topic #2 (0.186): 0.011*"new" + 0.010*"morning" + 0.008*"way" + 0.008*"think" + 0.008*"let" + 0.008*"long" + 0.007*"another" + 0.007*"day" + 0.006*"spring" + 0.006*"open"

INFO 2022-10-28 14:59:42,165 ldamodel.py:1196] topic #3 (0.389): 0.028*"work" + 0.024*"office" + 0.020*"day" + 0.018*"home" + 0.013*"back" + 0.012*"working" + 0.010*"one" + 0.010*"today" + 0.009*"week" + 0.008*"new"

INFO 2022-10-28 14:59:42,166 ldamodel.py:1196] topic #4 (0.137): 0.015*"california" + 0.010*"done" + 0.010*"employee" + 0.009*"covid" + 0.008*"beach" + 0.006*"home" + 0.005*"afternoon" + 0.005*"corporate" + 0.005*"environment" + 0.005*"lunchtime"

INFO 2022-10-28 14:59:42,167 ldamodel.py:1074] topic diff=0.200569, rho=0.292603

INFO 2022-10-28 14:59:42,167 ldamodel.py:1001] PROGRESS: pass 4, at document #100/768

INFO 2022-10-28 14:59:42,189 ldamodel.py:794] optimized alpha [0.19585069, 0.21535587, 0.17435808, 0.35555747, 0.13378218]

INFO 2022-10-28 14:59:42,190 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,192 ldamodel.py:1196] topic #0 (0.196): 0.011*"job" + 0.011*"work" + 0.010*"go" + 0.010*"life" + 0.010*"make" + 0.008*"people" + 0.008*"love" + 0.007*"business" + 0.007*"would" + 0.007*"company"

INFO 2022-10-28 14:59:42,192 ldamodel.py:1196] topic #1 (0.215): 0.012*"meeting" + 0.011*"work" + 0.010*"need" + 0.009*"team" + 0.009*"time" + 0.008*"zoom" + 0.008*"coffee" + 0.006*"much" + 0.006*"home" + 0.006*"even"

INFO 2022-10-28 14:59:42,193 ldamodel.py:1196] topic #2 (0.174): 0.010*"morning" + 0.009*"new" + 0.007*"way" + 0.007*"another" + 0.006*"world" + 0.006*"think" + 0.006*"let" + 0.006*"long" + 0.006*"open" + 0.006*"great"

INFO 2022-10-28 14:59:42,193 ldamodel.py:1196] topic #3 (0.356): 0.027*"work" + 0.026*"office" + 0.015*"day" + 0.015*"home" + 0.012*"back" + 0.011*"working" + 0.010*"today" + 0.010*"week" + 0.009*"one" + 0.009*"remote"

INFO 2022-10-28 14:59:42,194 ldamodel.py:1196] topic #4 (0.134): 0.022*"california" + 0.008*"park" + 0.007*"employee" + 0.007*"beach" + 0.006*"nature" + 0.006*"done" + 0.006*"naturelovers" + 0.006*"shotoniphone" + 0.006*"iphonography" + 0.006*"mvt"

INFO 2022-10-28 14:59:42,195 ldamodel.py:1074] topic diff=0.256748, rho=0.280828

INFO 2022-10-28 14:59:42,195 ldamodel.py:1001] PROGRESS: pass 4, at document #200/768

INFO 2022-10-28 14:59:42,213 ldamodel.py:794] optimized alpha [0.19896886, 0.22130443, 0.17521787, 0.35106468, 0.13105777]

INFO 2022-10-28 14:59:42,215 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,216 ldamodel.py:1196] topic #0 (0.199): 0.013*"job" + 0.011*"work" + 0.011*"life" + 0.011*"business" + 0.009*"company" + 0.009*"make" + 0.008*"go" + 0.008*"people" + 0.008*"futureofwork" + 0.007*"worker"

INFO 2022-10-28 14:59:42,217 ldamodel.py:1196] topic #1 (0.221): 0.014*"work" + 0.013*"meeting" + 0.010*"time" + 0.009*"need" + 0.008*"home" + 0.007*"zoom" + 0.007*"coffee" + 0.007*"team" + 0.007*"much" + 0.007*"amp"

INFO 2022-10-28 14:59:42,218 ldamodel.py:1196] topic #2 (0.175): 0.009*"morning" + 0.008*"think" + 0.008*"another" + 0.008*"new" + 0.007*"long" + 0.007*"open" + 0.006*"way" + 0.006*"let" + 0.006*"day" + 0.006*"technology"

INFO 2022-10-28 14:59:42,218 ldamodel.py:1196] topic #3 (0.351): 0.033*"work" + 0.024*"office" + 0.017*"home" + 0.015*"day" + 0.013*"working" + 0.012*"remote" + 0.011*"back" + 0.008*"today" + 0.008*"new" + 0.007*"week"

INFO 2022-10-28 14:59:42,219 ldamodel.py:1196] topic #4 (0.131): 0.018*"california" + 0.008*"change" + 0.007*"grateful" + 0.007*"beach" + 0.006*"park" + 0.006*"corporate" + 0.006*"employee" + 0.005*"able" + 0.005*"nature" + 0.005*"done"

INFO 2022-10-28 14:59:42,219 ldamodel.py:1074] topic diff=0.245085, rho=0.280828

INFO 2022-10-28 14:59:42,220 ldamodel.py:1001] PROGRESS: pass 4, at document #300/768

INFO 2022-10-28 14:59:42,241 ldamodel.py:794] optimized alpha [0.19523726, 0.21909595, 0.17859928, 0.33215046, 0.1351897]

INFO 2022-10-28 14:59:42,242 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,244 ldamodel.py:1196] topic #0 (0.195): 0.015*"business" + 0.015*"work" + 0.011*"job" + 0.009*"life" + 0.009*"career" + 0.009*"company" + 0.009*"money" + 0.008*"dream" + 0.008*"like" + 0.008*"would"

INFO 2022-10-28 14:59:42,245 ldamodel.py:1196] topic #1 (0.219): 0.018*"work" + 0.012*"meeting" + 0.011*"time" + 0.010*"home" + 0.010*"coffee" + 0.008*"need" + 0.007*"got" + 0.007*"team" + 0.007*"much" + 0.006*"nowhiring"

INFO 2022-10-28 14:59:42,245 ldamodel.py:1196] topic #2 (0.179): 0.012*"morning" + 0.009*"long" + 0.009*"day" + 0.008*"let" + 0.008*"entrepreneur" + 0.007*"place" + 0.006*"think" + 0.006*"another" + 0.006*"way" + 0.006*"texas"

INFO 2022-10-28 14:59:42,246 ldamodel.py:1196] topic #3 (0.332): 0.041*"work" + 0.023*"office" + 0.021*"home" + 0.013*"day" + 0.012*"working" + 0.011*"like" + 0.010*"back" + 0.009*"remote" + 0.009*"today" + 0.008*"time"

INFO 2022-10-28 14:59:42,247 ldamodel.py:1196] topic #4 (0.135): 0.022*"california" + 0.008*"done" + 0.008*"home" + 0.007*"job" + 0.006*"realestate" + 0.006*"group" + 0.006*"sold" + 0.006*"corporate" + 0.006*"souza" + 0.006*"listed"

INFO 2022-10-28 14:59:42,247 ldamodel.py:1074] topic diff=0.236147, rho=0.280828

INFO 2022-10-28 14:59:42,248 ldamodel.py:1001] PROGRESS: pass 4, at document #400/768

INFO 2022-10-28 14:59:42,267 ldamodel.py:794] optimized alpha [0.19259767, 0.22206187, 0.18423861, 0.352042, 0.13315527]

INFO 2022-10-28 14:59:42,269 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,271 ldamodel.py:1196] topic #0 (0.193): 0.014*"work" + 0.013*"love" + 0.012*"business" + 0.012*"job" + 0.010*"good" + 0.009*"go" + 0.009*"life" + 0.008*"entrepreneur" + 0.008*"dream" + 0.008*"company"

INFO 2022-10-28 14:59:42,271 ldamodel.py:1196] topic #1 (0.222): 0.015*"work" + 0.012*"time" + 0.011*"meeting" + 0.010*"need" + 0.010*"home" + 0.009*"much" + 0.008*"coffee" + 0.007*"got" + 0.007*"even" + 0.006*"zoom"

INFO 2022-10-28 14:59:42,272 ldamodel.py:1196] topic #2 (0.184): 0.011*"morning" + 0.011*"entrepreneur" + 0.010*"let" + 0.009*"sunday" + 0.009*"day" + 0.007*"long" + 0.007*"check" + 0.007*"another" + 0.006*"tuesday" + 0.006*"businessowner"

INFO 2022-10-28 14:59:42,273 ldamodel.py:1196] topic #3 (0.352): 0.034*"work" + 0.022*"office" + 0.020*"home" + 0.014*"day" + 0.011*"today" + 0.010*"back" + 0.010*"working" + 0.009*"like" + 0.008*"new" + 0.008*"monday"

INFO 2022-10-28 14:59:42,274 ldamodel.py:1196] topic #4 (0.133): 0.022*"california" + 0.010*"done" + 0.010*"home" + 0.009*"group" + 0.009*"realestate" + 0.009*"job" + 0.008*"sold" + 0.007*"realtorlife" + 0.007*"cloudoffice" + 0.007*"soldgetting"

INFO 2022-10-28 14:59:42,274 ldamodel.py:1074] topic diff=0.235534, rho=0.280828

INFO 2022-10-28 14:59:42,275 ldamodel.py:1001] PROGRESS: pass 4, at document #500/768

INFO 2022-10-28 14:59:42,292 ldamodel.py:794] optimized alpha [0.19253674, 0.22237869, 0.18447535, 0.35046908, 0.13455795]

INFO 2022-10-28 14:59:42,293 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,295 ldamodel.py:1196] topic #0 (0.193): 0.013*"work" + 0.013*"love" + 0.012*"job" + 0.011*"business" + 0.010*"life" + 0.009*"go" + 0.008*"make" + 0.008*"good" + 0.008*"trying" + 0.008*"entrepreneur"

INFO 2022-10-28 14:59:42,296 ldamodel.py:1196] topic #1 (0.222): 0.016*"work" + 0.012*"time" + 0.012*"need" + 0.010*"home" + 0.010*"meeting" + 0.008*"much" + 0.007*"get" + 0.007*"zoom" + 0.007*"coffee" + 0.007*"even"

INFO 2022-10-28 14:59:42,297 ldamodel.py:1196] topic #2 (0.184): 0.012*"another" + 0.010*"entrepreneur" + 0.010*"morning" + 0.009*"day" + 0.008*"sunday" + 0.007*"let" + 0.007*"way" + 0.007*"job" + 0.006*"think" + 0.006*"hiring"

INFO 2022-10-28 14:59:42,297 ldamodel.py:1196] topic #3 (0.350): 0.036*"work" + 0.021*"home" + 0.019*"office" + 0.013*"day" + 0.011*"working" + 0.011*"today" + 0.009*"back" + 0.009*"week" + 0.009*"like" + 0.007*"new"

INFO 2022-10-28 14:59:42,298 ldamodel.py:1196] topic #4 (0.135): 0.017*"california" + 0.015*"done" + 0.011*"realestate" + 0.011*"home" + 0.010*"group" + 0.010*"job" + 0.010*"sold" + 0.009*"cloudoffice" + 0.009*"souza" + 0.009*"homesales"

INFO 2022-10-28 14:59:42,298 ldamodel.py:1074] topic diff=0.192729, rho=0.280828

INFO 2022-10-28 14:59:42,299 ldamodel.py:1001] PROGRESS: pass 4, at document #600/768

INFO 2022-10-28 14:59:42,317 ldamodel.py:794] optimized alpha [0.19841008, 0.2352511, 0.18128434, 0.35965866, 0.13781807]

INFO 2022-10-28 14:59:42,319 ldamodel.py:233] merging changes from 100 documents into a model of 768 documents

INFO 2022-10-28 14:59:42,320 ldamodel.py:1196] topic #0 (0.198): 0.014*"go" + 0.012*"job" + 0.012*"work" + 0.012*"love" + 0.011*"life" + 0.009*"make" + 0.009*"trying" + 0.009*"today" + 0.008*"get" + 0.008*"business"